# K8S Persistent Storage: NFS + StorageClass

# 1. Set up the NFS server

Install nfs-utils on every K8S node:

    [root@k8s-node02 ~]# yum install nfs-utils -y

Configure the NFS service on k8s-node02:

    [root@k8s-node02 ~]# mkdir /data/nfs -p
    [root@k8s-node02 ~]# cat /etc/exports
    /data/nfs 192.168.1.0/24(rw,no_root_squash)
    [root@k8s-node02 ~]# exportfs -arv    # apply the NFS export configuration
    [root@k8s-node02 ~]# systemctl start nfs-server && systemctl enable nfs-server && systemctl status nfs-server
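Before moving on, it can help to confirm from another node that the export is actually visible. The sketch below assumes `showmount` (shipped with nfs-utils) is available on the client and that the NFS server is reachable at 192.168.1.75, the address used later in this guide. `export_listed` is a hypothetical helper name, not part of nfs-utils; it only checks whether a path appears in `showmount -e` output.

```shell
# Hypothetical helper: succeed if the path given as $1 appears as an
# exported directory in `showmount -e`-style output read from stdin.
export_listed() {
  # NR > 1 skips the "Export list for <server>:" header line;
  # the first column of every remaining line is an exported path.
  awk -v p="$1" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# Usage on any K8S node (needs network access to the NFS server):
#   showmount -e 192.168.1.75 | export_listed /data/nfs && echo "export visible"
```

If the check fails, the usual suspects are a firewall between the nodes or an `/etc/exports` entry that does not cover the client's subnet.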

# 2. Create RBAC

    [root@k8s-master01 ~]# cat 01-rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: default
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

    [root@k8s-master01 ~]# kubectl apply -f 01-rbac.yaml
    serviceaccount/nfs-client-provisioner created
    clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
    clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
    role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
    rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
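One way to sanity-check the bindings is to ask the API server what the ServiceAccount is allowed to do, using `kubectl auth can-i` with impersonation. This is a minimal sketch, assuming the current kubectl user is allowed to impersonate ServiceAccounts (cluster admins normally are). The function names `sa_subject` and `check_provisioner_rbac` are hypothetical, and the three checks are only a sample of the rules granted above.

```shell
# Build the impersonation subject for a ServiceAccount:
# system:serviceaccount:<namespace>:<name>
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: print yes/no for a few permissions the
# provisioner's ServiceAccount should hold after applying 01-rbac.yaml.
check_provisioner_rbac() {
  subject="$(sa_subject default nfs-client-provisioner)"
  # Cluster-scoped permission from the ClusterRole
  kubectl auth can-i create persistentvolumes --as="$subject"
  # Namespaced permission from the ClusterRole
  kubectl auth can-i update persistentvolumeclaims --as="$subject" -n default
  # Leader-election permission from the namespaced Role
  kubectl auth can-i update endpoints --as="$subject" -n default
}

# Usage on a machine with cluster access:
#   check_provisioner_rbac
```

Each line of output should read `yes`; a `no` usually means the namespace in the manifest does not match the namespace where the provisioner runs.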

# 3. Create the nfs-provisioner

    [root@k8s-master01 ~]# cat 02-nfs-provisioner.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: default
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: registry.cn-hangzhou.aliyuncs.com/old_xu/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME   # name of the nfs-provisioner; the StorageClass created later must use the same value
                  value: nfzl.com/nfs
                - name: NFS_SERVER         # IP address of the NFS server
                  value: 192.168.1.75
                - name: NFS_PATH           # path exported by the NFS server
                  value: /data/nfs
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.1.75
                path: /data/nfs

    [root@k8s-master01 ~]# kubectl apply -f 02-nfs-provisioner.yaml
    [root@k8s-master01 ~]# kubectl get pods
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-6bcc4587f8-zp8qc   1/1     Running   0          17s

# 4. Create the StorageClass

    [root@k8s-master01 ~]# cat 03-storageClass.yaml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-storage          # StorageClass name that a PVC must reference explicitly
    provisioner: nfzl.com/nfs    # provisioner name; must match the PROVISIONER_NAME value set in the Deployment above
    parameters:
      archiveOnDelete: "false"   # "false": deleting the PVC also deletes the directory contents; "true": the data is kept

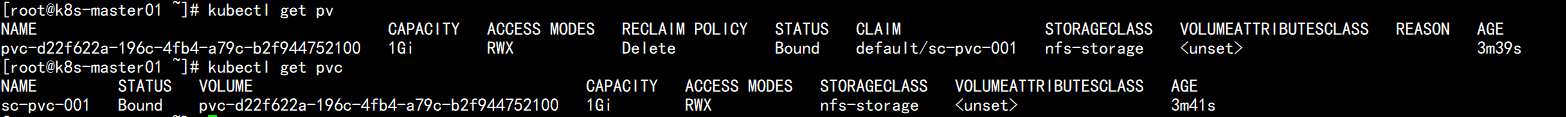
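Because a mismatch between `PROVISIONER_NAME` and the StorageClass `provisioner` field leaves PVCs stuck in `Pending`, a quick text comparison of the two manifests can catch it early. The sketch below is an assumption-laden convenience, not a YAML parser: it expects the `value:` line to directly follow the `- name: PROVISIONER_NAME` line and `provisioner:` to start at column 0, as in the files shown above. All three function names are hypothetical.

```shell
# Print the PROVISIONER_NAME value from a Deployment manifest ($1).
# Assumes the "value:" line directly follows "- name: PROVISIONER_NAME".
deployment_provisioner() {
  awk '/name:[ \t]*PROVISIONER_NAME/ {
         getline                          # move to the "value:" line
         sub(/^[ \t]*value:[ \t]*/, "")   # drop the key
         sub(/[ \t]*#.*$/, "")            # drop a trailing comment
         gsub(/"/, "")                    # drop optional quotes
         print
         exit
       }' "$1"
}

# Print the top-level "provisioner:" value from a StorageClass manifest ($1).
storageclass_provisioner() {
  awk '/^provisioner:/ {
         sub(/^provisioner:[ \t]*/, "")
         sub(/[ \t]*#.*$/, "")
         gsub(/"/, "")
         print
         exit
       }' "$1"
}

# Succeed only when both manifests name the same, non-empty provisioner.
provisioner_names_match() {
  d="$(deployment_provisioner "$1")"
  s="$(storageclass_provisioner "$2")"
  [ -n "$d" ] && [ "$d" = "$s" ]
}

# Usage:
#   provisioner_names_match 02-nfs-provisioner.yaml 03-storageClass.yaml && echo "names match"
```

Once the names agree, the StorageClass would typically be created with `kubectl apply -f 03-storageClass.yaml` and listed with `kubectl get storageclass`.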

# 5. Create the PVC

    [root@k8s-master01 ~]# cat 04-nginx-pvc.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: sc-pvc-001
    spec:
      storageClassName: "nfs-storage"   # explicitly selects the StorageClass whose provisioner creates the PV
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi                  # request a size that fits the actual workload
    [root@k8s-master01 ~]# kubectl apply -f 04-nginx-pvc.yaml
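After the PVC is applied, the provisioner should create a PV and the claim should move from `Pending` to `Bound`. A small polling loop around `kubectl get pvc` is one way to wait for that; the sketch below assumes the PVC lives in the `default` namespace unless told otherwise, and the helper names `pvc_phase` and `wait_for_bound` are hypothetical.

```shell
# Print the current phase (Pending, Bound, ...) of a PVC.
# $1 = PVC name, $2 = namespace (default: "default")
pvc_phase() {
  kubectl get pvc "$1" -n "${2:-default}" -o jsonpath='{.status.phase}'
}

# Hypothetical helper: poll until the PVC reports Bound.
# $1 = PVC name, $2 = max attempts (default 30), $3 = seconds between attempts (default 2)
wait_for_bound() {
  attempts="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if [ "$(pvc_phase "$1")" = "Bound" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1   # still not Bound after all attempts
}

# Usage:
#   wait_for_bound sc-pvc-001 && echo "PVC is bound"
```

If the claim stays `Pending`, `kubectl describe pvc sc-pvc-001` and the provisioner pod's logs are the first places to look.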

# 6. Mount the PVC and test

    [root@k8s-master01 ~]# cat 05-nginx-pod.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-sc-001
    spec:
      containers:
        - name: nginx-sc-001
          image: nginx
          volumeMounts:
            - name: nginx-page
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nginx-page
          persistentVolumeClaim:
            claimName: sc-pvc-001
    [root@k8s-master01 ~]# kubectl apply -f 05-nginx-pod.yaml
    [root@k8s-master01 ~]# kubectl get pods -o wide
    NAME           READY   STATUS    RESTARTS   AGE   IP              NODE         NOMINATED NODE   READINESS GATES
    nginx-sc-001   1/1     Running   0          15s   172.16.85.244   k8s-node01   <none>           <none>
    [root@k8s-master01 ~]# curl 172.16.85.244
    hello world
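The transcript does not show where the `hello world` page comes from; presumably an `index.html` was written into the directory that the provisioner created on the NFS server. By default nfs-subdir-external-provisioner names that directory `<namespace>-<pvcName>-<pvName>` under the exported path. The sketch below locates it, assuming the `/data/nfs` export used in this guide; `pv_dir_name` and `pvc_backing_path` are hypothetical helper names.

```shell
# Directory name the provisioner creates under the NFS export by default:
# <namespace>-<pvcName>-<pvName>
pv_dir_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Hypothetical helper, run where kubectl works: print the path on the NFS
# server that backs a PVC. $1 = PVC name, $2 = namespace (default: "default")
pvc_backing_path() {
  ns="${2:-default}"
  # The bound PV's name is recorded in the PVC's spec.volumeName field.
  pv="$(kubectl get pvc "$1" -n "$ns" -o jsonpath='{.spec.volumeName}')"
  printf '/data/nfs/%s\n' "$(pv_dir_name "$ns" "$1" "$pv")"
}

# Usage:
#   pvc_backing_path sc-pvc-001        # on k8s-master01, prints the directory path
#   echo "hello world" > <printed path>/index.html    # on the NFS server
```

Writing the file on the NFS server rather than inside the pod also confirms that the data lives outside the container.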