# K8s Cloud-Native Storage with Rook-Ceph

# 1. StorageClass Dynamic Provisioning

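StorageClass: a storage class, created by the K8s administrator, that manages PVs dynamically. It can point to different storage backends, such as Ceph or GlusterFS. Storage requests (PVCs) can then reference a StorageClass, and the StorageClass automatically creates and deletes the corresponding PVs.

Implementation approaches:

- in-tree: built into the core K8s code; supporting each kind of storage requires writing the corresponding code inside Kubernetes itself.

- out-of-tree: the storage vendor provides a driver (CSI or FlexVolume) that is installed into the K8s cluster. The StorageClass only needs to reference that driver, and the driver manages the storage on the StorageClass's behalf.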

StorageClass official documentation: https://kubernetes.io/docs/concepts/storage/storage-classes/
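To make the out-of-tree model concrete, here is a minimal sketch: the StorageClass names a CSI driver in `provisioner`, and a PVC only has to reference the StorageClass by name. The names `example-sc` and `example-pvc` are hypothetical, and the driver-specific `parameters` (pool, cluster ID, secrets) are deliberately left out, so this fragment illustrates the structure but will not provision a volume on its own; section 7.1 uses the complete Rook RBD StorageClass.

```yaml
# Hypothetical StorageClass: only the fields relevant to dynamic provisioning are shown
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: example-sc                        # hypothetical name
provisioner: rook-ceph.rbd.csi.ceph.com   # CSI driver that creates and deletes the PVs
reclaimPolicy: Delete                     # delete the PV and its backing volume when the PVC is deleted
allowVolumeExpansion: true                # allow PVCs of this class to be resized
volumeBindingMode: Immediate              # provision as soon as the PVC is created
# parameters: ...                         # driver-specific settings omitted in this sketch
---
# A PVC only needs storageClassName; the matching PV is created automatically
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc                       # hypothetical name
spec:
  storageClassName: example-sc
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```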

# 2. Cloud-Native Storage: Rook

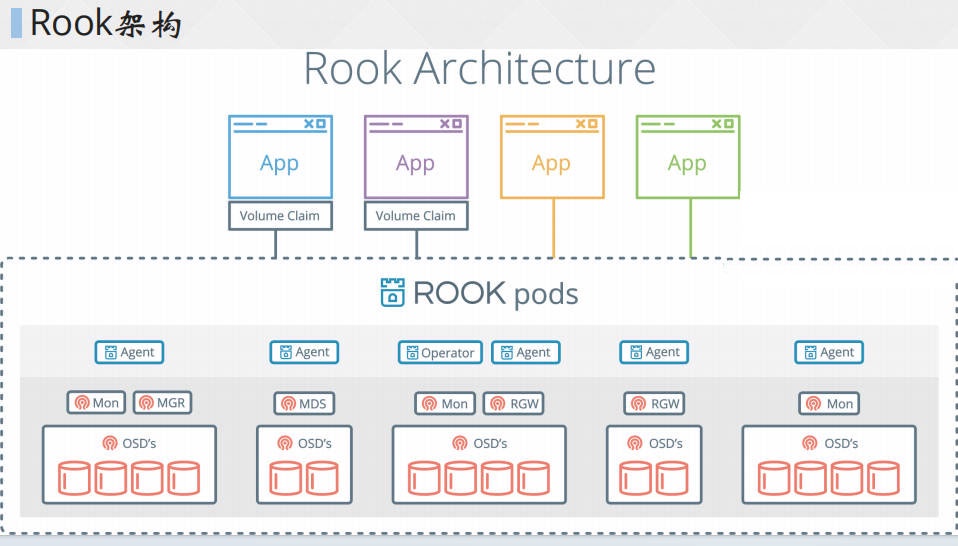

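Rook is a self-managing distributed storage orchestrator. It is not a storage system itself; instead, it builds a bridge between the storage system and K8s, which makes storage systems much easier to deploy and maintain. Rook turns a distributed storage system into a self-managing, self-scaling, and self-healing storage service. It automates storage operations such as deployment, configuration, scaling, upgrades, migration, disaster recovery, monitoring, and resource management, without manual intervention. Rook also supports CSI, which can be used to snapshot, expand, and clone PVCs.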

Rook official site: https://rook.io/

# 3. Installing Rook

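Environment preparation:

- The K8s cluster has at least five nodes, each with at least 5 GB of memory and 2 CPU cores

- Time is synchronized across all nodes

- There are at least three storage nodes, each with at least one raw disk; here a raw disk is added to k8s-master03, k8s-node01, and k8s-node02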

# 3.1 Download the Rook installation files

```
[root@k8s-master01 ~]# git clone --single-branch --branch v1.17.2 https://github.com/rook/rook.git
```

# 3.2 Configuration changes

```
[root@k8s-master01 ~]# cd rook/deploy/examples
[root@k8s-master01 examples]# vim operator.yaml
# CSI images, pulled here from an Alibaba Cloud mirror registry
ROOK_CSI_CEPH_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/cephcsi:v3.14.0"
ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/csi-node-driver-registrar:v2.13.0"
ROOK_CSI_RESIZER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/csi-resizer:v1.13.1"
ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/csi-provisioner:v5.1.0"
ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/csi-snapshotter:v8.2.0"
ROOK_CSI_ATTACHER_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/csi-attacher:v4.8.0"
# Change ROOK_ENABLE_DISCOVERY_DAEMON to true
ROOK_ENABLE_DISCOVERY_DAEMON: "true"
```
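The keys above are not container environment variables in the Deployment; a trimmed sketch of where they sit is shown below. It assumes the v1.17 layout of `operator.yaml`, in which these settings are `data` entries of the `rook-ceph-operator-config` ConfigMap; the other keys of the file are elided, so check your own copy before editing.

```yaml
# Sketch of the surrounding structure in operator.yaml (other keys elided)
kind: ConfigMap
apiVersion: v1
metadata:
  name: rook-ceph-operator-config
  namespace: rook-ceph # namespace:operator
data:
  # CSI images (values as set above)
  ROOK_CSI_CEPH_IMAGE: "registry.cn-hangzhou.aliyuncs.com/kubernetes_public/cephcsi:v3.14.0"
  # ... remaining ROOK_CSI_*_IMAGE keys ...
  # Run the rook-discover DaemonSet that detects disks on each node
  ROOK_ENABLE_DISCOVERY_DAEMON: "true"
```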

# 3.3 Deploy Rook

```
[root@k8s-master01 examples]# kubectl create -f crds.yaml -f common.yaml -f operator.yaml
[root@k8s-master01 examples]# kubectl get pods -n rook-ceph
NAME READY STATUS RESTARTS AGE
rook-ceph-operator-84ff77778b-7ww2w 1/1 Running 0 91m
rook-discover-6j68f 1/1 Running 0 82m
rook-discover-9w4kt 1/1 Running 0 82m
rook-discover-h2zfm 1/1 Running 0 82m
rook-discover-hsz8b 1/1 Running 0 19m
rook-discover-rj4t7 1/1 Running 0 82m
```

# 4. Create the Ceph cluster

# 4.1 Configuration changes

```
[root@k8s-master01 examples]# vim cluster.yaml
...
    image: registry.cn-hangzhou.aliyuncs.com/kubernetes_public/cephv19.2.2:v19.2.2
...
  skipUpgradeChecks: true # changed to true: skip the upgrade checks
...
  dashboard:
    enabled: true
    # serve the dashboard under a subpath (useful when you are accessing the dashboard via a reverse proxy)
    # urlPrefix: /ceph-dashboard
    # serve the dashboard at the given port.
    # port: 8443
    # serve the dashboard using SSL
    ssl: false # changed to false
...
  storage: # cluster level storage configuration and selection
    useAllNodes: false # changed to false: do not use every node as an OSD host
    useAllDevices: false # changed to false: do not use every disk as an OSD
    ...
    # deviceFilter: "^sd."
    nodes:
      - name: "k8s-master03"
        devices:
          - name: "sdb"
      - name: "k8s-node01"
        devices:
          - name: "sdb"
      - name: "k8s-node02"
        devices:
          - name: "sdb"
...
```

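Note: newer versions require raw disks, i.e. unformatted disks with no filesystem. Here k8s-master03, k8s-node01, and k8s-node02 each have one newly added disk; run `lsblk -f` to find the name of the new disk. At least three nodes are recommended; otherwise the later exercises may run into problems.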
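Instead of naming each device, a node entry can also select disks with a `deviceFilter` regular expression, the same field that appears commented out at cluster level in `cluster.yaml`. A sketch of the `storage` section using per-node filters; the pattern `^sd[b-z]` is an assumption for this lab (it matches every `sd*` disk except `sda`) and must be adapted so that it can never match the system disk.

```yaml
# Fragment of cluster.yaml (spec.storage only), hypothetical per-node device filters
spec:
  storage:
    useAllNodes: false
    useAllDevices: false
    nodes:
      # Consume sdb, sdc, ... on each node, but never sda (assumed to be the system disk)
      - name: "k8s-master03"
        deviceFilter: "^sd[b-z]"
      - name: "k8s-node01"
        deviceFilter: "^sd[b-z]"
      - name: "k8s-node02"
        deviceFilter: "^sd[b-z]"
```

Either way, Rook only turns devices without partitions or a filesystem into OSDs, which is why the disks must be raw.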

# 4.2 Create the Ceph cluster

```
[root@k8s-master01 examples]# kubectl create -f cluster.yaml
[root@k8s-master01 examples]# kubectl get pods -n rook-ceph
NAME READY STATUS RESTARTS AGE
csi-cephfsplugin-5nmnl 3/3 Running 1 (60m ago) 62m
csi-cephfsplugin-6b6ct 3/3 Running 1 (60m ago) 62m
csi-cephfsplugin-8xlnl 3/3 Running 1 (60m ago) 62m
csi-cephfsplugin-fh9w5 3/3 Running 1 (60m ago) 62m
csi-cephfsplugin-mslst 3/3 Running 1 (60m ago) 62m
csi-cephfsplugin-provisioner-59bd447c6d-5zwj2 6/6 Running 0 61s
csi-cephfsplugin-provisioner-59bd447c6d-7t2kg 6/6 Running 2 (20s ago) 61s
csi-rbdplugin-5gvmp 3/3 Running 1 (60m ago) 62m
csi-rbdplugin-dzcs4 3/3 Running 1 (60m ago) 62m
csi-rbdplugin-n82b5 3/3 Running 1 (60m ago) 62m
csi-rbdplugin-provisioner-6856fb8b86-86hw8 6/6 Running 0 19s
csi-rbdplugin-provisioner-6856fb8b86-lj9s4 6/6 Running 0 19s
csi-rbdplugin-vh8j2 3/3 Running 1 (60m ago) 62m
csi-rbdplugin-xfgwr 3/3 Running 1 (60m ago) 62m
rook-ceph-crashcollector-k8s-master01-bbc78d496-bzjk8 1/1 Running 0 8m26s
rook-ceph-crashcollector-k8s-master03-765ff964bb-95wmt 1/1 Running 0 28m
rook-ceph-crashcollector-k8s-node01-7cf4c4b6b6-r4n84 1/1 Running 0 20m
rook-ceph-crashcollector-k8s-node02-f887f8cf9-jz2l8 1/1 Running 0 28m
rook-ceph-detect-version-nsrwj 0/1 Init:0/1 0 3s
rook-ceph-exporter-k8s-master01-5cd4577b79-ckd4m 1/1 Running 0 8m26s
rook-ceph-exporter-k8s-master03-75f4cf6f7-hc9zb 1/1 Running 0 28m
rook-ceph-exporter-k8s-node01-96fc7cf49-d2r24 1/1 Running 0 20m
rook-ceph-exporter-k8s-node02-777b9f555b-7j6cz 1/1 Running 0 27m
rook-ceph-mgr-a-6f46b4b945-q6cjb 3/3 Running 3 (14m ago) 35m
rook-ceph-mgr-b-5d4cc5465b-8dfh6 3/3 Running 0 35m
rook-ceph-mon-a-7c7b7555c7-nlhwg 2/2 Running 2 (6m14s ago) 51m
rook-ceph-mon-c-559bcf95fd-cl62w 2/2 Running 0 8m27s
rook-ceph-mon-d-7dbc6b8f5c-8264t 2/2 Running 0 28m
rook-ceph-operator-645478ff5b-jdcrp 1/1 Running 0 102m
rook-ceph-osd-0-6d9cf78f76-4zhx8 2/2 Running 0 12m
rook-ceph-osd-1-88c78bbcb-cn48c 2/2 Running 0 5m15s
rook-ceph-osd-2-b464c9fc6-458hv 2/2 Running 0 4m29s
rook-ceph-osd-prepare-k8s-master03-pwnrc 0/1 Completed 0 86s
rook-ceph-osd-prepare-k8s-node01-xxp2j 0/1 Completed 0 83s
rook-ceph-osd-prepare-k8s-node02-8nz7x 0/1 Completed 0 78s
rook-discover-jzmkr 1/1 Running 0 91m
rook-discover-k7pxt 1/1 Running 0 91m
rook-discover-vqjh5 1/1 Running 0 91m
rook-discover-wk8jq 1/1 Running 0 91m
rook-discover-x8rsn 1/1 Running 0 91m
[root@k8s-master01 examples]# kubectl get cephcluster -n rook-ceph
NAME DATADIRHOSTPATH MONCOUNT AGE PHASE MESSAGE HEALTH EXTERNAL FSID
rook-ceph /var/lib/rook 3 63m Ready Cluster created successfully HEALTH_WARN ca429602-66f4-4a1e-9d5c-a5773a0f594f
```

# 4.3 Install the snapshot controller

```
[root@k8s-master01 ~]# cd /root/k8s-ha-install/
[root@k8s-master01 k8s-ha-install]# git checkout manual-installation-v1.32.x
[root@k8s-master01 k8s-ha-install]# kubectl create -f snapshotter/ -n kube-system
[root@k8s-master01 k8s-ha-install]# kubectl get po -n kube-system -l app=snapshot-controller
NAME READY STATUS RESTARTS AGE
snapshot-controller-0 1/1 Running 0 67s
```

# 5. Install the Ceph client tools

```
[root@k8s-master01 k8s-ha-install]# cd /root/rook/deploy/examples/
[root@k8s-master01 examples]# kubectl create -f toolbox.yaml -n rook-ceph
[root@k8s-master01 examples]# kubectl get po -n rook-ceph -l app=rook-ceph-tools
NAME READY STATUS RESTARTS AGE
rook-ceph-tools-7b75b967db-sqddk 1/1 Running 0 8s
[root@k8s-master01 examples]# kubectl exec -it rook-ceph-tools-7b75b967db-sqddk -n rook-ceph -- bash
bash-5.1$ ceph status
  cluster:
    id:     87b85368-9487-4967-a4e4-5970d2e0ec94
    health: HEALTH_WARN
            1 mgr modules have recently crashed

  services:
    mon: 3 daemons, quorum b,c (age 12s), out of quorum: a
    mgr: a(active, since 7m), standbys: b
    osd: 3 osds: 3 up (since 8m), 3 in (since 3h)

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0 B
    usage:   82 MiB used, 60 GiB / 60 GiB avail
    pgs:

bash-4.4$ ceph osd status
ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE
0 k8s-master03 20.6M 19.9G 0 0 0 0 exists,up
1 k8s-node01 20.6M 19.9G 0 0 0 0 exists,up
2 k8s-node02 20.6M 19.9G 0 0 0 0 exists,up
bash-4.4$ ceph df
--- RAW STORAGE ---
CLASS SIZE AVAIL USED RAW USED %RAW USED
hdd 60 GiB 60 GiB 62 MiB 62 MiB 0.10
TOTAL 60 GiB 60 GiB 62 MiB 62 MiB 0.10

--- POOLS ---
POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL
.mgr 1 1 449 KiB 2 1.3 MiB 0 19 GiB
```

# 6. Ceph dashboard

# 6.1 Expose the service

```
[root@k8s-master01 ~]# kubectl get svc -n rook-ceph
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
rook-ceph-mgr ClusterIP 10.96.54.15 <none> 9283/TCP 133m
rook-ceph-mgr-dashboard ClusterIP 10.96.97.117 <none> 7000/TCP 133m # can also be exposed through an Ingress
rook-ceph-mon-a ClusterIP 10.96.125.216 <none> 6789/TCP,3300/TCP 170m
rook-ceph-mon-b ClusterIP 10.96.34.183 <none> 6789/TCP,3300/TCP 133m
rook-ceph-mon-c ClusterIP 10.96.232.252 <none> 6789/TCP,3300/TCP 133m
[root@k8s-master01 examples]# kubectl create -f dashboard-external-http.yaml # expose the dashboard through a NodePort
[root@k8s-master01 examples]# kubectl get svc -n rook-ceph rook-ceph-mgr-dashboard-external-http
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
rook-ceph-mgr-dashboard-external-https NodePort 10.96.11.120 <none> 8443:32611/TCP 45s
```

# 6.2 Access the Ceph dashboard through an Ingress

```
[root@k8s-master01 examples]# cat dashboard-ingress-https.yaml
#
# This example is for Kubernetes running an nginx-ingress
# and an ACME (e.g. Let's Encrypt) certificate service
#
# The nginx-ingress annotations support the dashboard
# running using HTTPS with a self-signed certificate
#
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rook-ceph-mgr-dashboard
  namespace: rook-ceph # namespace:cluster
  # annotations:
  #   kubernetes.io/ingress.class: "nginx"
  #   kubernetes.io/tls-acme: "true"
  #   nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
  #   nginx.ingress.kubernetes.io/server-snippet: |
  #     proxy_ssl_verify off;
spec:
  ingressClassName: "nginx"
  # tls:
  #   - hosts:
  #       - rook-ceph.hmallleasing.com
  #     secretName: rook-ceph.example.com
  rules:
    - host: rook-ceph.hmallleasing.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: rook-ceph-mgr-dashboard
                port:
                  name: http-dashboard
```

# 6.3 Log in

URL: http://192.168.40.100:32611

Username: admin

Password: retrieve it with the following command:

```
kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo
```

# 7. Using Ceph block storage

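Block storage is generally used when a single Pod mounts its own volume. It is the equivalent of attaching a new disk to a server for the exclusive use of one application.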

# 7.1 Create the StorageClass and the Ceph storage pool

```
[root@k8s-master01 examples]# kubectl get csidriver
NAME ATTACHREQUIRED PODINFOONMOUNT STORAGECAPACITY TOKENREQUESTS REQUIRESREPUBLISH MODES AGE
rook-ceph.cephfs.csi.ceph.com true false false <unset> false Persistent 15h # CSI driver for file storage (CephFS)
rook-ceph.rbd.csi.ceph.com true false false <unset> false Persistent 15h # CSI driver for block storage (RBD)
[root@k8s-master01 ~]# cd /root/rook/deploy/examples/
[root@k8s-master01 examples]# vim csi/rbd/storageclass.yaml
...
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph # namespace:cluster
spec:
  failureDomain: host
  replicated:
    size: 3 # number of data replicas; a test environment can use 2 (not 1), production needs at least 3, and it must not exceed the number of OSDs
...
allowVolumeExpansion: true # whether volumes can be expanded
reclaimPolicy: Delete # PV reclaim policy
[root@k8s-master01 examples]# kubectl create -f csi/rbd/storageclass.yaml -n rook-ceph
[root@k8s-master01 examples]# kubectl get cephblockpool -n rook-ceph
NAME PHASE
replicapool Ready
[root@k8s-master01 examples]# kubectl get sc
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
nfs-storage nfzl.com/nfs Delete Immediate false 16h
rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 37s
```
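The `size` comment above mentions that a test cluster may use two replicas. A sketch of that variant of the CephBlockPool from `csi/rbd/storageclass.yaml` follows; with `failureDomain: host`, every replica is placed on a different host, so `size` cannot usefully exceed the number of hosts that carry OSDs (three in this lab).

```yaml
# Test-environment variant of the pool (sketch): two replicas instead of three
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool
  namespace: rook-ceph
spec:
  failureDomain: host   # place each replica on a different node
  replicated:
    size: 2             # test environments only; use at least 3 in production
```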

# 7.2 Mount test

```
[root@k8s-master01 ~]# cat ceph-block-pvc.yaml # create the PVC
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-block-pvc
spec:
  storageClassName: "rook-ceph-block" # explicitly choose which StorageClass's provisioner creates the PV
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi # request the size the workload actually needs
[root@k8s-master01 ~]# kubectl apply -f ceph-block-pvc.yaml
[root@k8s-master01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
ceph-block-pvc Bound pvc-86c94d8d-c359-47b8-b5d3-31dcdaf86551 1Gi RWO rook-ceph-block 3s
[root@k8s-master01 ~]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-86c94d8d-c359-47b8-b5d3-31dcdaf86551 1Gi RWO Delete Bound default/ceph-block-pvc rook-ceph-block
[root@k8s-master01 ~]# cat ceph-block-pvc-pod.yaml # mount the PVC in a test Pod
apiVersion: v1
kind: Pod
metadata:
  name: ceph-block-pvc-pod
spec:
  containers:
    - name: ceph-block-pvc-pod
      image: nginx
      volumeMounts:
        - name: nginx-page
          mountPath: /usr/share/nginx/html
  volumes:
    - name: nginx-page
      persistentVolumeClaim:
        claimName: ceph-block-pvc # must match the name of the PVC created above
[root@k8s-master01 ~]# kubectl apply -f ceph-block-pvc-pod.yaml
```

# 7.3 StatefulSet volumeClaimTemplates

```
[root@k8s-master01 ~]# cat ceph-block-pvc-sts.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # must match .spec.template.metadata.labels
  serviceName: "nginx"
  replicas: 3 # defaults to 1
  template:
    metadata:
      labels:
        app: nginx # must match .spec.selector.matchLabels
    spec:
      containers:
        - name: nginx
          image: nginx:1.20
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: "rook-ceph-block"
        resources:
          requests:
            storage: 1Gi
[root@k8s-master01 ~]# kubectl apply -f ceph-block-pvc-sts.yaml
[root@k8s-master01 ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
web-0 1/1 Running 0 4m19s
web-1 1/1 Running 0 4m10s
web-2 1/1 Running 0 2m21s
[root@k8s-master01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
www-web-0 Bound pvc-27cab5bf-f989-4050-aa84-1b2dac9fa745 1Gi RWO rook-ceph-block 4m23s
www-web-1 Bound pvc-76fb08f4-2195-4678-b6b8-286c2f722cc9 1Gi RWO rook-ceph-block 4m14s
www-web-2 Bound pvc-6b858cd9-288f-48bc-bc96-33e6eb519613 1Gi RWO rook-ceph-block 2m25s
[root@k8s-master01 ~]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-27cab5bf-f989-4050-aa84-1b2dac9fa745 1Gi RWO Delete Bound default/www-web-0 rook-ceph-block 4m25s
pvc-6b858cd9-288f-48bc-bc96-33e6eb519613 1Gi RWO Delete Bound default/www-web-2 rook-ceph-block 2m27s
pvc-76fb08f4-2195-4678-b6b8-286c2f722cc9 1Gi RWO Delete Bound default/www-web-1 rook-ceph-block 4m16s
```

# 8. Using the shared filesystem

A shared filesystem is generally used when multiple Pods share the same storage.

# 8.1 Create a shared filesystem

```
[root@k8s-master01 ~]# cd /root/rook/deploy/examples/
[root@k8s-master01 examples]# kubectl apply -f filesystem.yaml
[root@k8s-master01 examples]# kubectl get pod -l app=rook-ceph-mds -n rook-ceph
NAME READY STATUS RESTARTS AGE
rook-ceph-mds-myfs-a-7d76cb5988-9nz9p 2/2 Running 0 36s
rook-ceph-mds-myfs-b-76ff7c784c-vs8nm 2/2 Running 0 33s
```
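`filesystem.yaml` is applied without being shown. Judging from the resulting MDS Pods (`myfs-a` and `myfs-b`), it defines a CephFilesystem named `myfs`, roughly like the sketch below. The sketch assumes the layout of the upstream Rook example; compare it with your copy before relying on the exact values.

```yaml
# Approximate content of filesystem.yaml (sketch based on the upstream example)
apiVersion: ceph.rook.io/v1
kind: CephFilesystem
metadata:
  name: myfs
  namespace: rook-ceph
spec:
  metadataPool:                      # pool that stores CephFS metadata
    replicated:
      size: 3
  dataPools:                         # pool(s) that store file contents
    - name: replicated
      replicated:
        size: 3
  preserveFilesystemOnDelete: true   # keep the filesystem if the CephFilesystem CR is deleted
  metadataServer:
    activeCount: 1                   # Rook runs one standby per active MDS, hence myfs-a and myfs-b
    activeStandby: true              # the standby keeps a warm metadata cache for faster failover
```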

# 8.2 Create the StorageClass for the shared filesystem

```
[root@k8s-master01 examples]# cd csi/cephfs
[root@k8s-master01 cephfs]# kubectl create -f storageclass.yaml
[root@k8s-master01 cephfs]# kubectl get sc
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
nfs-storage nfzl.com/nfs Delete Immediate false 17h
rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 82m
rook-cephfs rook-ceph.cephfs.csi.ceph.com Delete Immediate true 13s
```

# 8.3 Mount test

```
[root@k8s-master01 ~]# cat cephfs-pvc-deploy.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: web
  selector:
    app: nginx
  type: ClusterIP
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-share-pvc
spec:
  storageClassName: rook-cephfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 2Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx # has to match .spec.template.metadata.labels
  replicas: 3 # by default is 1
  template:
    metadata:
      labels:
        app: nginx # has to match .spec.selector.matchLabels
    spec:
      containers:
        - name: nginx
          image: nginx
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
      volumes:
        - name: www
          persistentVolumeClaim:
            claimName: nginx-share-pvc
[root@k8s-master01 ~]# kubectl apply -f cephfs-pvc-deploy.yaml
[root@k8s-master01 ~]# kubectl get pods
NAME READY STATUS RESTARTS AGE
cluster-test-84dfc9c68b-5q4ng 1/1 Running 84 (4m2s ago) 16d
nfs-client-provisioner-5dbbd8d796-lhdgw 1/1 Running 5 (123m ago) 18h
web-6c59f8559-g5xzb 1/1 Running 0 46s
web-6c59f8559-ns77q 1/1 Running 0 46s
web-6c59f8559-qxb5f 1/1 Running 0 46s
[root@k8s-master01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 2Gi RWX rook-cephfs 52s
[root@k8s-master01 ~]# kubectl get pv
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 2Gi RWX Delete Bound default/nginx-share-pvc rook-cephfs 53s
[root@k8s-master01 ~]# kubectl exec -it web-6c59f8559-g5xzb -- bash
root@web-6c59f8559-g5xzb:/# cd /usr/share/nginx/html/
root@web-6c59f8559-g5xzb:/usr/share/nginx/html# echo "hello cephfs" >> index.html
[root@k8s-master01 ~]# kubectl get svc
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 16d
mysql-svc-external ClusterIP None <none> 3306/TCP 9d
nginx ClusterIP 10.96.58.17 <none> 80/TCP 4m34s
[root@k8s-master01 ~]# curl 10.96.58.17
hello cephfs
```

# 9. PVC expansion

```
[root@k8s-master01 ~]# kubectl get sc
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
nfs-storage nfzl.com/nfs Delete Immediate false 18h
rook-ceph-block rook-ceph.rbd.csi.ceph.com Delete Immediate true 104m # true: expansion is allowed
rook-cephfs rook-ceph.cephfs.csi.ceph.com Delete Immediate true 22m # true: expansion is allowed
[root@k8s-master01 ~]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 2Gi RWX rook-cephfs 13m
[root@k8s-master01 ~]# kubectl edit pvc nginx-share-pvc
...
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi # change the PVC size
  storageClassName: rook-cephfs
...
[root@k8s-master01 ~]# kubectl get pvc # check that the PVC has been expanded
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 5Gi RWX rook-cephfs 15m
[root@k8s-master01 ~]# kubectl get pv # check that the PV has been expanded
NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE
pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 5Gi RWX Delete Bound default/nginx-share-pvc rook-cephfs 15m
[root@k8s-master01 ~]# kubectl exec -it web-6c59f8559-g5xzb -- bash # enter the container and check the size seen by the Pod
root@web-6c59f8559-g5xzb:/# df -h
Filesystem Size Used Avail Use% Mounted on
overlay 17G 13G 4.1G 76% /
tmpfs 64M 0 64M 0% /dev
tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup
/dev/sda3 17G 13G 4.1G 76% /etc/hosts
shm 64M 0 64M 0% /dev/shm
10.96.121.140:6789,10.96.131.130:6789,10.96.62.64:6789:/volumes/csi/csi-vol-3b645a11-58f4-475a-9404-5d84964f5291/e4bdf743-eb18-42c8-b04f-41964f76de4f 5.0G 0 5.0G 0% /usr/share/nginx/html
tmpfs 3.8G 12K 3.8G 1% /run/secrets/kubernetes.io/serviceaccount
tmpfs 2.0G 0 2.0G 0% /proc/asound
tmpfs 2.0G 0 2.0G 0% /proc/acpi
tmpfs 2.0G 0 2.0G 0% /proc/scsi
tmpfs 2.0G 0 2.0G 0% /sys/firmware
```
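`kubectl edit` is interactive. A non-interactive alternative is a merge patch that contains only the field to change. Saved as, for example, a hypothetical `pvc-expand.yaml`, it can be applied with `kubectl patch pvc nginx-share-pvc --type merge --patch-file pvc-expand.yaml`. A PVC can only be grown, not shrunk, and only when its StorageClass has `allowVolumeExpansion: true`.

```yaml
# pvc-expand.yaml (hypothetical file name): merge patch that raises the requested size
spec:
  resources:
    requests:
      storage: 5Gi   # must be larger than the current size; shrinking is rejected
```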

# 10. PVC snapshots

# 10.1 Snapshots of shared filesystem volumes

```
[root@k8s-master01 ~]# cd rook/deploy/examples
[root@k8s-master01 examples]# kubectl create -f csi/cephfs/snapshotclass.yaml
[root@k8s-master01 examples]# kubectl get volumesnapshotclass
NAME DRIVER DELETIONPOLICY AGE
csi-cephfsplugin-snapclass rook-ceph.cephfs.csi.ceph.com Delete 25s

# Take a snapshot
[root@k8s-master01 examples]# kubectl exec -it web-6c59f8559-g5xzb -- bash # write new data to the PVC
root@web-6c59f8559-g5xzb:/# cd /usr/share/nginx/html/
root@web-6c59f8559-g5xzb:/usr/share/nginx/html# touch {1..10}
root@web-6c59f8559-g5xzb:/usr/share/nginx/html# ls
1 10 2 3 4 5 6 7 8 9 index.html
[root@k8s-master01 examples]# kubectl get pvc # list the PVCs; nginx-share-pvc is the one to snapshot
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 5Gi RWX rook-cephfs 4h23m
[root@k8s-master01 examples]# cat csi/cephfs/snapshot.yaml # snapshot manifest
---
# 1.17 <= K8s <= v1.19
# apiVersion: snapshot.storage.k8s.io/v1beta1
# K8s >= v1.20
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: cephfs-pvc-snapshot
spec:
  volumeSnapshotClassName: csi-cephfsplugin-snapclass
  source:
    persistentVolumeClaimName: nginx-share-pvc # the PVC to snapshot
[root@k8s-master01 examples]# kubectl apply -f csi/cephfs/snapshot.yaml
[root@k8s-master01 examples]# kubectl get volumesnapshot
NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE
cephfs-pvc-snapshot true nginx-share-pvc 5Gi csi-cephfsplugin-snapclass snapcontent-bdaddb97-debe-4f42-9423-13bf1c5b5402 4m6s 4m8s

# Delete data from the PVC
[root@k8s-master01 examples]# kubectl exec -it web-6c59f8559-g5xzb -- bash
root@web-6c59f8559-g5xzb:/# cd /usr/share/nginx/html/
root@web-6c59f8559-g5xzb:/usr/share/nginx/html# ls
1 10 2 3 4 5 6 7 8 9 index.html
root@web-6c59f8559-g5xzb:/usr/share/nginx/html# rm -rf {1..10}
root@web-6c59f8559-g5xzb:/usr/share/nginx/html# ls
index.html

# Restore the data from the snapshot
[root@k8s-master01 examples]# kubectl get volumesnapshot
NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE
cephfs-pvc-snapshot true nginx-share-pvc 5Gi csi-cephfsplugin-snapclass snapcontent-bdaddb97-debe-4f42-9423-13bf1c5b5402 7m39s 7m41s
[root@k8s-master01 examples]# cat csi/cephfs/pvc-restore.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc-restore
spec:
  storageClassName: rook-cephfs # same StorageClass as the source PVC
  dataSource:
    name: cephfs-pvc-snapshot # VolumeSnapshot used as the data source
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi # same size as the snapshot
[root@k8s-master01 examples]# kubectl apply -f csi/cephfs/pvc-restore.yaml
[root@k8s-master01 examples]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
cephfs-pvc-restore Bound pvc-9e845f2b-df1f-450d-8aa2-f9a46db6adb6 5Gi RWX rook-cephfs 54s
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 5Gi RWX rook-cephfs 4h50m

# Mount the restored PVC and check that the data is back
[root@k8s-master01 examples]# cat csi/cephfs/pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csicephfs-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: cephfs-pvc-restore # mount the restored PVC
        readOnly: false
[root@k8s-master01 examples]# kubectl apply -f csi/cephfs/pod.yaml
[root@k8s-master01 examples]# kubectl get pods
NAME READY STATUS RESTARTS AGE
cluster-test-84dfc9c68b-5q4ng 1/1 Running 88 (57m ago) 16d
csicephfs-demo-pod 1/1 Running 0 24s
nfs-client-provisioner-5dbbd8d796-lhdgw 1/1 Running 5 (6h57m ago) 23h
web-6c59f8559-g5xzb 1/1 Running 0 4h54m
web-6c59f8559-ns77q 1/1 Running 0 4h54m
web-6c59f8559-qxb5f 1/1 Running 0 4h54m
[root@k8s-master01 examples]# kubectl exec -it csicephfs-demo-pod -- bash
root@csicephfs-demo-pod:/# ls /var/lib/www/html/ # the deleted files have been restored
1 10 2 3 4 5 6 7 8 9 index.html
```
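The content of `csi/cephfs/snapshotclass.yaml` is not shown above. The sketch below is consistent with the `kubectl get volumesnapshotclass` output (name, driver, and deletion policy); the `clusterID` and secret names are assumptions based on Rook's defaults for a cluster in the `rook-ceph` namespace.

```yaml
# Sketch of csi/cephfs/snapshotclass.yaml (parameters assumed from Rook defaults)
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: csi-cephfsplugin-snapclass
driver: rook-ceph.cephfs.csi.ceph.com   # CephFS CSI driver listed by kubectl get csidriver
parameters:
  clusterID: rook-ceph                  # assumed: namespace of the CephCluster
  csi.storage.k8s.io/snapshotter-secret-name: rook-csi-cephfs-provisioner   # assumed default secret
  csi.storage.k8s.io/snapshotter-secret-namespace: rook-ceph
deletionPolicy: Delete                  # deleting the VolumeSnapshot also deletes the Ceph snapshot
```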

# 10.2 PVC cloning

```
[root@k8s-master01 examples]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
cephfs-pvc-restore Bound pvc-9e845f2b-df1f-450d-8aa2-f9a46db6adb6 5Gi RWX rook-cephfs 11m
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 5Gi RWX rook-cephfs 5h1m
[root@k8s-master01 examples]# cat csi/cephfs/pvc-clone.yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-pvc-clone
spec:
  storageClassName: rook-cephfs # StorageClass of the source PVC
  dataSource:
    name: nginx-share-pvc # name of the PVC to clone
    kind: PersistentVolumeClaim
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi # same size as the PVC being cloned
[root@k8s-master01 examples]# kubectl apply -f csi/cephfs/pvc-clone.yaml
[root@k8s-master01 examples]# kubectl get pvc
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
cephfs-pvc-clone Bound pvc-0a19b65e-cb5e-4379-a7f7-e0783fcf8ddf 5Gi RWX rook-cephfs 22s
cephfs-pvc-restore Bound pvc-9e845f2b-df1f-450d-8aa2-f9a46db6adb6 5Gi RWX rook-cephfs 15m
nginx-share-pvc Bound pvc-4de733fe-c2fb-437b-baff-aaeba0235d54 5Gi RWX rook-cephfs 5h4m

# Mount the cloned PVC to test it
# (delete csicephfs-demo-pod from 10.1 first, e.g. kubectl delete pod csicephfs-demo-pod,
#  because kubectl apply cannot change the volumes of an existing Pod)
[root@k8s-master01 examples]# cat csi/cephfs/pod.yaml
---
apiVersion: v1
kind: Pod
metadata:
  name: csicephfs-demo-pod
spec:
  containers:
    - name: web-server
      image: nginx
      volumeMounts:
        - name: mypvc
          mountPath: /var/lib/www/html
  volumes:
    - name: mypvc
      persistentVolumeClaim:
        claimName: cephfs-pvc-clone # mount the cloned PVC
        readOnly: false
[root@k8s-master01 examples]# kubectl apply -f csi/cephfs/pod.yaml
[root@k8s-master01 examples]# kubectl get pods
NAME READY STATUS RESTARTS AGE
cluster-test-84dfc9c68b-5q4ng 1/1 Running 89 (9m54s ago) 16d
csicephfs-demo-pod 1/1 Running 0 17s
nfs-client-provisioner-5dbbd8d796-lhdgw 1/1 Running 5 (7h9m ago) 23h
web-6c59f8559-g5xzb 1/1 Running 0 5h6m
web-6c59f8559-ns77q 1/1 Running 0 5h6m
web-6c59f8559-qxb5f 1/1 Running 0 5h6m
[root@k8s-master01 examples]# kubectl exec -it csicephfs-demo-pod -- bash
root@csicephfs-demo-pod:/# cat /var/lib/www/html/index.html
hello cephfs
```

# 11. Cleaning up the test data

Reference: https://rook.io/docs/rook/v1.11/Getting-Started/ceph-teardown/#delete-the-cephcluster-crd

```
[root@k8s-master01 ~]# kubectl delete deploy web
[root@k8s-master01 ~]# kubectl delete pods csicephfs-demo-pod
[root@k8s-master01 ~]# kubectl delete pvc --all
[root@k8s-master01 ~]# kubectl get pvc
No resources found in default namespace.
[root@k8s-master01 ~]# kubectl get pv
No resources found
[root@k8s-master01 ~]# kubectl get volumesnapshot
NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE
cephfs-pvc-snapshot true nginx-share-pvc 5Gi csi-cephfsplugin-snapclass snapcontent-bdaddb97-debe-4f42-9423-13bf1c5b5402 61m 61m
[root@k8s-master01 ~]# kubectl delete volumesnapshot cephfs-pvc-snapshot
volumesnapshot.snapshot.storage.k8s.io "cephfs-pvc-snapshot" deleted

# Run the following from rook/deploy/examples (the file paths are relative)
kubectl delete -n rook-ceph cephblockpool replicapool
kubectl delete -n rook-ceph cephfilesystem myfs
kubectl delete storageclass rook-ceph-block
kubectl delete storageclass rook-cephfs
kubectl delete -f csi/cephfs/kube-registry.yaml
kubectl delete storageclass csi-cephfs
kubectl -n rook-ceph delete cephcluster rook-ceph
kubectl delete -f operator.yaml
kubectl delete -f common.yaml
kubectl delete -f crds.yaml
```
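The teardown guide referenced above also covers wiping the data left on the hosts. As a sketch of that optional step: before running `kubectl -n rook-ceph delete cephcluster rook-ceph`, a merge patch like the one below can be applied to the CephCluster (for example with `kubectl -n rook-ceph patch cephcluster rook-ceph --type merge --patch-file <file>`). It tells Rook to run cleanup jobs on the hosts when the cluster is deleted, for example removing the contents of `dataDirHostPath` (`/var/lib/rook` here). This destroys all data irreversibly; check the exact behavior in the teardown guide for your Rook version.

```yaml
# Merge patch for the CephCluster "rook-ceph": confirm that data should be destroyed on deletion
spec:
  cleanupPolicy:
    confirmation: "yes-really-destroy-data"   # the confirmation value Rook requires to run cleanup
```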