# Consumer Leasing System: Microservice Application Delivery in Practice

# 1. Deploying the Middleware

# 1.1 Deploy MySQL

# 1.1.1 MySQL-ConfigMap

```yaml
[root@k8s-master01 01-nf-flms-mysql]# cat 01-mysql-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-cm
  namespace: prod
data:
  my.cnf: |-
    [mysqld]
    # performance settings
    lock_wait_timeout = 3600
    open_files_limit = 65535
    back_log = 1024
    max_connections = 1024
    max_connect_errors = 1000000
    table_open_cache = 1024
    table_definition_cache = 1024
    thread_stack = 512K
    sort_buffer_size = 4M
    join_buffer_size = 4M
    read_buffer_size = 8M
    read_rnd_buffer_size = 4M
    bulk_insert_buffer_size = 64M
    thread_cache_size = 768
    interactive_timeout = 600
    wait_timeout = 600
    tmp_table_size = 32M
    max_heap_table_size = 32M
    max_allowed_packet = 128M
```
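As a rough sanity check on the buffer sizes above, the shell arithmetic below multiplies the per-session buffers by `max_connections`. It is a sketch under a deliberately pessimistic assumption (every connection holds all four buffers at the same time); MySQL allocates these buffers per session on demand, so real usage is normally far lower. The 4000 MiB limit is taken from the StatefulSet in 1.1.3.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Values in MiB, copied from the my.cnf above.
sort_buffer_mb=4
join_buffer_mb=4
read_buffer_mb=8
read_rnd_buffer_mb=4
max_connections=1024
container_limit_mb=4000   # resources.limits.memory: 4000Mi in 03-mysql-sts.yaml

# Pessimistic upper bound: assumes every connection holds all four session
# buffers at once (MySQL actually allocates them only when a query needs them).
per_conn_mb=$(( sort_buffer_mb + join_buffer_mb + read_buffer_mb + read_rnd_buffer_mb ))
worst_case_mb=$(( per_conn_mb * max_connections ))

echo "per connection: ${per_conn_mb} MiB"
echo "all ${max_connections} connections: ${worst_case_mb} MiB (container limit ${container_limit_mb} MiB)"
```

The bound (about 20 GiB) is well above the container limit; whether that matters depends on how many connections really run sort- or join-heavy queries concurrently.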

# 1.1.2 MySQL-Secret

```yaml
[root@k8s-master01 01-nf-flms-mysql]# cat 02-mysql-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: mysql-secret
  namespace: prod
stringData:
  MYSQL_ROOT_PASSWORD: Superman*2023
type: Opaque
```
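`stringData` is a write-only convenience: the API server stores each value base64-encoded under `.data`. The sketch below shows that round trip for the password above. Base64 is only an encoding, so anyone allowed to read the Secret can recover the value. The `kubectl` line in the comment is an assumed way to read it back from the cluster and is not executed here.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Encode a value the way it appears under .data (printf avoids a trailing newline).
encode_secret_value() {
  printf '%s' "$1" | base64
}

# Decode a .data value back to plain text.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# Reading the stored value back from the cluster (assumed usage, not run here):
#   kubectl get secret mysql-secret -n prod \
#     -o jsonpath='{.data.MYSQL_ROOT_PASSWORD}' | base64 -d
```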

# 1.1.3 MySQL-StatefulSet

```yaml
# cat 03-mysql-sts.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-nf-flms
  namespace: prod
spec:
  serviceName: "mysql-nf-flms-svc"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
      role: nf-flms
  template:
    metadata:
      labels:
        app: mysql
        role: nf-flms
    spec:
      containers:
      - name: db
        image: mysql:8.0
        args:
        # In MySQL 8.0 "utf8" is an alias for utf8mb3; utf8mb4 is the full-Unicode charset.
        - "--character-set-server=utf8"
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret
              key: MYSQL_ROOT_PASSWORD
        - name: MYSQL_DATABASE   # database name; must match the database the dump is imported into (1.1.6)
          value: nf_flms
        ports:
        - name: tcp-3306
          containerPort: 3306
          protocol: TCP
        livenessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 3306
          timeoutSeconds: 2
        readinessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 3306
          timeoutSeconds: 2
        resources:
          limits:
            cpu: 2000m
            memory: 4000Mi
          requests:
            cpu: 200m
            memory: 500Mi
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql/
        - name: config
          mountPath: /etc/mysql/conf.d/my.cnf
          subPath: my.cnf
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: config
        configMap:
          name: mysql-cm
          items:
          - key: my.cnf
            path: my.cnf
          defaultMode: 420
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      storageClassName: "nfs-storage"
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 5Gi
```

# 1.1.4 MySQL Service

```yaml
# cat 04-mysql-nf-flms-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-nf-flms-svc
  namespace: prod
spec:
  clusterIP: None
  selector:
    app: mysql
    role: nf-flms
  ports:
  - name: tcp-mysql-svc
    protocol: TCP
    port: 3306
    targetPort: 3306
---
kind: Service
apiVersion: v1
metadata:
  name: mysql-nf-flms-svc-balance
  namespace: prod
spec:
  selector:
    app: mysql
    role: nf-flms
  ports:
  - name: tcp-mysql-balance
    protocol: TCP
    port: 3306
    targetPort: 3306
    nodePort: 32206
  type: NodePort
```

# 1.1.5 Update and Apply the Manifests

```bash
[root@k8s-master01 01-nf-flms-mysql]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 01-nf-flms-mysql]# kubectl create ns prod
[root@k8s-master01 01-nf-flms-mysql]# kubectl apply -f .
```
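`sed -i "s#dev#prod#g"` rewrites every `dev` substring in the manifests. That works for these files, but it would also alter values such as a `/dev/...` mount path if one were added later. A narrower alternative, sketched below as a hypothetical helper `retarget_namespace`, only rewrites `namespace:` fields and in-cluster DNS names of the form `<svc>.dev.svc` (the form used later in the Nacos, xxl-job and RabbitMQ manifests).

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read a manifest on stdin, write it with namespace $1 -> $2.
# Only two patterns are touched:
#   1. "namespace: <from>" lines (any indentation)
#   2. in-cluster DNS names such as mysql-nacos-svc.<from>.svc.cluster.local
retarget_namespace() {
  local from=$1 to=$2
  sed -E \
    -e "s#^([[:space:]]*namespace:[[:space:]]*)${from}[[:space:]]*\$#\\1${to}#" \
    -e "s#\\.${from}\\.svc#.${to}.svc#g"
}
```

For example, `retarget_namespace dev prod < 01-mysql-cm.yaml > 01-mysql-cm.prod.yaml` writes a converted copy instead of editing the file in place.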

# 1.1.6 Import the Database

```bash
[root@k8s-master01 ~]# dig @10.96.0.10 mysql-nf-flms-0.mysql-nf-flms-svc.prod.svc.cluster.local +short
172.16.85.231
[root@k8s-master01 ~]# mysql -h 172.16.85.231 -uroot -p"Superman*2023" -B nf_flms < 202503310038/sggyl_nf_flms_202503310038.sql
```
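The name passed to `dig` follows the stable DNS pattern a StatefulSet pod gets through its headless Service: `<statefulset>-<ordinal>.<service>.<namespace>.svc.<cluster-domain>`. The sketch below builds that name and wraps the lookup and import in one hypothetical function, `import_sql_into_pod`. It assumes CoreDNS at 10.96.0.10 (as above) and reads the root password from the `MYSQL_ROOT_PASSWORD` environment variable instead of typing it on the command line.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Stable DNS name of a StatefulSet pod behind its headless Service.
sts_pod_fqdn() {
  local sts=$1 ordinal=$2 svc=$3 ns=$4 domain=${5:-cluster.local}
  printf '%s-%s.%s.%s.svc.%s\n' "$sts" "$ordinal" "$svc" "$ns" "$domain"
}

# Hypothetical helper: resolve the pod via CoreDNS, then import a dump.
# Not executed by this sketch; requires dig and the mysql client.
import_sql_into_pod() {
  local fqdn=$1 db=$2 dump=$3 ip
  ip=$(dig @10.96.0.10 "$fqdn" +short | head -n1)
  [ -n "$ip" ] || { echo "cannot resolve $fqdn" >&2; return 1; }
  mysql -h "$ip" -uroot -p"${MYSQL_ROOT_PASSWORD:?set MYSQL_ROOT_PASSWORD first}" "$db" < "$dump"
}
```

Usage, mirroring the commands above: `import_sql_into_pod "$(sts_pod_fqdn mysql-nf-flms 0 mysql-nf-flms-svc prod)" nf_flms 202503310038/sggyl_nf_flms_202503310038.sql`.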

# 1.1.7 Example: Connecting to an External Database

```yaml
[root@k8s-master01 01-nf-flms-mysql]# cat 05-mysql-nf-flms-svc-external.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: mysql-nf-flms-svc-external
  name: mysql-nf-flms-svc-external
  namespace: prod
spec:
  clusterIP: None
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
    targetPort: 3306
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app: mysql-nf-flms-svc-external
  name: mysql-nf-flms-svc-external
  namespace: prod
subsets:
- addresses:
  - ip: 192.168.1.68
  ports:
  - name: mysql
    port: 3306
    protocol: TCP
```

Because this Service has no selector, Kubernetes does not manage its endpoints; the Endpoints object with the same name supplies them, so the headless name resolves directly to the external MySQL host:

```bash
[root@k8s-master01 01-nf-flms-mysql]# dig @10.96.0.10 mysql-nf-flms-svc-external.prod.svc.cluster.local +short
192.168.1.68
```

# 1.2 Deploy Redis-single

# 1.2.1 Redis-ConfigMap

```yaml
# cat 01-redis-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-conf
  namespace: prod
data:
  redis.conf: |
    bind 0.0.0.0
    appendonly yes
    protected-mode no
    dir /data
    port 6379
    requirepass Superman*2023
```

# 1.2.2 Redis-StatefulSet

```yaml
# cat 02-redis-sts.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis
  namespace: prod
spec:
  serviceName: redis-svc
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - name: redis
        image: redis:6.2.7
        command:
        - "redis-server"
        args:
        - "/etc/redis/redis.conf"
        ports:
        - name: redis-6379
          containerPort: 6379
          protocol: TCP
        livenessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 6379
          timeoutSeconds: 2
        readinessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 6379
        volumeMounts:
        - name: config
          mountPath: /etc/redis
        - name: data
          mountPath: /data
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
        resources:
          limits:
            cpu: '2'
            memory: 4000Mi
          requests:
            cpu: 100m
            memory: 500Mi
      volumes:
      - name: config
        configMap:
          name: redis-conf
          items:
          - key: redis.conf
            path: redis.conf
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "nfs-storage"
      resources:
        requests:
          storage: 2Gi
```

# 1.2.3 Redis-Service

```yaml
# cat 03-redis-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: redis-svc
  namespace: prod
  labels:
    app: redis
spec:
  ports:
  - name: redis-6379
    protocol: TCP
    port: 6379
    targetPort: 6379
  selector:
    app: redis
  clusterIP: None
```

# 1.2.4 Update and Apply the Manifests

```bash
[root@k8s-master01 02-redis]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 02-redis]# kubectl apply -f .
```
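To confirm that `requirepass` is in effect, the simplest check is `kubectl exec -n prod redis-0 -- redis-cli -a 'Superman*2023' ping`, which should print `PONG` (redis-cli also warns that passing `-a` on the command line is unsafe). The sketch below shows what such a check sends on the wire: `resp_encode` builds a RESP array of bulk strings, and the hypothetical `redis_auth_ping` pipes `AUTH` and `PING` to port 6379 with `nc`. It assumes ASCII arguments (byte length equals character count) and an `nc` that supports `-w`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Encode one command as a RESP array of bulk strings:
#   *<argc>\r\n then, per argument, $<len>\r\n<arg>\r\n
# ${#arg} counts characters, so this assumes ASCII arguments.
resp_encode() {
  printf '*%d\r\n' "$#"
  local arg
  for arg in "$@"; do
    printf '$%d\r\n%s\r\n' "${#arg}" "$arg"
  done
}

# Hypothetical connectivity check over a raw TCP socket; a healthy,
# password-protected server should answer "+OK" and then "+PONG".
# Not executed by this sketch.
redis_auth_ping() {
  local host=$1 password=$2
  { resp_encode AUTH "$password"; resp_encode PING; } | nc -w 2 "$host" 6379
}
```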

# 1.4 Deploy the Nacos Cluster

# 1.4.1 Deploy Nacos-MySQL

```yaml
# cat 01-mysql-nacos-sts-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-nacos-svc
  namespace: prod
spec:
  clusterIP: None
  selector:
    app: mysql
    role: nacos
  ports:
  - port: 3306
    targetPort: 3306
---
kind: Service
apiVersion: v1
metadata:
  name: mysql-nacos-balance
  namespace: prod
spec:
  selector:
    app: mysql
    role: nacos
  ports:
  - name: tcp-mysql-balance
    protocol: TCP
    port: 3306
    targetPort: 3306
    nodePort: 31106
  type: NodePort
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-nacos
  namespace: prod
spec:
  serviceName: "mysql-nacos-svc"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
      role: nacos
  template:
    metadata:
      labels:
        app: mysql
        role: nacos
    spec:
      containers:
      - name: db
        image: mysql:8.0
        args:
        - "--character-set-server=utf8"
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: Superman*2023
        - name: MYSQL_DATABASE   # Nacos database name
          value: nacos
        ports:
        - containerPort: 3306
        resources:
          limits:
            cpu: '2'
            memory: 4000Mi
          requests:
            cpu: 100m
            memory: 500Mi
        livenessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 3306
          timeoutSeconds: 2
        readinessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql/
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteMany"]
      storageClassName: "nfs-storage"
      resources:
        requests:
          storage: 5Gi
```

# 1.4.2 Import the Database

Nacos download page (the release package contains `conf/mysql-schema.sql`, which is imported below): https://nacos.io/download/release-history/?spm=5238cd80.7a4232a8.0.0.f834e7559caaaK

```bash
[root@k8s-master01 03-nacos]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 03-nacos]# kubectl apply -f 01-mysql-nacos-sts-svc.yaml
[root@k8s-master01 03-nacos]# dig @10.96.0.10 mysql-nacos-svc.prod.svc.cluster.local +short
172.16.32.159
[root@k8s-master01 03-nacos]# mysql -h 172.16.32.159 -uroot -p"Superman*2023" -B nacos < nacos/conf/mysql-schema.sql
[root@k8s-master01 ~]# mysql -h 172.16.32.159 -uroot -p"Superman*2023" -B nacos < sggyl_nf_nacos_202505210038.sql
```

# 1.4.3 Deploy Nacos-ConfigMap

```yaml
# cat 02-nacos-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nacos-cm
  namespace: prod
data:
  mysql.host: "mysql-nacos-svc.prod.svc.cluster.local"
  mysql.db.name: "nacos"           # Nacos database name
  mysql.port: "3306"
  mysql.user: "root"               # Nacos database user
  mysql.password: "Superman*2023"  # Nacos database password
```

# 1.4.4 Deploy Nacos-Service-StatefulSet

1. With authentication enabled

```yaml
# cat 03-nacos-sts-deploy-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: nacos-svc
  namespace: prod
spec:
  clusterIP: None
  selector:
    app: nacos
  ports:
  - name: server
    port: 8848
    targetPort: 8848
  - name: client-rpc
    port: 9848
    targetPort: 9848
  - name: raft-rpc
    port: 9849
    targetPort: 9849
  - name: old-raft-rpc
    port: 7848
    targetPort: 7848
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
  namespace: prod
spec:
  serviceName: "nacos-svc"
  replicas: 3
  selector:
    matchLabels:
      app: nacos
  template:
    metadata:
      labels:
        app: nacos
    spec:
      affinity:   # keep the Pods on different nodes
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values: ["nacos"]
            topologyKey: "kubernetes.io/hostname"
      initContainers:
      - name: peer-finder-plugin-install
        image: nacos/nacos-peer-finder-plugin:1.1
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: data
          mountPath: /home/nacos/plugins/peer-finder
          subPath: peer-finder
      containers:
      - name: nacos
        image: nacos/nacos-server:v2.4.3
        resources:
          limits:
            cpu: '2'
            memory: 4Gi
          requests:
            cpu: "100m"
            memory: "1Gi"
        ports:
        - name: client-port
          containerPort: 8848
        - name: client-rpc
          containerPort: 9848
        - name: raft-rpc
          containerPort: 9849
        - name: old-raft-rpc
          containerPort: 7848
        env:
        - name: NACOS_REPLICAS
          value: "3"
        - name: SERVICE_NAME
          value: "nacos-svc"
        - name: DOMAIN_NAME
          value: "cluster.local"
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: MYSQL_SERVICE_HOST
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.host
        - name: MYSQL_SERVICE_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.db.name
        - name: MYSQL_SERVICE_PORT
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.port
        - name: MYSQL_SERVICE_USER
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.user
        - name: MYSQL_SERVICE_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.password
        - name: SPRING_DATASOURCE_PLATFORM
          value: "mysql"
        - name: NACOS_SERVER_PORT
          value: "8848"
        - name: NACOS_APPLICATION_PORT
          value: "8848"
        - name: PREFER_HOST_MODE
          value: "hostname"
        - name: NACOS_AUTH_ENABLE
          value: "true"
        - name: NACOS_AUTH_IDENTITY_KEY
          value: "nacosAuthKey"
        - name: NACOS_AUTH_IDENTITY_VALUE
          value: "nacosSecurtyValue"
        - name: NACOS_AUTH_TOKEN   # sample key; use your own Base64 key in production
          value: "SecretKey012345678901234567890123456789012345678901234567890123456789"
        - name: NACOS_AUTH_TOKEN_EXPIRE_SECONDS
          value: "18000"
        volumeMounts:
        - name: data
          mountPath: /home/nacos/plugins/peer-finder
          subPath: peer-finder
        - name: data
          mountPath: /home/nacos/data
          subPath: data
        - name: data
          mountPath: /home/nacos/logs
          subPath: logs
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      storageClassName: "nfs-storage"
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 5Gi
```

2. With authentication disabled

```yaml
# cat 03-nacos-sts-deploy-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: nacos-svc
  namespace: prod
spec:
  clusterIP: None
  selector:
    app: nacos
  ports:
  - name: server
    port: 8848
    targetPort: 8848
  - name: client-rpc
    port: 9848
    targetPort: 9848
  - name: raft-rpc
    port: 9849
    targetPort: 9849
  - name: old-raft-rpc
    port: 7848
    targetPort: 7848
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: nacos
  namespace: prod
spec:
  serviceName: "nacos-svc"
  replicas: 3
  selector:
    matchLabels:
      app: nacos
  template:
    metadata:
      labels:
        app: nacos
    spec:
      affinity:   # keep the Pods on different nodes
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values: ["nacos"]
            topologyKey: "kubernetes.io/hostname"
      initContainers:
      - name: peer-finder-plugin-install
        image: nacos/nacos-peer-finder-plugin:1.1
        imagePullPolicy: Always
        volumeMounts:
        - name: data
          mountPath: /home/nacos/plugins/peer-finder
          subPath: peer-finder
      containers:
      - name: nacos
        image: nacos/nacos-server:v2.4.3
        resources:
          limits:
            cpu: '2'
            memory: 4Gi
          requests:
            cpu: "100m"
            memory: "1Gi"
        ports:
        - name: client-port
          containerPort: 8848
        - name: client-rpc
          containerPort: 9848
        - name: raft-rpc
          containerPort: 9849
        - name: old-raft-rpc
          containerPort: 7848
        env:
        - name: NACOS_AUTH_ENABLE
          value: "false"
        - name: MODE
          value: "cluster"
        - name: NACOS_SERVERS
          value: "nacos-0.nacos-svc.prod.svc.cluster.local:8848 nacos-1.nacos-svc.prod.svc.cluster.local:8848 nacos-2.nacos-svc.prod.svc.cluster.local:8848"
        - name: NACOS_VERSION
          value: 2.4.3
        - name: SPRING_DATASOURCE_PLATFORM
          value: "mysql"
        - name: NACOS_REPLICAS
          value: "3"
        - name: SERVICE_NAME
          value: "nacos-svc"
        - name: DOMAIN_NAME
          value: "cluster.local"
        - name: NACOS_SERVER_PORT
          value: "8848"
        - name: NACOS_APPLICATION_PORT
          value: "8848"
        - name: PREFER_HOST_MODE
          value: "hostname"
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.namespace
        - name: MYSQL_SERVICE_HOST
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.host
        - name: MYSQL_SERVICE_DB_NAME
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.db.name
        - name: MYSQL_SERVICE_PORT
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.port
        - name: MYSQL_SERVICE_USER
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.user
        - name: MYSQL_SERVICE_PASSWORD
          valueFrom:
            configMapKeyRef:
              name: nacos-cm
              key: mysql.password
        volumeMounts:
        - name: data
          mountPath: /home/nacos/plugins/peer-finder
          subPath: peer-finder
        - name: data
          mountPath: /home/nacos/data
          subPath: data
        - name: data
          mountPath: /home/nacos/logs
          subPath: logs
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      storageClassName: "nfs-storage"
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 5Gi
```
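For the authentication-enabled variant, the sample `NACOS_AUTH_TOKEN` is better replaced with a per-environment key. Nacos recommends a Base64-encoded key whose raw length is at least 32 bytes; the sketch below generates one from 32 random bytes (`openssl rand -base64 32` would work as well). The function name is hypothetical, and the same approach could be used for `NACOS_AUTH_IDENTITY_VALUE`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: 32 random bytes, Base64-encoded on a single line
# (32 bytes always encode to 44 Base64 characters).
gen_nacos_auth_token() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}
```

The generated value replaces the `value:` of `NACOS_AUTH_TOKEN`; all three replicas must use the same key, since tokens issued by one node are validated by the others.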

# 1.4.5 Deploy Nacos-Ingress

```yaml
# cat 04-nacos-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nacos-ingress
  namespace: prod
spec:
  ingressClassName: "nginx"
  rules:
  - host: nacos.hmallleasing.com
    http:
      paths:
      - backend:
          service:
            name: nacos-svc
            port:
              number: 8848
        path: /
        pathType: ImplementationSpecific
```

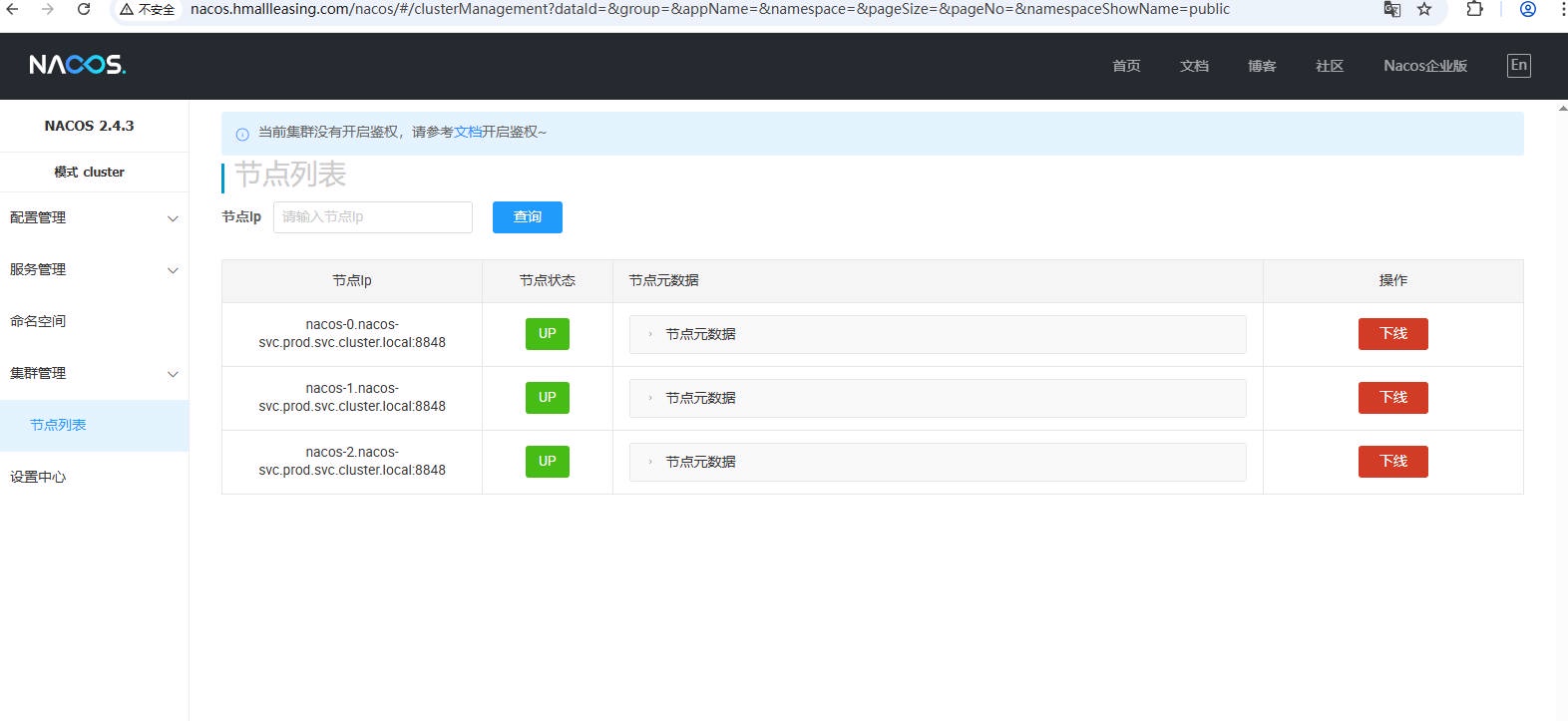

# 1.4.6 Update and Apply the Manifests

```bash
[root@k8s-master01 03-nacos]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 03-nacos]# kubectl apply -f .

# Check that cluster.conf is the same on every Nacos pod
[root@k8s-master01 03-nacos]# for i in {0..2}; do echo nacos-$i; kubectl exec nacos-$i -c nacos -n prod -- cat conf/cluster.conf; done
nacos-0
#2025-05-21T10:43:12.668
nacos-0.nacos-svc.prod.svc.cluster.local:8848
nacos-1.nacos-svc.prod.svc.cluster.local:8848
nacos-2.nacos-svc.prod.svc.cluster.local:8848
nacos-1
#2025-05-21T10:43:14.879
nacos-0.nacos-svc.prod.svc.cluster.local:8848
nacos-1.nacos-svc.prod.svc.cluster.local:8848
nacos-2.nacos-svc.prod.svc.cluster.local:8848
nacos-2
#2025-05-21T10:43:17.299
nacos-0.nacos-svc.prod.svc.cluster.local:8848
nacos-1.nacos-svc.prod.svc.cluster.local:8848
nacos-2.nacos-svc.prod.svc.cluster.local:8848
```
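Each `cluster.conf` starts with a `#<timestamp>` line that differs per pod, so a plain `diff` of the three files always reports a difference. The sketch below compares only the member lines: `normalize_cluster_conf` drops comment and blank lines and sorts the rest, and the hypothetical `check_nacos_cluster_conf` runs the same `kubectl exec` as above for `nacos-0` to `nacos-2`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Keep only the member lines of cluster.conf, in a stable order.
normalize_cluster_conf() {
  sed -e '/^#/d' -e '/^[[:space:]]*$/d' | sort
}

# Hypothetical check across the three replicas; prints OK or the first pod
# whose member list differs from nacos-0. Not executed by this sketch.
check_nacos_cluster_conf() {
  local ns=${1:-prod} ref="" cur i
  for i in 0 1 2; do
    cur=$(kubectl exec "nacos-$i" -c nacos -n "$ns" -- cat conf/cluster.conf | normalize_cluster_conf)
    if [ -z "$ref" ]; then
      ref=$cur
    elif [ "$cur" != "$ref" ]; then
      echo "nacos-$i differs from nacos-0"
      return 1
    fi
  done
  echo OK
}
```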

# 1.4.7 Access the Nacos Web Console

- URL: http://nacos.hmallleasing.com/nacos
- User: nacos
- Password: nacos
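With authentication enabled, the same credentials can be exchanged for an API token through the Nacos login endpoint (`POST /nacos/v1/auth/login`). The sketch below assumes the Ingress host above and pulls `accessToken` out of the single-line JSON response with `sed`; for anything beyond a quick check, `jq` is the more robust choice. The function names are hypothetical.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Extract the accessToken field from a compact, single-line JSON response.
extract_access_token() {
  sed -n 's/.*"accessToken":"\([^"]*\)".*/\1/p'
}

# Hypothetical login call; not executed by this sketch.
nacos_login() {
  local base=${1:-http://nacos.hmallleasing.com/nacos} user=${2:-nacos} pass=${3:-nacos}
  curl -s -X POST "$base/v1/auth/login" \
    --data-urlencode "username=$user" \
    --data-urlencode "password=$pass" | extract_access_token
}
```

The login response should also include a `tokenTtl` field, which is expected to reflect `NACOS_AUTH_TOKEN_EXPIRE_SECONDS` (18000 in the manifest).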

# 1.5 Deploy xxl-job

# 1.5.1 Deploy xxl-job-MySQL

```yaml
# cat 01-mysql-xxljob-sts-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: mysql-xxljob-svc
  namespace: prod
spec:
  clusterIP: None
  selector:
    app: mysql
    role: xxljob
  ports:
  - name: tcp-mysql-svc
    protocol: TCP
    port: 3306
    targetPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-xxljob-external
  namespace: prod
spec:
  ports:
  - name: tcp-mysql-external
    protocol: TCP
    port: 3306
    targetPort: 3306
    nodePort: 31206
  selector:
    app: mysql
    role: xxljob
  type: NodePort
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-xxljob
  namespace: prod
spec:
  serviceName: "mysql-xxljob-svc"
  replicas: 1
  selector:
    matchLabels:
      app: mysql
      role: xxljob
  template:
    metadata:
      labels:
        app: mysql
        role: xxljob
    spec:
      containers:
      - name: db
        image: mysql:8.0
        args:
        - "--character-set-server=utf8"
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: Superman*2023
        ports:
        - containerPort: 3306
        resources:
          limits:
            cpu: 2000m
            memory: 4000Mi
          requests:
            cpu: 200m
            memory: 500Mi
        livenessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 3306
          timeoutSeconds: 2
        readinessProbe:
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 30
          successThreshold: 1
          tcpSocket:
            port: 3306
          timeoutSeconds: 2
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql/
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteMany"]
      storageClassName: "nfs-storage"
      resources:
        requests:
          storage: 5Gi
```

# 1.5.2 Import the Database

xxl-job table schema download: https://gitee.com/xuxueli0323/xxl-job/tree/3.1.0-release/doc/db

```bash
[root@k8s-master01 05-xxl-job]# kubectl apply -f 01-mysql-xxljob-sts-svc.yaml
[root@k8s-master01 05-xxl-job]# dig @10.96.0.10 mysql-xxljob-svc.prod.svc.cluster.local +short
172.16.85.250
[root@k8s-master01 05-xxl-job]# mysql -h 172.16.85.250 -uroot -p"Superman*2023" < tables_xxl_job.sql
```

# 1.5.3 Deploy xxl-job-Service-Deployment

```yaml
# cat 02-xxljob-deploy-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: xxl-job
  namespace: prod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: xxl-job
  template:
    metadata:
      labels:
        app: xxl-job
    spec:
      containers:
      - image: xuxueli/xxl-job-admin:3.1.0
        name: xxl-job
        ports:
        - containerPort: 8080
        env:
        - name: PARAMS
          value: "--spring.datasource.url=jdbc:mysql://mysql-xxljob-svc.prod.svc.cluster.local:3306/xxl_job?useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&serverTimezone=Asia/Shanghai --spring.datasource.username=root --spring.datasource.password=Superman*2023"
        volumeMounts:
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
        resources:
          limits:
            cpu: '1'
            memory: 2000Mi
          requests:
            cpu: 100m
            memory: 500Mi
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
---
apiVersion: v1
kind: Service
metadata:
  name: xxljob-svc
  namespace: prod
spec:
  ports:
  - port: 8080
    protocol: TCP
    name: http
  selector:
    app: xxl-job
```
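`PARAMS` packs the whole datasource configuration into one string of Spring Boot command-line arguments, which is easy to break when editing by hand. The hypothetical helper below rebuilds exactly the value used above from its parts, so the host, database or password can be changed in one place. It assumes none of the values contain spaces, since the arguments are separated by spaces inside `PARAMS`.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: assemble the PARAMS value for xxl-job-admin.
build_xxljob_params() {
  local host=$1 port=$2 db=$3 user=$4 pass=$5
  # JDBC options copied from the manifest above.
  local opts='useUnicode=true&characterEncoding=UTF-8&autoReconnect=true&serverTimezone=Asia/Shanghai'
  # "--" stops printf from treating the leading "--spring..." as an option.
  printf -- '--spring.datasource.url=jdbc:mysql://%s:%s/%s?%s --spring.datasource.username=%s --spring.datasource.password=%s\n' \
    "$host" "$port" "$db" "$opts" "$user" "$pass"
}
```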

# 1.5.4 Deploy xxl-job-service

```yaml
[root@k8s-master01 05-xxl-job]# cat 04-xxljob-external.yaml
apiVersion: v1
kind: Service
metadata:
  name: xxljob-balancer
  namespace: prod
spec:
  type: NodePort
  ports:
  - name: xxljob-balancer
    protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: xxl-job
```

# 1.5.5 Deploy xxl-job-Ingress

```yaml
[root@k8s-master01 05-xxl-job]# cat 03-xxljob-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: xxljob-ingress
  namespace: prod
spec:
  ingressClassName: "nginx"
  rules:
  - host: xxljob.hmallleasing.com
    http:
      paths:
      - backend:
          service:
            name: xxljob-svc
            port:
              number: 8080
        path: /
        pathType: ImplementationSpecific
```

# 1.5.6 Update and Apply the Manifests

```bash
[root@k8s-master01 05-xxl-job]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 05-xxl-job]# kubectl apply -f .
```

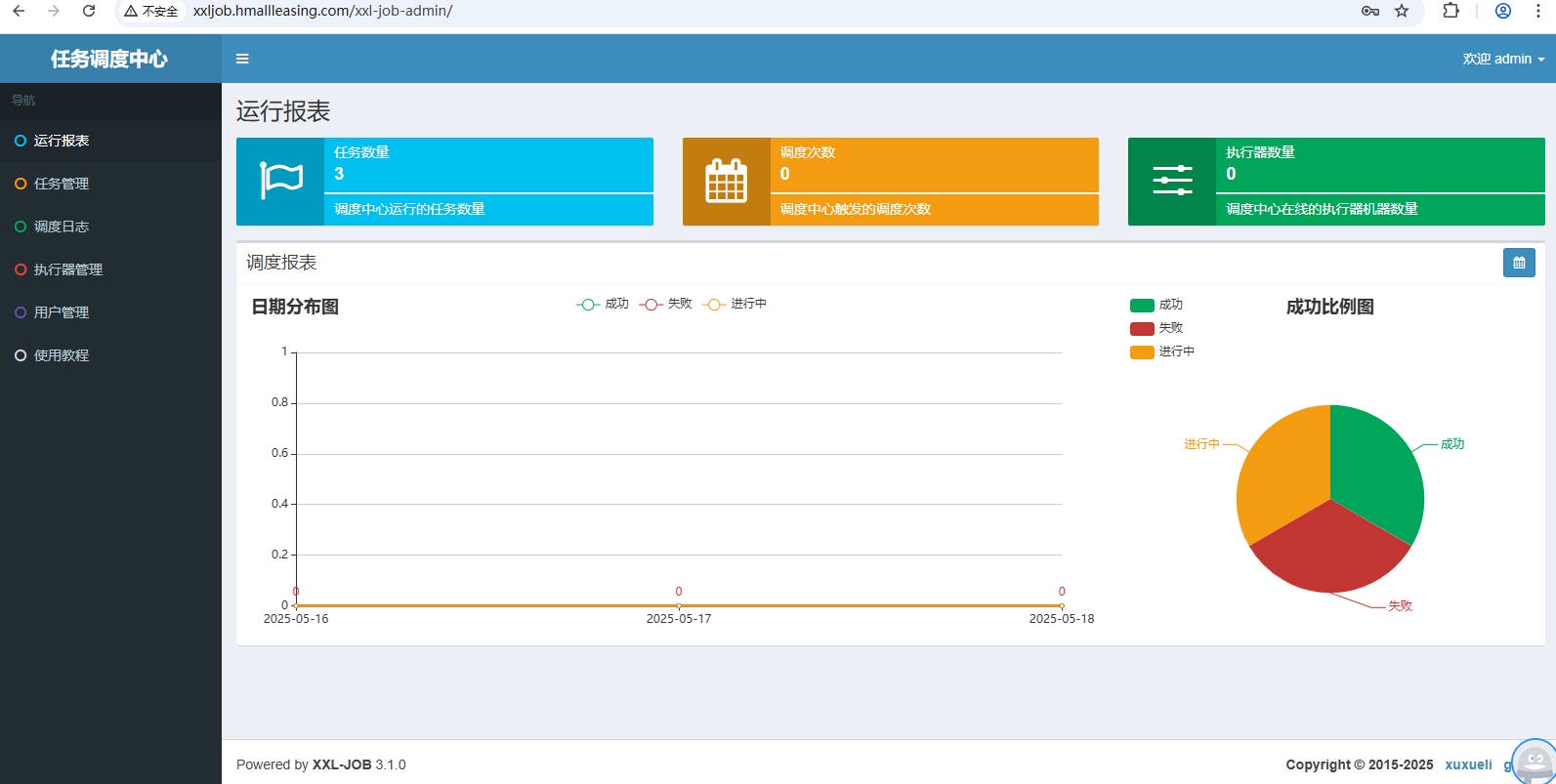

# 1.5.7 Access the xxl-job Web Console

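- http://192.168.40.101:30904/xxl-job-admin/ (NodePort of `xxljob-balancer`; the port is assigned by Kubernetes, so check yours with `kubectl get svc xxljob-balancer -n prod`)
- http://xxljob.hmallleasing.com/xxl-job-admin/ (through the Ingress)
- User: admin
- Password: 123456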

# 1.6 Deploy the RabbitMQ Cluster

# 1.6.1 Create RBAC Permissions

```yaml
# cat 01-rabbitmq-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rabbitmq-cluster
  namespace: prod
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rabbitmq-cluster
  namespace: prod
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rabbitmq-cluster
  namespace: prod
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rabbitmq-cluster
subjects:
- kind: ServiceAccount
  name: rabbitmq-cluster
  namespace: prod
```

# 1.6.2 Create the Cluster Secret

```yaml
# cat 02-rabbitmq-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: rabbitmq-secret
  namespace: prod
stringData:
  password: talent
  url: amqp://RABBITMQ_USER:RABBITMQ_PASS@rabbitmq-cluster-balancer
  username: superman
type: Opaque
```
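The `url` entry is a template: `RABBITMQ_USER` and `RABBITMQ_PASS` are placeholders, and the StatefulSet in 1.6.5 only reads the `username` and `password` keys. Assuming a client fills the placeholders in from those two keys, the substitution looks like the hypothetical helper below. Characters such as `@`, `:` or `/` in a real password would additionally need percent-encoding in an AMQP URI; this sketch does not do that.

```bash
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: replace the placeholders in the url template.
# Quoting the replacement keeps characters such as "&" literal in newer bash.
render_amqp_url() {
  local template=$1 user=$2 pass=$3 url
  url=${template/RABBITMQ_USER/"$user"}
  url=${url/RABBITMQ_PASS/"$pass"}
  printf '%s\n' "$url"
}
```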

# 1.6.3 Create the ConfigMap

```yaml
# cat 03-rabbitmq-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: rabbitmq-cluster-config
  namespace: prod
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  enabled_plugins: |
    [rabbitmq_management,rabbitmq_peer_discovery_k8s].
  rabbitmq.conf: |
    loopback_users.guest = false
    default_user = RABBITMQ_USER
    default_pass = RABBITMQ_PASS
    ## Cluster
    cluster_formation.peer_discovery_backend = rabbit_peer_discovery_k8s
    cluster_formation.k8s.host = kubernetes.default.svc
    #cluster_formation.k8s.host = kubernetes.default.svc.cluster.local
    cluster_formation.k8s.address_type = hostname
    #################################################
    # prod is rabbitmq-cluster's namespace
    #################################################
    cluster_formation.k8s.hostname_suffix = .rabbitmq-cluster.prod.svc.cluster.local
    cluster_formation.node_cleanup.interval = 30
    cluster_formation.node_cleanup.only_log_warning = true
    cluster_partition_handling = autoheal
    ## queue master locator
    queue_master_locator = min-masters
    cluster_formation.randomized_startup_delay_range.min = 0
    cluster_formation.randomized_startup_delay_range.max = 2
    # memory
    vm_memory_high_watermark.absolute = 100MB
    # disk
    disk_free_limit.absolute = 2GB
```

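Note: with `cluster_formation.k8s.host` set to `kubernetes.default.svc.cluster.local`, the nodes repeatedly failed to connect; switching to `kubernetes.default.svc` fixed it. It is unclear whether this is caused by a newer Kubernetes version.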

# 1.6.4 Create the Cluster Services

```yaml
# cat 04-rabbitmq-cluster-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rabbitmq-cluster
  name: rabbitmq-cluster
  namespace: prod
spec:
  clusterIP: None
  ports:
  - name: rmqport
    port: 5672
    targetPort: 5672
  - name: http
    port: 15672
    protocol: TCP
    targetPort: 15672
  selector:
    app: rabbitmq-cluster
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rabbitmq-cluster-balancer
  name: rabbitmq-cluster-balancer
  namespace: prod
spec:
  ports:
  - name: rmqport
    port: 5672
    targetPort: 5672
  - name: http
    port: 15672
    protocol: TCP
    targetPort: 15672
  selector:
    app: rabbitmq-cluster
  type: NodePort
```

# 1.6.5 Create the StatefulSet

# cat 05-rabbitmq-cluster-sts.yaml | |

apiVersion: apps/v1 | |

kind: StatefulSet | |

metadata: | |

labels: | |

app: rabbitmq-cluster | |

name: rabbitmq-cluster | |

namespace: prod | |

spec: | |

replicas: 3 | |

selector: | |

matchLabels: | |

app: rabbitmq-cluster | |

serviceName: rabbitmq-cluster | |

template: | |

metadata: | |

labels: | |

app: rabbitmq-cluster | |

spec: | |

affinity: # 避免 Pod 运行到同一个节点上了 | |

podAntiAffinity: | |

requiredDuringSchedulingIgnoredDuringExecution: | |

- labelSelector: | |

matchExpressions: | |

- key: app | |

operator: In | |

values: ["rabbitmq-cluster"] | |

topologyKey: "kubernetes.io/hostname" | |

containers: | |

- args: | |

- -c | |

- cp -v /etc/rabbitmq/rabbitmq.conf ${RABBITMQ_CONFIG_FILE}; exec docker-entrypoint.sh rabbitmq-server | |

command: | |

- sh | |

env: | |

- name: RABBITMQ_DEFAULT_USER | |

valueFrom: | |

secretKeyRef: | |

key: username | |

name: rabbitmq-secret | |

- name: RABBITMQ_DEFAULT_PASS | |

valueFrom: | |

secretKeyRef: | |

key: password | |

name: rabbitmq-secret | |

- name: TZ | |

value: 'Asia/Shanghai'

- name: RABBITMQ_ERLANG_COOKIE

value: 'SWvCP0Hrqv43NG7GybHC95ntCJKoW8UyNFWnBEWG8TY='

- name: K8S_SERVICE_NAME

value: rabbitmq-cluster

- name: POD_IP

valueFrom:

fieldRef:

fieldPath: status.podIP

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: RABBITMQ_USE_LONGNAME

value: "true"

- name: RABBITMQ_NODENAME

value: rabbit@$(POD_NAME).$(K8S_SERVICE_NAME).$(POD_NAMESPACE).svc.cluster.local

- name: RABBITMQ_CONFIG_FILE

value: /var/lib/rabbitmq/rabbitmq.conf

image: rabbitmq:3.9-management

imagePullPolicy: IfNotPresent

name: rabbitmq

ports:

- containerPort: 15672

name: http

protocol: TCP

- containerPort: 5672

name: amqp

protocol: TCP

livenessProbe:

exec:

command: ["rabbitmq-diagnostics", "status"]

initialDelaySeconds: 60

periodSeconds: 60

failureThreshold: 2

timeoutSeconds: 10

readinessProbe:

exec:

command: ["rabbitmq-diagnostics", "status"]

failureThreshold: 2

initialDelaySeconds: 60

periodSeconds: 60

timeoutSeconds: 10

volumeMounts:

- mountPath: /etc/rabbitmq

name: config-volume

readOnly: false

- mountPath: /var/lib/rabbitmq

name: rabbitmq-storage

readOnly: false

- name: tz-config

mountPath: /usr/share/zoneinfo/Asia/Shanghai

- name: tz-config

mountPath: /etc/localtime

- name: timezone

mountPath: /etc/timezone

serviceAccountName: rabbitmq-cluster

terminationGracePeriodSeconds: 30

volumes:

- name: config-volume

configMap:

items:

- key: rabbitmq.conf

path: rabbitmq.conf

- key: enabled_plugins

path: enabled_plugins

name: rabbitmq-cluster-config

- name: tz-config

hostPath:

path: /usr/share/zoneinfo/Asia/Shanghai

type: ""

- name: timezone

hostPath:

path: /etc/timezone

type: ""

volumeClaimTemplates:

- metadata:

name: rabbitmq-storage

spec:

accessModes:

- ReadWriteMany

storageClassName: "nfs-storage"

resources:

requests:

storage: 5Gi
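`RABBITMQ_NODENAME` above relies on Kubernetes dependent environment variables: the kubelet expands `$(POD_NAME)`, `$(K8S_SERVICE_NAME)` and `$(POD_NAMESPACE)` from variables defined earlier in the same `env` list. The sketch below only reproduces the resulting names so they can be compared with the `Disk Nodes` list printed by `rabbitmqctl cluster_status`; `rabbit_nodename` is a hypothetical helper, and three replicas in the `prod` namespace are assumed from this deployment.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rebuilds the value Kubernetes produces for
# RABBITMQ_NODENAME=rabbit@$(POD_NAME).$(K8S_SERVICE_NAME).$(POD_NAMESPACE).svc.cluster.local
rabbit_nodename() {
  local pod="$1" svc="$2" ns="$3"
  printf 'rabbit@%s.%s.%s.svc.cluster.local\n' "$pod" "$svc" "$ns"
}

# Expected long node names for a 3-replica StatefulSet named rabbitmq-cluster in prod.
for i in 0 1 2; do
  rabbit_nodename "rabbitmq-cluster-$i" rabbitmq-cluster prod
done
```

On a running pod, `kubectl exec rabbitmq-cluster-0 -n prod -- printenv RABBITMQ_NODENAME` should print the same value. These fully qualified names only resolve if `rabbitmq-cluster` is the headless Service governing the StatefulSet, which is also why `RABBITMQ_USE_LONGNAME` is set to `"true"`.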

# 1.6.6 Create the Ingress

```shell
[root@k8s-master01 04-rabbitmq]# cat 06-rabbitmq-ingress.yaml
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rabbitmq-ingress
  namespace: prod
spec:
  ingressClassName: "nginx"
  rules:
  - host: rabbitmq.hmallleasing.com
    http:
      paths:
      - backend:
          service:
            name: rabbitmq-cluster
            port:
              number: 15672
        path: /
        pathType: ImplementationSpecific
```

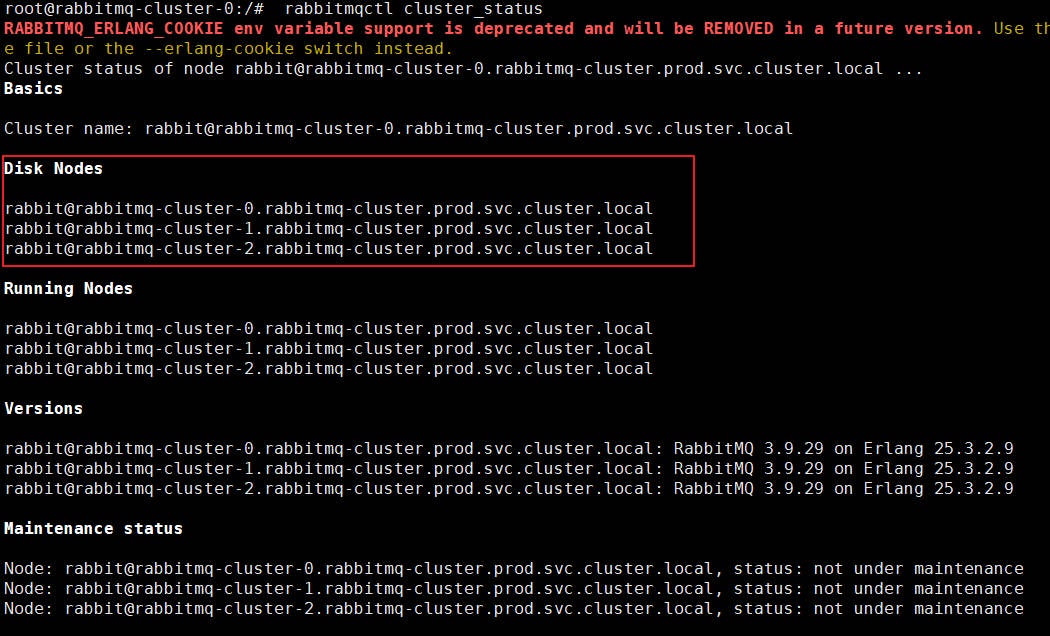

# 1.6.7 Update and apply the manifests

```shell
[root@k8s-master01 04-rabbitmq]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 04-rabbitmq]# kubectl apply -f .
[root@k8s-master01 04-rabbitmq]# kubectl get pods -n prod
NAME                 READY   STATUS    RESTARTS   AGE
rabbitmq-cluster-0   1/1     Running   0          9m53s
rabbitmq-cluster-1   1/1     Running   0          8m47s
rabbitmq-cluster-2   1/1     Running   0          7m40s
[root@k8s-master01 04-rabbitmq]# kubectl exec -it rabbitmq-cluster-0 -n prod -- /bin/bash
root@rabbitmq-cluster-0:/# rabbitmqctl cluster_status
RABBITMQ_ERLANG_COOKIE env variable support is deprecated and will be REMOVED in a future version. Use the $HOME/.erlang.cookie file or the --erlang-cookie switch instead.
Cluster status of node rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local ...
Basics

Cluster name: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local

Disk Nodes

rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local

Running Nodes

rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local

Versions

rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local: RabbitMQ 3.9.29 on Erlang 25.3.2.9
rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local: RabbitMQ 3.9.29 on Erlang 25.3.2.9
rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local: RabbitMQ 3.9.29 on Erlang 25.3.2.9

Maintenance status

Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, status: not under maintenance
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, status: not under maintenance
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, status: not under maintenance

Alarms

Memory alarm on node rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local
Memory alarm on node rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local
Memory alarm on node rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local

Network Partitions

(none)

Listeners

Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 15672, protocol: http, purpose: HTTP API
Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication
Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 15672, protocol: http, purpose: HTTP API
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 15672, protocol: http, purpose: HTTP API
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Feature flags

Flag: drop_unroutable_metric, state: enabled
Flag: empty_basic_get_metric, state: enabled
Flag: implicit_default_bindings, state: enabled
Flag: maintenance_mode_status, state: enabled
Flag: quorum_queue, state: enabled
Flag: stream_queue, state: enabled
Flag: user_limits, state: enabled
Flag: virtual_host_metadata, state: enabled
```

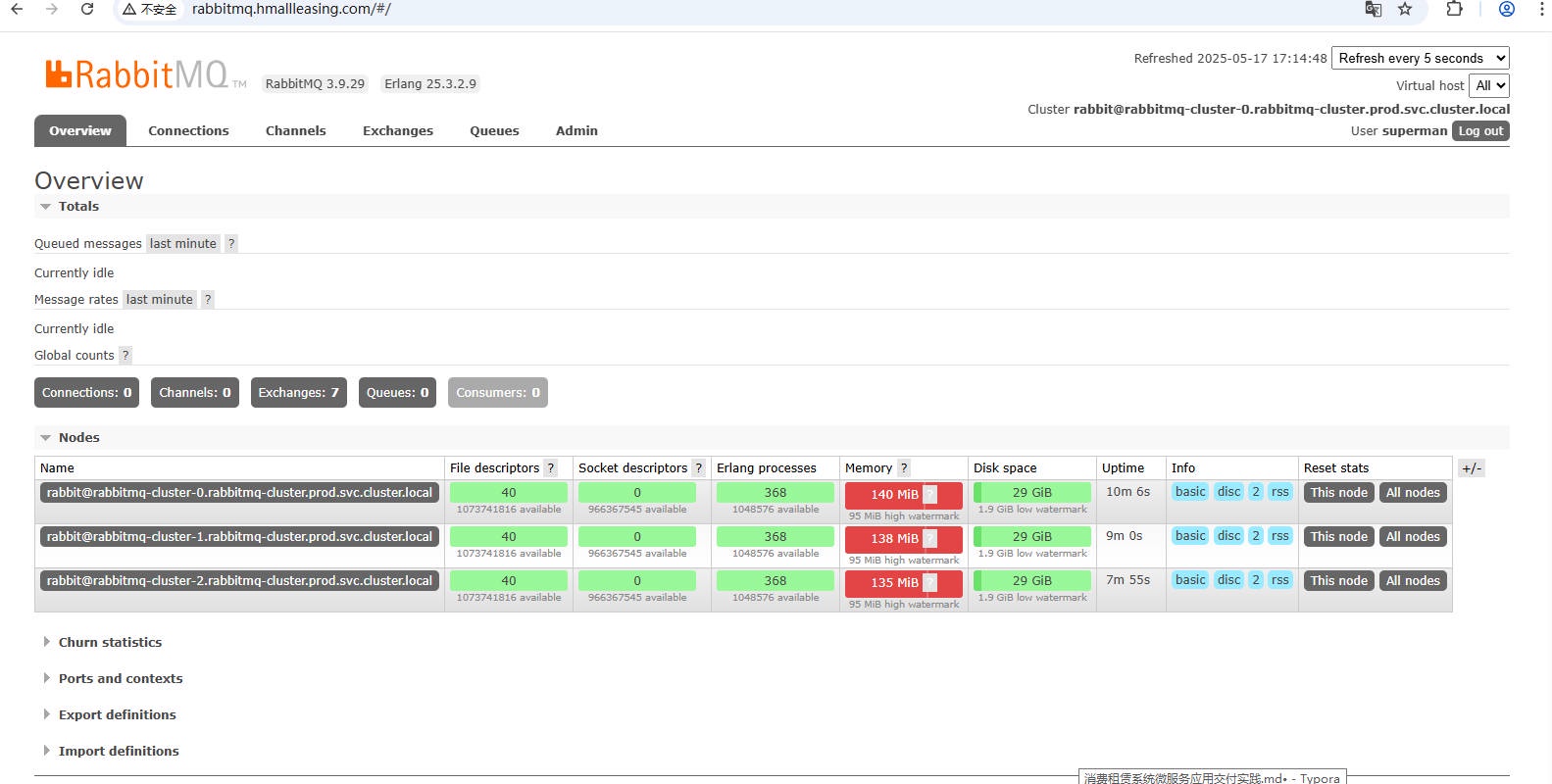
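The `Alarms` section of the `cluster_status` output above reports a memory alarm on all three nodes. While a memory alarm is in effect, RabbitMQ blocks connections that publish messages, so producers can stall even though every pod shows `Running`. A minimal sketch for pulling the alarmed nodes out of that text; `alarmed_nodes` is a hypothetical helper and only matches the `Memory alarm on node` wording shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `rabbitmqctl cluster_status` output on stdin and
# print the node name from every "Memory alarm on node <name>" line.
alarmed_nodes() {
  sed -n 's/^Memory alarm on node //p'
}
```

Usage: `kubectl exec rabbitmq-cluster-0 -n prod -- rabbitmqctl cluster_status | alarmed_nodes`. If any node is printed, review the pods' memory limits and the `vm_memory_high_watermark` settings in `rabbitmq.conf`; recent RabbitMQ versions also list active alarms with `rabbitmq-diagnostics alarms`.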

# 1.6.8 Access RabbitMQ from the browser

URL: http://rabbitmq.hmallleasing.com/#/

User: superman

Password: talent

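# 1.6.9 Recovering when all RabbitMQ nodes are down and the cluster will not restart

In a Kubernetes environment, the RabbitMQ cluster can fail to start again after all of its instances have gone down: on restart each node waits for the peers it last saw running, so the pods block one another. The fix is to create a `force_load` file in each pod's node directory on its persistent volume, which lets that node boot without waiting. Look up the storage path of each pod's PV, create the file in the node directory, then restart RabbitMQ; this resolved the cluster startup problem. The example below was taken in the dev environment: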

```shell
[root@k8s-node02 ~]# cd /data/dev-rabbitmq-storage-rabbitmq-cluster-0-pvc-3abca920-3c68-44eb-b0fd-406a4358b153/mnesia/rabbit@rabbitmq-cluster-0.rabbitmq-cluster.dev.svc.cluster.local
[root@k8s-node02 rabbit@rabbitmq-cluster-0.rabbitmq-cluster.dev.svc.cluster.local]# touch force_load
```
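The same step has to be repeated for every replica. The sketch below automates it under stated assumptions: the PVs come from nfs-subdir-external-provisioner with its default directory layout `<namespace>-<pvcName>-<pvName>` (which matches the path above), the NFS export is `/data` on the host where the script runs, and `kubectl` is available there. `mnesia_dir` and `force_load_all` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch, assuming nfs-subdir-external-provisioner's default directory layout
# <export>/<namespace>-<pvcName>-<pvName> and an NFS export at /data.

# Pure path logic: Mnesia node directory of one RabbitMQ pod on the NFS server.
mnesia_dir() {
  local export_root="$1" ns="$2" pvc="$3" pv="$4" pod="$5" svc="$6"
  printf '%s/%s-%s-%s/mnesia/rabbit@%s.%s.%s.svc.cluster.local\n' \
    "$export_root" "$ns" "$pvc" "$pv" "$pod" "$svc" "$ns"
}

# Create force_load for every replica. Run on the NFS server with kubectl access.
force_load_all() {
  local ns="${1:-prod}" replicas="${2:-3}" i pvc pv dir
  for ((i = 0; i < replicas; i++)); do
    pvc="rabbitmq-storage-rabbitmq-cluster-$i"   # <volumeClaimTemplate>-<statefulset>-<ordinal>
    pv=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}')
    if [ -z "$pv" ]; then
      echo "no PV bound to $pvc" >&2
      return 1
    fi
    dir=$(mnesia_dir /data "$ns" "$pvc" "$pv" "rabbitmq-cluster-$i" rabbitmq-cluster)
    if [ ! -d "$dir" ]; then
      echo "missing directory: $dir" >&2
      return 1
    fi
    touch "$dir/force_load" && echo "created $dir/force_load"
  done
}
```

For example, run `force_load_all prod 3`, then recreate the pods with `kubectl delete pod rabbitmq-cluster-0 rabbitmq-cluster-1 rabbitmq-cluster-2 -n prod` so the StatefulSet starts them again.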

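# 1.7 Deploy rabbitmq-single

A single-node RabbitMQ deployment. Its Ingress claims the same host, `rabbitmq.hmallleasing.com`, as the cluster Ingress in 1.6.6, so do not run both Ingresses with that host at the same time.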

```shell
[root@k8s-master01 04-rabbitmq]# cat 06-rabbitmq-single.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rabbitmq-single-data
  namespace: prod
spec:
  storageClassName: "nfs-storage"   # explicitly choose the StorageClass whose provisioner creates the PV
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi                  # size the request to the actual business need
---
apiVersion: v1
kind: Service
metadata:
  name: rabbitmq-single-svc
  namespace: prod
  labels:
    name: rabbitmq-single-svc
spec:
  ports:
  - port: 5672
    protocol: TCP
    name: web
    targetPort: 5672
  - name: http
    port: 15672
    protocol: TCP
    targetPort: 15672
  selector:
    app: rabbitmq-single
---
apiVersion: networking.k8s.io/v1    # Kubernetes >= 1.22 requires networking.k8s.io/v1
kind: Ingress
metadata:
  name: rabbitmq-single-ingress
  namespace: prod
spec:
  ingressClassName: nginx
  rules:
  - host: rabbitmq.hmallleasing.com
    http:
      paths:
      - backend:
          service:
            name: rabbitmq-single-svc
            port:
              number: 15672
        path: /
        pathType: Prefix
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rabbitmq-single
  namespace: prod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rabbitmq-single
  template:
    metadata:
      labels:
        app: rabbitmq-single
    spec:
      containers:
      - name: rabbitmq-single
        image: rabbitmq:3.9-management
        ports:
        - containerPort: 5672
          name: web
          protocol: TCP
        - containerPort: 15672
          name: http
          protocol: TCP
        env:
        - name: RABBITMQ_DEFAULT_USER   # default user, created when the node initialises an empty data directory
          value: admin
        - name: RABBITMQ_DEFAULT_PASS
          value: Superman*2025
        resources:
          requests:
            memory: "1Gi"
            cpu: "500m"
        livenessProbe:
          exec:
            command: ["rabbitmqctl", "status"]
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 10            # exec probes default to a 1s timeout; rabbitmqctl usually needs longer
        readinessProbe:
          exec:
            command: ["rabbitmqctl", "status"]
          failureThreshold: 2
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 10
        volumeMounts:
        - name: timezone
          mountPath: /etc/timezone
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: rabbitmq-storage
          mountPath: /var/lib/rabbitmq
      volumes:
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: File
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: File
      - name: rabbitmq-storage
        persistentVolumeClaim:
          claimName: rabbitmq-single-data
```

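# II. Microservice configuration

# 2.1 Pre-build configuration

Every microservice needs this bootstrap configuration; nf-flms-order is shown as an example: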

```shell
[root@jenkins nf-flms]# cat nf-flms-order/src/main/resources/bootstrap-prd.yml
```

```yaml
info:
  groupId: @project.groupId@
  artifactId: @project.artifactId@
  version: @project.version@
  name: 南方手机租赁平台
  copyright: 2021
  description: 诚信 务实 专注 专业 创新
server:
  port: 8080
spring:
  application:
    name: @artifactId@
  profiles:
    active: prd
  cloud:
    nacos:
      discovery:
        server-addr: nacos-svc.prod.svc.cluster.local:8848
        namespace: 1d994267-0b13-45aa-bdbd-b810a37725ef
        group: nf-flms
      config:
        server-addr: ${spring.cloud.nacos.discovery.server-addr}
        namespace: ${spring.cloud.nacos.discovery.namespace}
        group: ${spring.cloud.nacos.discovery.group}
        file-extension: yml
        shared-configs:
          - data-id: nf-flms-application-${spring.profiles.active}.${spring.cloud.nacos.config.file-extension}
            group: nf-flms
            refresh: true
```
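With these bootstrap settings, Spring Cloud Alibaba Nacos Config looks up a profile-specific data ID of the form `${prefix}-${spring.profiles.active}.${file-extension}`, where the prefix defaults to `spring.application.name` (here the Maven artifactId), and additionally loads the entry listed under `shared-configs`. The sketch below derives both names per module, which is how the file names used in 2.4 line up with each service; `nacos_data_ids` is a hypothetical helper, and it assumes every module uses the same bootstrap file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the two data IDs (group nf-flms) a service reads from
# Nacos with the bootstrap settings above.
nacos_data_ids() {
  local app="$1" profile="${2:-prd}" ext="${3:-yml}"
  printf '%s-%s.%s\n' "$app" "$profile" "$ext"            # service-specific config
  printf 'nf-flms-application-%s.%s\n' "$profile" "$ext"  # shared-configs entry
}

for app in nf-flms-gateway nf-flms-order nf-flms-statistics nf-flms-system \
           nf-flms-openapi nf-flms-file nf-flms-admin; do
  nacos_data_ids "$app"
done
```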

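# 2.2 Import local Maven dependencies

Import the project's local Maven dependencies into `~/.m2/repository` on the Jenkins host: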

```shell
[root@jenkins repository]# ll ~/.m2/repository
total 8
drwxr-xr-x 3 root root 25 Jun 20 2023 aopalliance
drwxr-xr-x 4 root root 35 Jun 20 2023 asm
drwxr-xr-x 3 root root 30 Jun 20 2023 avalon-framework
drwxr-xr-x 3 root root 38 Mar 29 2023 backport-util-concurrent
drwxr-xr-x 3 root root 17 Mar 29 2023 ch
drwxr-xr-x 3 root root 25 Mar 29 2023 classworlds
drwxr-xr-x 6 root root 62 Mar 29 2023 cn
drwxr-xr-x 36 root root 4096 Jun 20 2023 com
drwxr-xr-x 4 root root 61 Jun 20 2023 commons-beanutils
drwxr-xr-x 3 root root 25 Mar 29 2023 commons-cli
drwxr-xr-x 3 root root 27 Mar 29 2023 commons-codec
drwxr-xr-x 3 root root 33 Mar 29 2023 commons-collections
drwxr-xr-x 3 root root 35 Mar 29 2023 commons-configuration
drwxr-xr-x 3 root root 26 Mar 29 2023 commons-dbcp
drwxr-xr-x 3 root root 30 Jun 20 2023 commons-digester
drwxr-xr-x 3 root root 24 Jun 20 2023 commons-el
drwxr-xr-x 3 root root 32 Mar 29 2023 commons-fileupload
drwxr-xr-x 3 root root 32 Jun 20 2023 commons-httpclient
drwxr-xr-x 3 root root 24 Mar 29 2023 commons-io
drwxr-xr-x 3 root root 26 Mar 29 2023 commons-lang
drwxr-xr-x 3 root root 29 Mar 29 2023 commons-logging
drwxr-xr-x 3 root root 25 Jun 20 2023 commons-net
drwxr-xr-x 3 root root 26 Mar 29 2023 commons-pool
drwxr-xr-x 3 root root 24 Mar 29 2023 concurrent
drwxr-xr-x 3 root root 25 Mar 29 2023 de
drwxr-xr-x 3 root root 19 Mar 29 2023 dom4j
drwxr-xr-x 3 root root 28 Mar 29 2023 doxia
drwxr-xr-x 17 root root 251 Mar 29 2023 io
drwxr-xr-x 8 root root 96 Jun 20 2023 jakarta
drwxr-xr-x 14 root root 175 Jun 20 2023 javax
drwxr-xr-x 3 root root 23 Mar 29 2023 joda-time
drwxr-xr-x 3 root root 19 Mar 29 2023 junit
drwxr-xr-x 3 root root 19 Mar 29 2023 log4j
drwxr-xr-x 3 root root 20 Jun 20 2023 logkit
drwxr-xr-x 3 root root 20 Jun 20 2023 math
drwxr-xr-x 3 root root 18 Jun 20 2023 me
drwxr-xr-x 3 root root 34 Mar 29 2023 mysql
drwxr-xr-x 13 root root 167 Mar 29 2023 net
drwxr-xr-x 3 root root 18 Mar 29 2023 ognl
drwxr-xr-x 56 root root 4096 Jun 20 2023 org
drwxr-xr-x 3 root root 21 Mar 29 2023 redis
drwxr-xr-x 3 root root 22 Mar 29 2023 stax
drwxr-xr-x 5 root root 72 Jun 20 2023 tomcat
drwxr-xr-x 3 root root 19 Jun 20 2023 xalan
drwxr-xr-x 3 root root 24 Mar 29 2023 xerces
drwxr-xr-x 4 root root 42 Jun 20 2023 xml-apis
drwxr-xr-x 3 root root 20 Jun 20 2023 xmlenc
```

# 2.3 Package the code with Maven

```shell
[root@jenkins nf-flms]# pwd
/root/qzj-system-back/nf-flms
[root@jenkins nf-flms]# mvn -B -U clean package -Dmaven.test.skip=true -Dautoconfig.skip
```

# 2.4 Nacos configuration

# 2.4.1 nf-flms-application-prd.yml

```yaml
timedigit:
  mq:
    qos: 10
    maxRetries: 1
  security:
    jwt:
      issuer: nf-fmls
      # Token expiration time, in milliseconds
      expiration: {SYSTEM: 1800000, ALIPAY_MEMBER: 31536000000, SALES_MEMBER: 31536000000}
    maxRetries: 5
    patterns:
      - /**
  feign:
    allowHeads:
      - SOURCE_TYPE
spring:
  rabbitmq:
    host: rabbitmq-cluster.prod.svc.cluster.local
    port: 5672
    username: superman
    password: talent
    publisher-confirms: true
    publisher-returns: true
    virtual-host: /
    listener:
      simple:
        acknowledge-mode: manual
        concurrency: 1
        max-concurrency: 5
        retry:
          enabled: true
  klock:
    # Redis address
    address: redis-0.redis-svc.prod.svc.cluster.local:6379
    # Redis password
    password: Superman*2023
    # Redis database index
    database: 8
    # Maximum time to block while acquiring a lock (default: 60, in seconds)
    waitTime: 5
    # Time after which an acquired lock is released automatically (default: 60, in seconds)
    leaseTime: 60
jetcache:
  statIntervalMinutes: 15
  areaInCacheName: false
  hiddenPackages: com.timedigit
  local:
    default:
      type: linkedhashmap
      keyConvertor: fastjson
  remote:
    default:
      type: redis
      keyConvertor: fastjson
      valueEncoder: java
      valueDecoder: java
      poolConfig:
        minIdle: 5
        maxIdle: 20
        maxTotal: 50
      host: redis-0.redis-svc.prod.svc.cluster.local
      port: 6379
      password: Superman*2023
      database: 8
# Feign configuration
feign:
  client:
    config:
      default:
        connectTimeout: 30000
        readTimeout: 30000
# Ribbon timeouts, in milliseconds
ribbon:
  # Global read (request-processing) timeout
  ReadTimeout: 30000
  # Global connection timeout
  ConnectTimeout: 30000
xgyq:
  url: https://qiaozuji-flms-api.test.qiaozuji.com
  accessKey: 7d82b81b9b6075f7
  secretKey: 17b85704043f57759983af5a744d82c0
  path: /data/
ant:
  # Production parameters
  blockchainv3:
    endpoint: https://openapi.alipay.com/gateway.do
    appId: '2021002169667748'
    privateKey: MIIEvgIBADANBgkqhkiG9w0BAQEFAASCBKgwggSkAgEAAoIBAQCxCce/eHKuTv+joeDwQv1a2Po0HsoJWdnBK8DMlflC3P8bUbPLom690tIWSo1JQyAFEFDGnE3Wqc/GFEySNZvHJ693YRiyLoLtBTJw9i/yH8aINX8fQ+SbM9SxyiMl9wqc+16hxp93Rar7zZY9UbMI3bRhRquPA7rGm/66I5BldDaL1Hnh1ZQ2WwNMOpiw5vAN8ulqm3HAkUC4pReG+SCtgzu6jj2pfbViakXDCO0G7JdNKUI2G4Bhstx3V+mLYKD7+jRwaVWyZDmtiFfjPDXx2u9UYFYDrfLZb7FBs9EjzLO5z6qPerjxu5T8P5qKmsXpmyu6hfj1R3pKwILTIGGzAgMBAAECggEBAJt9U4q/Zzng+HXnP4DF1W9tEpOkVx5PZAldPEBzmDE5mHWOFLPNPiZKe2pIoD6wTfcklU1bCqJ3Ep2ORpJDs0X/fQUEqoQUhblWzy6XixTFA8Gt+rCjGK2XoD9moeg+SXwG6t57bKN89OejcUj58Jzg3ARz5Un+pJS7fcZOZgwzxoyDnhnfe7mEcN8bkuy/2PhRkZWcTkY6ND/Ey5VHk8dqjIQ7uLf3TEilL+mNdcNoguju3I0yhAEtJlhsefeKKcFADWxWZRY129RJPn22TlHNk6h5GmCktnNthaMH1yDWq7mhi/2dUYqE6KlKD37zveQnVUtR6OtMV0wcFeKqY8ECgYEA5aIDJzRicxKdbuX6+aIoGHkH3iU6gfHQRUdI4FGf3+ZqfZ3AKbsBQ5EtzIns9HXVLsPdqq7ZTeFcAeIR7hdeiTWec+fxTu0VROu4z8SMQFQQW2QlvBMfNcPhACOlCFw2aZGbsaqwFmXqshxOBeqzR57MYvTAERvtgN6NxZm0Ar8CgYEAxV3DnOi8uFnttYtf4jI06Fba2LcRJgVL10jUZBDdZLfgMCcWLVHKYc50VQIK7lnzH7uP8+mJEVwT7fMWAmi1+x4XX2o+hbx4rsLbAwTMlWgnj3bFtR1/3r4VW8IF2BN9U95+KuxdQ9UMCsStVYlL4RldEDS3Q2jSwfyRX7B5Qg0CgYAVogOmB9tWd+R49BWGuu4IEC7bkKpIX519SU/mQgpLr4tMtjXKOKHP2bd003GNPiSNOUqCr+Is4hQm4UNLKMxxJKn+xVUIWHFugr5wZFXKIaFA2thrNWn1SLTDrJf5h6Zgn6UJQclA8uz/RodbK1ckYiNjFyeY9QaU42J7wRUiRQKBgQCKxigh7x+rPEhBS3Oq94RuDYwpr2cWZcjy4hm9FoKlLAktsn4MdaMo7GKt1xbai1LA8EAC0CV5mFXHDRJftUKoBHuIsoqtvFza/NXEJJ65Oxf97xSLCef8NYmNEDrNuL55t0rdYX8ej/G8rJf4OeapqwzdtUNa2Zy/m5iYQNyyDQKBgDYtXji8LJFtuF5hOVLC5X9xZKUCiFnblD9Rkv2P5OwyfFkq8tQq1a122ha/8Vwyuv4M/C4yfeEVPbE45OMBxlcggLbtSyubEdno1etmNBftOSHeG7xXjoNqJqP7cRiFE3EgjHsbDOCiED7YCk0RsFnvXmCqL8jgUJUsuXQFZwMK
    alipayPublicKey: MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAgi1cntxez3ul/BPoOBpp2/4VczWnBZ2Hv1+r7hbsOjYqflOsBQYid/p8bN7WlyZ6QgwQO32288mORWH6scXBDAMc5g+nn4rBhSOqDHh0ZxVnf+RTqSMpp+207DcCO8MbEP5EucpYOsTIvOqufSY7QkTMcaNfaYxxTPLi20Y+VKY/EsB+m8UpY93f9cxKl8vwmpJUfOtU3ENxKfrAZhm1+h4QcFy5W7ERae1Htk40bMLCvWFCrNkhTXQ0LY9bJAPLGlr8zqv0Vb7PxauNdStgIuM7SPdFQ+ZJisj7kbvfeUbPFWMRRBJaBJJWmZYWsKP7RF1f7wSocZxodEUHyJp8IwIDAQAB
    sceneCode: NFZL
    subSceneCode: '0000000000025599'
    siteUserId: '2088731937333632'
    notifyUrl:
    timeout: 25h
```
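The JWT `expiration` values are given in milliseconds. A quick shell-arithmetic check of what they amount to (the helper names are illustrative):

```shell
#!/usr/bin/env bash
# Convert the millisecond durations from the jwt expiration map into readable units.
ms_to_minutes() { echo $(( $1 / 1000 / 60 )); }
ms_to_days()    { echo $(( $1 / 1000 / 86400 )); }

echo "SYSTEM: $(ms_to_minutes 1800000) minutes"
echo "ALIPAY_MEMBER / SALES_MEMBER: $(ms_to_days 31536000000) days"
```

So SYSTEM tokens expire after 30 minutes, while ALIPAY_MEMBER and SALES_MEMBER tokens stay valid for 365 days.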

# 2.4.2 nf-flms-gateway-prd.yml

```yaml
spring:
  cloud:
    gateway:
      discovery:
        locator:
          lowerCaseServiceId: true
          enabled: true
      routes:
        - id: nf-flms-system
          uri: lb://nf-flms-system
          order: 1
          predicates:
            - Path=/system/**
          filters:
            - StripPrefix=1
        - id: nf-flms-order
          uri: lb://nf-flms-order
          order: 2
          predicates:
            - Path=/order/**
          filters:
            - StripPrefix=1
        - id: nf-flms-statistics
          uri: lb://nf-flms-statistics
          order: 3
          predicates:
            - Path=/statistics/**
          filters:
            - StripPrefix=1
        - id: nf-flms-openapi
          uri: lb://nf-flms-openapi
          order: 4
          predicates:
            - Path=/openapi/**
          filters:
            - StripPrefix=1
```
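Each route matches on the first path segment, and `StripPrefix=1` removes that segment before the request is load-balanced (`lb://`) to the service registered in Nacos. The shell function below only mimics that mapping for the four routes above, to make it easy to see which backend path a gateway URL ends up at; `route` is a hypothetical helper and not how Spring Cloud Gateway is implemented.

```shell
#!/usr/bin/env bash
# Illustrative only: map a gateway request path to "<route uri> <forwarded path>"
# for the Path=/<segment>/** predicates with StripPrefix=1 configured above.
route() {
  local path="$1" first rest
  first="${path#/}"          # drop the leading slash
  first="${first%%/*}"       # keep the first segment, e.g. "order"
  rest="${path#/"$first"}"   # what StripPrefix=1 leaves behind
  case "$first" in
    system|order|statistics|openapi)
      printf 'lb://nf-flms-%s %s\n' "$first" "${rest:-/}" ;;
    *)
      echo "no matching route" >&2
      return 1 ;;
  esac
}

route /order/api/list
```

For example, a request to `https://prod-api.hmallleasing.com/order/api/list` would reach an `nf-flms-order` instance as `/api/list`.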

# 2.4.3 nf-flms-order-prd.yml

```yaml
spring:
  datasource:
    url: jdbc:mysql://mysql-nf-flms-0.mysql-nf-flms-svc.prod.svc.cluster.local:3306/nf-flms?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false
    username: root
    password: Superman*2023
swagger:
  enabled: false
  title: 订单服务接口
  description: 订单服务接口
  version: 1.0.0.SNAPSHOT
  base-package: com.timedigit
  authorization:
    name: Authorization
    key-name: Authorization
    auth-regex: ^.*$
prometheus:
  enabled: false
  project-enviroment: ${spring.profiles.active}
  project-name: ${spring.application.name}
# Document (archive) storage
document:
  path: /data/
timedigit:
  job:
    adminAddresses: http://xxljob-svc.prod.svc.cluster.local:8080/xxl-job-admin
    appName: nf-flms
    ip:
    port: 9996
    logPath: /logs/jobhandler
    accessToken:
    logRetentionDays: 5
sign:
  saas:
    #appId: 7438855101
    #appKey: c8def27d26d9493d745cfba4a96fa3b5
    appId: 7438905950
    appKey: 9554565359962695be2842dda660587a
    host: https://smlopenapi.esign.cn
bairong:
  apiCode: 3030942
  appKey: f997bd90b4457e7407b249a228d903e35f71acf7a65544c08620fa41e32e1867
  url: https://sandbox-api2.100credit.cn
  strategyId: STR_BR0003107
  confId: MCP_BR0001643
  befor:
    path: /strategy_api/v3/hx_query
  valid:
    path: /infoverify/v3/info_verify
knife4j:
  enable: true
  setting:
    language: zh-CN
    enableVersion: true
    enableSearch: true
    enableFooter: false
    enableFooterCustom: true
    footerCustomContent: Copyright 2020-[深圳市租享生活科技有限公司](https://www.qiaozuji.com)
  basic:
    enable: false
```

# 2.4.4 nf-flms-statistics-prd.yml

spring: | |

datasource: | |

url: jdbc:mysql://mysql-nf-flms-0.mysql-nf-flms-svc.prod.svc.cluster.local:3306/nf-flms?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false | |

username: root | |

password: Superman*2023 | |

swagger: | |

enabled: false | |

title: 统计服务接口 | |

description: 统计服务接口 | |

version: 1.0.0.SNAPSHOT | |

base-package: com.timedigit | |

authorization: | |

name: Authorization | |

key-name: Authorization | |

auth-regex: ^.*$ | |

prometheus: | |

enabled: false | |

project-enviroment: ${spring.profiles.active} | |

project-name: ${spring.application.name} | |

timedigit: | |

job: | |

adminAddresses: http://xxljob-svc.prod.svc.cluster.local:8080/xxl-job-admin | |

appName: nf-flms-statistics | |

ip: | |

port: 9996 | |

logPath: /logs/jobhandler | |

accessToken: | |

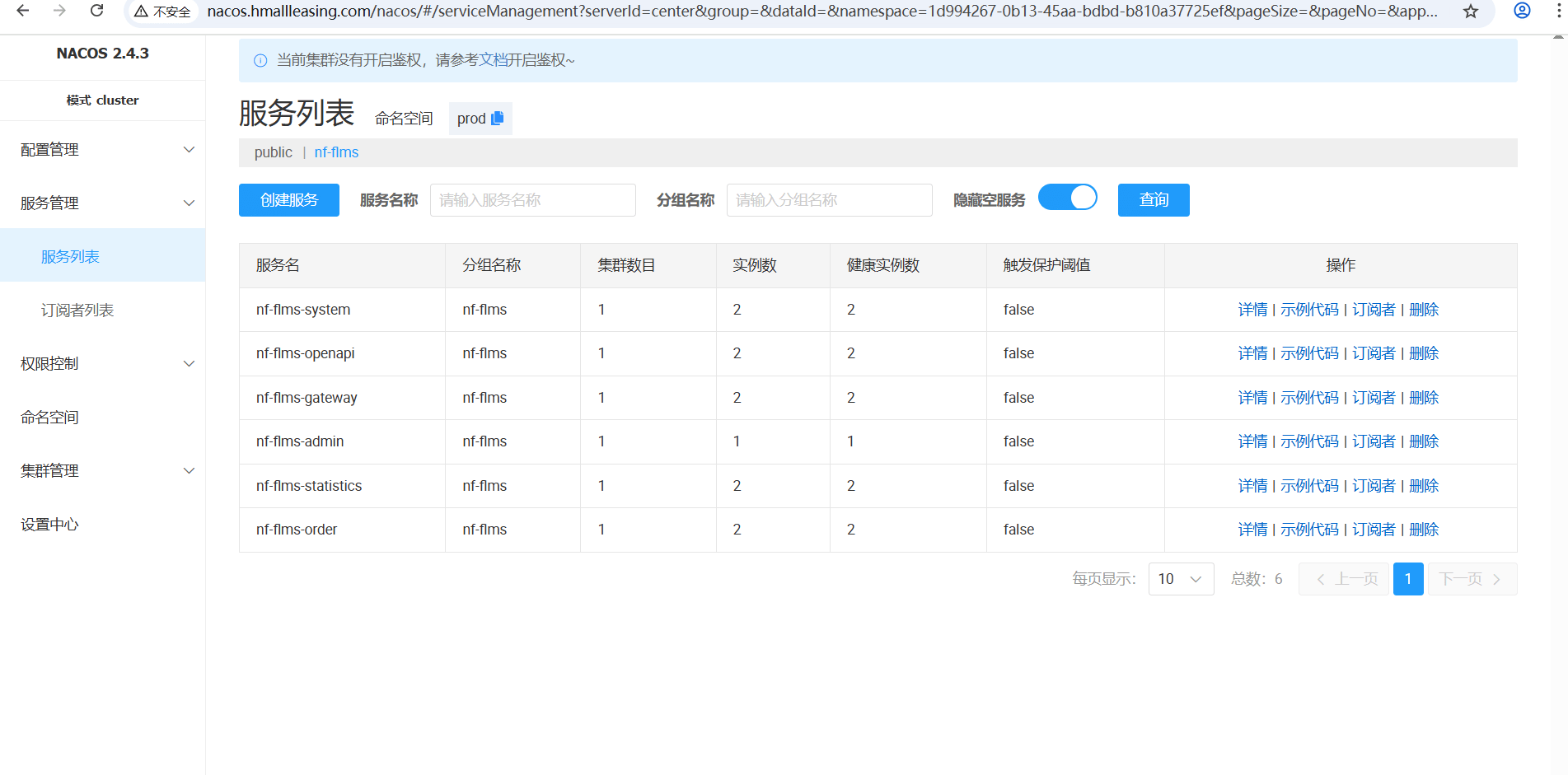

logRetentionDays: 5 | |

knife4j: | |

enable: true | |

setting: | |

language: zh-CN | |

enableVersion: true | |

enableSearch: true | |

enableFooter: false | |

enableFooterCustom: true | |

footerCustomContent: Copyright 2020-[深圳市租享生活科技有限公司](https://www.qiaozuji.com) | |

basic: | |

enable: false |

# 2.4.5 nf-flms-system-prd.yml

```yaml
spring:
  datasource:
    url: jdbc:mysql://mysql-nf-flms-0.mysql-nf-flms-svc.prod.svc.cluster.local:3306/nf-flms?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false
    username: root
    password: Superman*2023
swagger:
  enabled: false
  title: 系统服务接口
  description: 系统服务接口
  version: 1.0.0.SNAPSHOT
  base-package: com.timedigit
  authorization:
    name: Authorization
    key-name: Authorization
    auth-regex: ^.*$
prometheus:
  enabled: false
  project-enviroment: ${spring.profiles.active}
  project-name: ${spring.application.name}
knife4j:
  enable: true
  setting:
    language: zh-CN
    enableVersion: true
    enableSearch: true
    enableFooter: false
    enableFooterCustom: true
    footerCustomContent: Copyright 2020-[深圳市租享生活科技有限公司](https://www.qiaozuji.com)
  basic:
    enable: false
```

# 2.4.6 nf-flms-openapi-prd.yml

```yaml
spring:
  datasource:
    url: jdbc:mysql://mysql-nf-flms-0.mysql-nf-flms-svc.prod.svc.cluster.local:3306/nf-flms?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false
    username: root
    password: Superman*2023
swagger:
  enabled: false
  title: 订单服务接口
  description: 订单服务接口
  version: 1.0.0.SNAPSHOT
  base-package: com.timedigit
  authorization:
    name: Authorization
    key-name: Authorization
    auth-regex: ^.*$
prometheus:
  enabled: false
  project-enviroment: ${spring.profiles.active}
  project-name: ${spring.application.name}
timedigit:
  job:
    adminAddresses: http://xxljob-svc.prod.svc.cluster.local:8080/xxl-job-admin
    appName: nf-flms
    ip:
    port: 9996
    logPath: /logs/jobhandler
    accessToken:
    logRetentionDays: 5
# Ant blockchain auto-debit (withholding)
ant:
  blockchainv2:
    callbackKey: MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAgi1cntxez3ul/BPoOBpp2/4VczWnBZ2Hv1+r7hbsOjYqflOsBQYid/p8bN7WlyZ6QgwQO32288mORWH6scXBDAMc5g+nn4rBhSOqDHh0ZxVnf+RTqSMpp+207DcCO8MbEP5EucpYOsTIvOqufSY7QkTMcaNfaYxxTPLi20Y+VKY/EsB+m8UpY93f9cxKl8vwmpJUfOtU3ENxKfrAZhm1+h4QcFy5W7ERae1Htk40bMLCvWFCrNkhTXQ0LY9bJAPLGlr8zqv0Vb7PxauNdStgIuM7SPdFQ+ZJisj7kbvfeUbPFWMRRBJaBJJWmZYWsKP7RF1f7wSocZxodEUHyJp8IwIDAQAB
    #callbackKey: MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEAigh2X40D7Rm6zu3UFkFVnnLU0higr3Q/IRN+qyAOO2CGddQ1xhyqNr82mYUHcUvKUkTV/QDi3yvfMfo2DUaffURWab0Ucth02mz70Jknse/BmkZLX0r3jiJGDErbwP1xb149GwkgM3ffwDB+LhXe/4Y0cOXJV2Qen0p3Krl5I5QFiuGfFNHPHsBJ6WRMNie8J/rvdOriVZlYmevzDxbeuvsdrXqLRIiLazvK1B0+8NcGhInCkVLFw/Zvu7piCUkyh01AyVDB13Qau6M4l93usp5jQXcTLLxMhjJTnO1L2kwGUCekKgutLbUXLa0Ar8DHrD6Z2sw8iz2hVJUXjufYMwIDAQAB
knife4j:
  enable: true
  setting:
    language: zh-CN
    enableVersion: true
    enableSearch: true
    enableFooter: false
    enableFooterCustom: true
    footerCustomContent: Copyright 2020-[深圳市租享生活科技有限公司](https://www.qiaozuji.com)
  basic:
    enable: false
```

# 2.4.7 nf-flms-admin-prd.yml

```yaml
spring:
  security:
    user:
      name: admin
      password: 4Q3NGIqsnU3Arwg9
  boot:
    admin:
      ui:
        title: '俏租机 服务状态监控'
        brand: '俏租机 服务状态监控'
```

# 2.4.8 nf-flms-file-prd.yml

```yaml
spring:
  datasource:
    url: jdbc:mysql://mysql-nf-flms-0.mysql-nf-flms-svc.prod.svc.cluster.local:3306/nf-flms?serverTimezone=Asia/Shanghai&useUnicode=true&characterEncoding=utf8&useSSL=false
    username: root
    password: Superman*2023
swagger:
  enabled: false
  title: 文件服务接口
  description: 文件服务接口
  version: 1.0.0.SNAPSHOT
  base-package: com.timedigit
  authorization:
    name: Authorization
    key-name: Authorization
    auth-regex: ^.*$
prometheus:
  enabled: false
  project-enviroment: ${spring.profiles.active}
  project-name: ${spring.application.name}
timedigit:
  job:
    # NOTE: the other services use http://xxljob-svc.prod.svc.cluster.local:8080/xxl-job-admin;
    # check this address and appName against your environment.
    adminAddresses: http://192.168.1.70:30959/xxl-job-admin/
    appName: nf-flms-statistics
    ip:
    port: 9996
    logPath: /logs/jobhandler
    accessToken:
    logRetentionDays: 5
knife4j:
  enable: true
  setting:
    language: zh-CN
    enableVersion: true
    enableSearch: true
    enableFooter: false
    enableFooterCustom: true
    footerCustomContent: Copyright 2020-[深圳市租享生活科技有限公司](https://www.qiaozuji.com)
  basic:
    enable: false
# Document (archive) storage
document:
  path: /data/
```

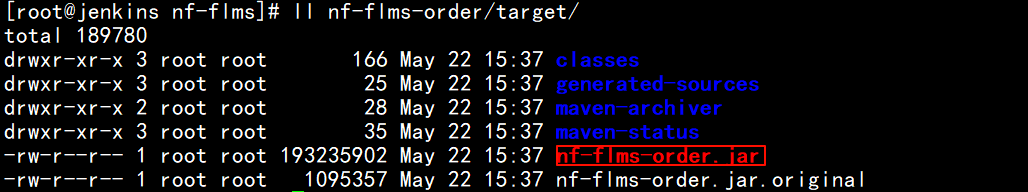

# 2.5 Build the images

# 2.5.1 Build nf-flms-order

```shell
# vim Dockerfile
[root@jenkins nf-flms-order]# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-order.jar nf-flms-order.jar
EXPOSE 8080
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-order.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-order
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-order:v2.0 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-order:v2.0
```

# 2.5.2 Build nf-flms-statistics

```shell
# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-statistics.jar nf-flms-statistics.jar
EXPOSE 8080
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-statistics.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-statistics
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-statistics:v1 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-statistics:v1
```

# 2.5.3 Build nf-flms-system

```shell
# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-system.jar nf-flms-system.jar
EXPOSE 30011
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-system.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-system
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-system:v1 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-system:v1
```

# 2.5.4 Build nf-flms-openapi

```shell
# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-openapi.jar nf-flms-openapi.jar
EXPOSE 30022
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-openapi.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-openapi
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-openapi:v1 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-openapi:v1
```

# 2.5.5 Build nf-flms-gateway

```shell
# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-gateway.jar nf-flms-gateway.jar
EXPOSE 8080
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-gateway.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-gateway
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-gateway:v2 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-gateway:v2
```

# 2.5.6 Build nf-flms-file

```shell
# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-file.jar nf-flms-file.jar
EXPOSE 30017
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-file.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-file
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-file:v1 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-file:v1
```

# 2.5.7 Build nf-flms-admin

```shell
# cat Dockerfile
FROM openjdk:8-jre
VOLUME /tmp
COPY target/nf-flms-admin.jar nf-flms-admin.jar
EXPOSE 30013
CMD java $JAVA_OPTS -Djava.security.egd=file:/dev/./urandom -jar nf-flms-admin.jar
# pwd
/root/qzj-system-back/nf-flms/nf-flms-admin
# docker build -t registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-admin:v1 .
# docker login --username=xyapples@163.com registry.cn-hangzhou.aliyuncs.com
# docker push registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-admin:v1
```

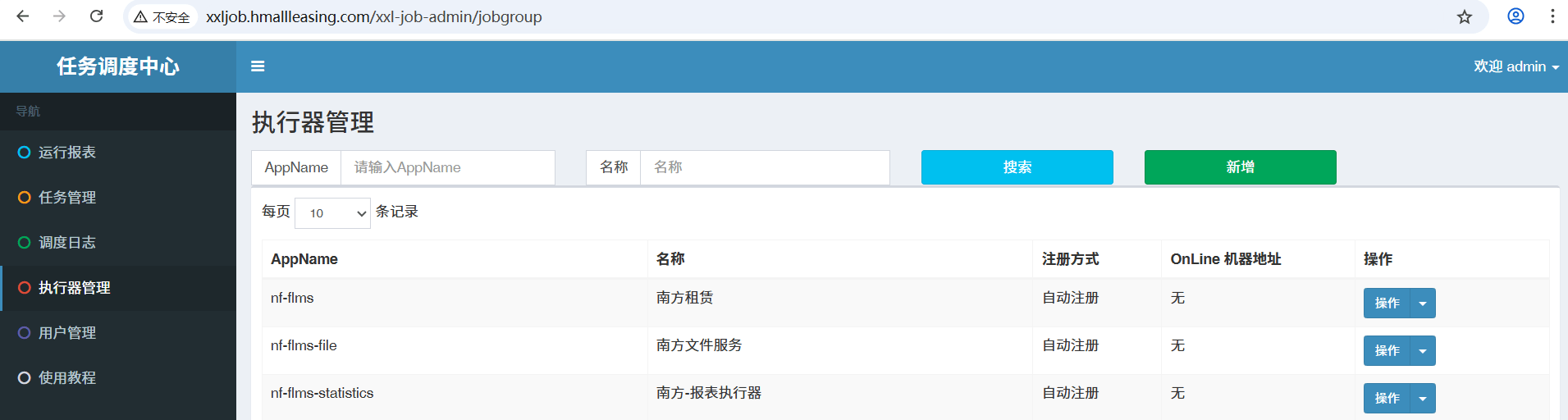
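The seven build sections differ only in the module name and tag, so they can be scripted. The sketch below prints the `docker build` and `docker push` commands instead of running them; pipe its output to `bash` to execute. Using one `TAG` for every module is an assumption (the sections above use different tags per module), and it presumes `mvn package` from 2.3 has already produced each `target/*.jar` and that `docker login` has been run once.

```shell
#!/usr/bin/env bash
# Dry run: print build and push commands for every nf-flms module.
REGISTRY="registry.cn-hangzhou.aliyuncs.com/kubernetes_public"
SRC_ROOT="/root/qzj-system-back/nf-flms"
TAG="${TAG:-v1}"   # assumed tag; override with TAG=... in the environment

build_cmds() {
  local module="$1" tag="$2"
  # The module directory is the build context, so COPY target/<module>.jar resolves.
  printf 'docker build -t %s/%s:%s %s/%s\n' "$REGISTRY" "$module" "$tag" "$SRC_ROOT" "$module"
  printf 'docker push %s/%s:%s\n' "$REGISTRY" "$module" "$tag"
}

for module in nf-flms-order nf-flms-statistics nf-flms-system nf-flms-openapi \
              nf-flms-gateway nf-flms-file nf-flms-admin; do
  build_cmds "$module" "$TAG"
done
```

Printing first and executing second keeps a reviewable record of exactly which tags are about to be pushed.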

# 2.6 Configure the XXL-JOB executors

# III. Deploy the microservice applications

# 3.1 Create the Secrets

```shell
# sed -i "s#dev#prod#g" *.yaml
# kubectl create secret tls prod-api.hmallleasig.com --key hmallleasing.com.key --cert hmallleasing.com.pem -n prod
# kubectl create secret docker-registry harbor-admin --docker-server=registry.cn-hangzhou.aliyuncs.com --docker-username=xyapples@163.com --docker-password=passwd -n prod
```
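Note that `sed -i "s#dev#prod#g" *.yaml` replaces every occurrence of `dev`, including the JVM option `-Djava.security.egd=file:/dev/./urandom` in the Deployment commands, which would become `file:/prod/./urandom`. A narrower sketch, assuming GNU sed (`dev_to_prod` is a hypothetical helper), skips `dev` when it directly follows a slash:

```shell
#!/usr/bin/env bash
# Replace "dev" with "prod" in the given files, except right after "/",
# so device paths such as /dev/./urandom are left untouched. Requires GNU sed.
dev_to_prod() {
  sed -i -E 's#(^|[^/])dev#\1prod#g' "$@"
}
```

Usage: `dev_to_prod *.yaml`, then review the result with `grep -n dev *.yaml`; words that merely contain `dev` (for example `device`) would still be rewritten.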

# 3.2 Create nf-flms-gateway

```shell
# kubectl apply -f 01-nf-flms-gateway.yaml
deployment.apps/nf-flms-gateway created
service/gateway-svc created
ingress.networking.k8s.io/gateway-ingress created
[root@k8s-master01 06-service-all]# cat 01-nf-flms-gateway.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nf-flms-gateway
  namespace: prod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nf-flms-gateway
  template:
    metadata:
      labels:
        app: nf-flms-gateway
    spec:
      imagePullSecrets:
      - name: harbor-admin
      containers:
      - name: nf-flms-gateway
        image: registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-gateway:v2.2
        command:
        - "/bin/sh"
        - "-c"
        - "java -Xms256m -Xmx1024m -Dspring.profiles.active=prd -Djava.security.egd=file:/dev/./urandom -jar -Duser.timezone=GMT+08 nf-flms-gateway.jar"
        resources:
          limits:
            cpu: '1000m'
            memory: 1Gi
          requests:
            cpu: "200m"
            memory: "500Mi"
        ports:
        - containerPort: 8080
        readinessProbe:    # readiness probe: a pod that is not ready is removed from Service load balancing
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 30
          timeoutSeconds: 3
          successThreshold: 1
          failureThreshold: 2
        livenessProbe:     # liveness probe: the container is restarted when it fails
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 30
          timeoutSeconds: 3
          successThreshold: 1
          failureThreshold: 2
        volumeMounts:
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
---
apiVersion: v1
kind: Service
metadata:
  name: gateway-svc
  namespace: prod
spec:
  selector:
    app: nf-flms-gateway
  ports:
  - port: 8080
    targetPort: 8080
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: gateway-ingress
  namespace: prod
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"   # disable the forced HTTPS redirect
spec:
  ingressClassName: "nginx"
  rules:
  - host: "prod-api.hmallleasing.com"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: gateway-svc
            port:
              number: 8080
  tls:   # HTTPS
  - hosts:
    - prod-api.hmallleasing.com
    secretName: "prod-api.hmallleasig.com"   # may be omitted if the ingress controller has a default certificate
```
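A sizing note on the Deployment above: the JVM is started with `-Xmx1024m` while `limits.memory` is `1Gi` (1024 MiB), so the heap alone may grow to the whole container limit. Metaspace, thread stacks, the code cache and direct buffers live outside the heap, so the container can be OOM-killed before the heap is full. A rough headroom check (the helper is illustrative; the right margin depends on each service):

```shell
#!/usr/bin/env bash
# Memory left for non-heap JVM usage: container limit minus -Xmx, both in MiB.
heap_headroom_mib() {
  local limit_mib="$1" xmx_mib="$2"
  echo $(( limit_mib - xmx_mib ))
}

echo "gateway headroom: $(heap_headroom_mib 1024 1024) MiB"   # 1Gi limit, -Xmx1024m
```

Lowering `-Xmx` (for example to `768m`) or replacing it with `-XX:MaxRAMPercentage` (available since JDK 8u191) leaves room for non-heap memory; the same applies to the other services that use `-Xmx1024m` with a `1Gi` limit.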

# 3.3 Create nf-flms-statistics

```yaml
# cat 03-nf-flms-statistics.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nf-flms-statistics
  namespace: prod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nf-flms-statistics
  template:
    metadata:
      labels:
        app: nf-flms-statistics
    spec:
      imagePullSecrets:
      - name: harbor-admin
      containers:
      - name: nf-flms-statistics
        image: registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-statistics:v2.0
        command:
        - "/bin/sh"
        - "-c"
        - "java -Xms256m -Xmx1024m -Dspring.profiles.active=prd -Djava.security.egd=file:/dev/./urandom -Duser.timezone=GMT+08 -jar nf-flms-statistics.jar"
        resources:
          limits:
            cpu: '1000m'
            memory: 1Gi
          requests:
            cpu: "200m"
            memory: "500Mi"
        ports:
        - containerPort: 8080
        readinessProbe:  # Readiness probe: the Pod is removed from Service load balancing while it fails
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 30
          timeoutSeconds: 3
          successThreshold: 1
          failureThreshold: 2
        livenessProbe:  # Liveness probe: the container is restarted when it fails
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 30
          timeoutSeconds: 3
          successThreshold: 1
          failureThreshold: 2
        volumeMounts:
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
```
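Both probes in this Deployment use `initialDelaySeconds: 60`, `periodSeconds: 30`, `timeoutSeconds: 3` and `failureThreshold: 2`. The sketch below estimates how long Kubernetes takes to act on those settings.

It assumes probe attempts fire exactly every `periodSeconds`, and that a failed attempt takes between 0 s (connection refused) and `timeoutSeconds`. Real kubelet timing adds some jitter, so treat the results as estimates. `Probe`, `never_up_window` and `detection_window` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Probe:
    initial_delay: int      # initialDelaySeconds
    period: int             # periodSeconds
    timeout: int            # timeoutSeconds
    failure_threshold: int  # failureThreshold


def never_up_window(p: Probe) -> Tuple[int, int]:
    """Seconds after container start until the probe is declared failed if the
    port never opens: the first attempt runs at initial_delay, and
    failure_threshold consecutive attempts must fail."""
    last_attempt = p.initial_delay + (p.failure_threshold - 1) * p.period
    return last_attempt, last_attempt + p.timeout


def detection_window(p: Probe) -> Tuple[int, int]:
    """Seconds from a running app going unhealthy until the probe is declared failed.
    Fastest: it breaks just before an attempt; slowest: just after a successful one."""
    fastest = (p.failure_threshold - 1) * p.period
    slowest = p.failure_threshold * p.period + p.timeout
    return fastest, slowest


if __name__ == "__main__":
    probe = Probe(initial_delay=60, period=30, timeout=3, failure_threshold=2)
    print(never_up_window(probe))   # (90, 93)
    print(detection_window(probe))  # (30, 63)
```

Under this model, the liveness probe restarts a container whose JVM has not opened port 8080 within roughly 90 to 93 seconds of starting. If the services can take longer than that to start under the `200m` CPU request, raising `initialDelaySeconds` or adding a `startupProbe` avoids a restart loop.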

# 3.4 Create nf-flms-order

# 3.4.1 Create the PVC

```yaml
# cat 02-data-image.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-image
  namespace: prod
spec:
  storageClassName: "nfs-storage"  # Explicitly name the StorageClass whose provisioner creates the PV
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 2Gi  # Size the request to the application's actual data volume
```
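The `storage: 2Gi` request above is a Kubernetes resource quantity, as are the `memory: 1Gi`, `memory: "500Mi"` and `cpu: "200m"` values in the Deployments. The suffixes work as follows:

- Binary suffixes (`Ki`, `Mi`, `Gi`, ...) are powers of 1024.
- Decimal suffixes (`k`, `M`, `G`, ...) are powers of 1000.
- `m` means one thousandth.

So `2Gi` is slightly more than `2G`, and `200m` CPU is 0.2 of a core. The sketch below converts the forms used in this document. `parse_quantity` is a hypothetical helper covering only a subset of the format: no exponent notation and no `n`, `u`, `P` or `E` suffixes.

```python
import re
from decimal import Decimal

# Multipliers for the suffixes used in this document (a subset of the Kubernetes quantity format).
SUFFIXES = {
    "": Decimal(1),
    "m": Decimal("0.001"),  # milli, e.g. CPU "200m"
    "k": Decimal(1000), "M": Decimal(1000) ** 2, "G": Decimal(1000) ** 3, "T": Decimal(1000) ** 4,
    "Ki": Decimal(1024), "Mi": Decimal(1024) ** 2, "Gi": Decimal(1024) ** 3, "Ti": Decimal(1024) ** 4,
}

_QUANTITY = re.compile(r"(\d+(?:\.\d+)?)(Ki|Mi|Gi|Ti|m|k|M|G|T)?")


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity such as '2Gi', '500Mi' or '200m' to a plain number
    (bytes for memory and storage, cores for CPU)."""
    match = _QUANTITY.fullmatch(quantity.strip())
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return Decimal(number) * SUFFIXES[suffix or ""]


if __name__ == "__main__":
    print(parse_quantity("2Gi"))                           # 2147483648
    print(parse_quantity("500Mi"))                         # 524288000
    print(parse_quantity("1000m") == parse_quantity("1"))  # True
```

`Decimal` is used instead of `float` so that milli-values such as `200m` compare exactly.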

# 3.4.2 Create the nf-flms-order Deployment

```yaml
# cat 02-nf-flms-order.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nf-flms-order
  namespace: prod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nf-flms-order
  template:
    metadata:
      labels:
        app: nf-flms-order
    spec:
      imagePullSecrets:
      - name: harbor-admin
      containers:
      - name: nf-flms-order
        image: registry.cn-hangzhou.aliyuncs.com/kubernetes_public/nf-flms-order:v2.0
        command:
        - "/bin/sh"
        - "-c"
        - "java -Xms256m -Xmx1024m -Dspring.profiles.active=prd -Djava.security.egd=file:/dev/./urandom -Duser.timezone=GMT+08 -jar nf-flms-order.jar"
        resources:
          limits:
            cpu: '1000m'
            memory: 1Gi
          requests:
            cpu: "200m"
            memory: "500Mi"
        ports:
        - containerPort: 8080
        readinessProbe:  # Readiness probe: the Pod is removed from Service load balancing while it fails
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 30
          timeoutSeconds: 3
          successThreshold: 1
          failureThreshold: 2
        livenessProbe:  # Liveness probe: the container is restarted when it fails
          tcpSocket:
            port: 8080
          initialDelaySeconds: 60
          periodSeconds: 30
          timeoutSeconds: 3
          successThreshold: 1
          failureThreshold: 2
        volumeMounts:
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
        - name: data-image
          mountPath: /data
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
      - name: data-image
        persistentVolumeClaim:
          claimName: data-image  # ReadWriteMany claim, so both replicas can mount it
```
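Both service Deployments start the JVM with `-Xmx1024m` inside a container limited to `memory: 1Gi`, so the maximum heap equals the entire limit. The JVM also needs memory outside the heap (metaspace, thread stacks, JIT code cache, direct buffers). A heap that grows to its maximum can therefore push the container over its limit and get it OOMKilled.

The sketch below computes how much of the limit is left for non-heap memory. `max_heap_bytes` and `non_heap_headroom_mib` are hypothetical helpers. The sketch reads only the last `-Xmx` flag on the command line, which is the one the JVM applies, and supports the `k`, `m` and `g` suffixes.

```python
import re
import shlex
from typing import Optional

# JVM size suffixes (case-insensitive); a bare number means bytes.
_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
_XMX = re.compile(r"-Xmx(\d+)([kKmMgG]?)")


def max_heap_bytes(java_cmd: str) -> Optional[int]:
    """Return the effective -Xmx value in bytes (the last occurrence wins), or None."""
    heap = None
    for token in shlex.split(java_cmd):
        match = _XMX.fullmatch(token)
        if match:
            heap = int(match.group(1)) * _UNITS[match.group(2).lower()]
    return heap


def non_heap_headroom_mib(java_cmd: str, limit_mib: int) -> float:
    """MiB of the container memory limit left once the heap reaches -Xmx."""
    heap = max_heap_bytes(java_cmd)
    if heap is None:
        raise ValueError("no -Xmx flag in the command")
    return limit_mib - heap / 1024 ** 2


if __name__ == "__main__":
    cmd = ("java -Xms256m -Xmx1024m -Dspring.profiles.active=prd "
           "-Djava.security.egd=file:/dev/./urandom -Duser.timezone=GMT+08 "
           "-jar nf-flms-order.jar")
    print(non_heap_headroom_mib(cmd, limit_mib=1024))  # 0.0 (memory: 1Gi = 1024 MiB)
```

A headroom of 0 MiB, as here, suggests either lowering `-Xmx` or raising the memory limit. If the image's JDK supports it (JDK 10+, or 8u191+), `-XX:MaxRAMPercentage` can instead size the heap as a fraction of the container limit.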