# Deploying a RabbitMQ Cluster on Kubernetes

# 1. Create the RBAC permissions

```yaml
# cat 01-rabbitmq-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rabbitmq-cluster
  namespace: prod
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: rabbitmq-cluster
  namespace: prod
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rabbitmq-cluster
  namespace: prod
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rabbitmq-cluster
subjects:
- kind: ServiceAccount
  name: rabbitmq-cluster
  namespace: prod
```
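The Role grants only `get` on `endpoints`. As I understand it, the `rabbitmq_peer_discovery_k8s` plugin finds its peers by reading the Endpoints object of the headless Service through the Kubernetes API, and that read is all it needs. You can confirm the RoleBinding works by letting `kubectl auth can-i` impersonate the ServiceAccount. The helper below only builds the impersonated user name. `sa_identity` is a hypothetical name and not a kubectl feature.

```shell
# Build the user name Kubernetes assigns to a ServiceAccount:
#   system:serviceaccount:<namespace>:<name>
# sa_identity is a hypothetical helper for illustration.
sa_identity() {
  ns=$1
  sa=$2
  printf 'system:serviceaccount:%s:%s\n' "$ns" "$sa"
}
```

With cluster access, `kubectl auth can-i get endpoints -n prod --as="$(sa_identity prod rabbitmq-cluster)"` should print `yes`. If it prints `no`, peer discovery will most likely fail too.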

# 2. Create the cluster Secret

```yaml
# cat 02-rabbitmq-secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: rabbitmq-secret
  namespace: prod
stringData:
  password: talent
  url: amqp://RABBITMQ_USER:RABBITMQ_PASS@rmq-cluster-balancer
  username: superman
type: Opaque
```
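The `url` entry is a template. `RABBITMQ_USER` and `RABBITMQ_PASS` are placeholders, and nothing in these manifests substitutes them. Its host, `rmq-cluster-balancer`, also differs from the `rabbitmq-cluster-balancer` Service created in step 4. Below is a sketch of building a concrete URL. It assumes you want the credentials percent-encoded, since a password containing `@`, `:` or `/` would otherwise break the URL. `urlencode` and `amqp_url` are hypothetical helpers.

```shell
# Percent-encode a string for the userinfo part of a URL,
# keeping only RFC 3986 unreserved characters as-is.
urlencode() {
  S="$1" LC_ALL=C awk 'BEGIN {
    s = ENVIRON["S"]                      # read via ENVIRON: no escape processing
    for (i = 1; i < 256; i++) ord[sprintf("%c", i)] = i
    out = ""
    for (i = 1; i <= length(s); i++) {
      c = substr(s, i, 1)
      if (c ~ /[A-Za-z0-9._~-]/) out = out c
      else out = out sprintf("%%%02X", ord[c])
    }
    print out
  }'
}

# Build amqp://user:pass@host (default port and vhost).
# amqp_url is a hypothetical helper.
amqp_url() {
  printf 'amqp://%s:%s@%s\n' "$(urlencode "$1")" "$(urlencode "$2")" "$3"
}
```

For example, `amqp_url superman talent rabbitmq-cluster-balancer` prints `amqp://superman:talent@rabbitmq-cluster-balancer`. You can read the stored values back with `kubectl get secret rabbitmq-secret -n prod -o jsonpath='{.data.password}' | base64 -d`.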

# 3. Create the ConfigMap

```yaml
# cat 03-rabbitmq-cm.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: rabbitmq-cluster-config
  namespace: prod
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  enabled_plugins: |
    [rabbitmq_management,rabbitmq_peer_discovery_k8s].
  rabbitmq.conf: |
    loopback_users.guest = false
    default_user = RABBITMQ_USER
    default_pass = RABBITMQ_PASS
    ## Cluster
    cluster_formation.peer_discovery_backend = rabbit_peer_discovery_k8s
    cluster_formation.k8s.host = kubernetes.default.svc
    #cluster_formation.k8s.host = kubernetes.default.svc.cluster.local
    cluster_formation.k8s.address_type = hostname
    #################################################
    # prod is rabbitmq-cluster's namespace
    #################################################
    cluster_formation.k8s.hostname_suffix = .rabbitmq-cluster.prod.svc.cluster.local
    cluster_formation.node_cleanup.interval = 30
    cluster_formation.node_cleanup.only_log_warning = true
    cluster_partition_handling = autoheal
    ## queue master locator
    queue_master_locator = min-masters
    cluster_formation.randomized_startup_delay_range.min = 0
    cluster_formation.randomized_startup_delay_range.max = 2
    # memory
    vm_memory_high_watermark.absolute = 100MB
    # disk
    disk_free_limit.absolute = 2GB
```

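Note: with `cluster_formation.k8s.host` set to `kubernetes.default.svc.cluster.local` in the config file, the nodes repeatedly failed to connect. Switching to `kubernetes.default.svc` fixed it. I am not sure whether this is caused by newer Kubernetes versions.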
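With `cluster_formation.k8s.address_type = hostname`, the plugin (as far as I understand its behavior) builds each peer name in three parts:

- it takes the hostname of each endpoint behind the Service,
- appends `cluster_formation.k8s.hostname_suffix`,
- and prefixes `rabbit@`.

The result has to match the `RABBITMQ_NODENAME` that each Pod sets in the StatefulSet in step 5. Otherwise the discovered peers carry names that no node answers to. The sketch below only reproduces both string constructions so you can compare them. `peer_node_name` and `statefulset_node_name` are hypothetical helpers.

```shell
# Peer name as discovered with address_type = hostname:
#   rabbit@<endpoint hostname><hostname_suffix>
peer_node_name() {
  host=$1     # Pod hostname behind the headless Service, e.g. rabbitmq-cluster-0
  suffix=$2   # value of cluster_formation.k8s.hostname_suffix
  printf 'rabbit@%s%s\n' "$host" "$suffix"
}

# Node name as the StatefulSet sets it:
#   rabbit@$(POD_NAME).$(K8S_SERVICE_NAME).$(POD_NAMESPACE).svc.cluster.local
statefulset_node_name() {
  pod=$1; svc=$2; ns=$3
  printf 'rabbit@%s.%s.%s.svc.cluster.local\n' "$pod" "$svc" "$ns"
}
```

For `rabbitmq-cluster-0` in `prod`, both functions yield `rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local`. That is the name that appears in the `cluster_status` output in step 7. If the cluster moves to another namespace, the suffix in the ConfigMap has to change with it.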

# 4. Create the cluster Services

```yaml
# cat 04-rabbitmq-cluster-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rabbitmq-cluster
  name: rabbitmq-cluster
  namespace: prod
spec:
  clusterIP: None
  ports:
  - name: rmqport
    port: 5672
    targetPort: 5672
  - name: http
    port: 15672
    protocol: TCP
    targetPort: 15672
  selector:
    app: rabbitmq-cluster
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rabbitmq-cluster-balancer
  name: rabbitmq-cluster-balancer
  namespace: prod
spec:
  ports:
  - name: rmqport
    port: 5672
    targetPort: 5672
  - name: http
    port: 15672
    protocol: TCP
    targetPort: 15672
  selector:
    app: rabbitmq-cluster
  type: NodePort
```
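The two Services play different roles:

- `rabbitmq-cluster` is headless (`clusterIP: None`). It is also the StatefulSet's `serviceName`, so each Pod gets a stable DNS record such as `rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local`, and the node names depend on these records.
- `rabbitmq-cluster-balancer` spreads client traffic over all Pods. As a `NodePort` Service, it also exposes 5672 and 15672 on every node, under ports that Kubernetes assigns.

Below is a sketch for finding those ports. It assumes kubectl access to the cluster and a reachable node IP. `nodeport_of` and `mgmt_url` are hypothetical helpers.

```shell
# Print the nodePort Kubernetes assigned to a named port of a Service.
nodeport_of() {
  svc=$1; port_name=$2; ns=${3:-prod}
  kubectl get svc "$svc" -n "$ns" \
    -o jsonpath="{.spec.ports[?(@.name==\"$port_name\")].nodePort}"
}

# Management UI URL on a given node, via the "http" port of the balancer Service.
mgmt_url() {
  node_ip=$1
  printf 'http://%s:%s/\n' "$node_ip" "$(nodeport_of rabbitmq-cluster-balancer http)"
}
```

Run `mgmt_url <node IP>` from a machine with kubectl access. The port in the printed URL is whatever Kubernetes picked for this Service.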

# 5. Create the StatefulSet

```yaml
# cat 05-rabbitmq-cluster-sts.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: rabbitmq-cluster
  name: rabbitmq-cluster
  namespace: prod
spec:
  replicas: 3
  selector:
    matchLabels:
      app: rabbitmq-cluster
  serviceName: rabbitmq-cluster
  template:
    metadata:
      labels:
        app: rabbitmq-cluster
    spec:
      affinity:    # keep the Pods from being scheduled onto the same node
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values: ["rabbitmq-cluster"]
            topologyKey: "kubernetes.io/hostname"
      containers:
      - args:
        - -c
        - cp -v /etc/rabbitmq/rabbitmq.conf ${RABBITMQ_CONFIG_FILE}; exec docker-entrypoint.sh rabbitmq-server
        command:
        - sh
        env:
        - name: RABBITMQ_DEFAULT_USER
          valueFrom:
            secretKeyRef:
              key: username
              name: rabbitmq-secret
        - name: RABBITMQ_DEFAULT_PASS
          valueFrom:
            secretKeyRef:
              key: password
              name: rabbitmq-secret
        - name: TZ
          value: 'Asia/Shanghai'
        - name: RABBITMQ_ERLANG_COOKIE
          value: 'SWvCP0Hrqv43NG7GybHC95ntCJKoW8UyNFWnBEWG8TY='
        - name: K8S_SERVICE_NAME
          value: rabbitmq-cluster
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: RABBITMQ_USE_LONGNAME
          value: "true"
        - name: RABBITMQ_NODENAME
          value: rabbit@$(POD_NAME).$(K8S_SERVICE_NAME).$(POD_NAMESPACE).svc.cluster.local
        - name: RABBITMQ_CONFIG_FILE
          value: /var/lib/rabbitmq/rabbitmq.conf
        image: rabbitmq:3.9-management
        imagePullPolicy: IfNotPresent
        name: rabbitmq
        ports:
        - containerPort: 15672
          name: http
          protocol: TCP
        - containerPort: 5672
          name: amqp
          protocol: TCP
        livenessProbe:
          exec:
            command: ["rabbitmq-diagnostics", "status"]
          initialDelaySeconds: 60
          periodSeconds: 60
          failureThreshold: 2
          timeoutSeconds: 10
        readinessProbe:
          exec:
            command: ["rabbitmq-diagnostics", "status"]
          failureThreshold: 2
          initialDelaySeconds: 60
          periodSeconds: 60
          timeoutSeconds: 10
        volumeMounts:
        - mountPath: /etc/rabbitmq
          name: config-volume
          readOnly: false
        - mountPath: /var/lib/rabbitmq
          name: rabbitmq-storage
          readOnly: false
        - name: tz-config
          mountPath: /usr/share/zoneinfo/Asia/Shanghai
        - name: tz-config
          mountPath: /etc/localtime
        - name: timezone
          mountPath: /etc/timezone
      serviceAccountName: rabbitmq-cluster
      terminationGracePeriodSeconds: 30
      volumes:
      - name: config-volume
        configMap:
          items:
          - key: rabbitmq.conf
            path: rabbitmq.conf
          - key: enabled_plugins
            path: enabled_plugins
          name: rabbitmq-cluster-config
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
          type: ""
      - name: timezone
        hostPath:
          path: /etc/timezone
          type: ""
  volumeClaimTemplates:
  - metadata:
      name: rabbitmq-storage
    spec:
      accessModes:
      - ReadWriteMany
      storageClassName: "nfs-storage"
      resources:
        requests:
          storage: 5Gi
```
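All nodes must share the same Erlang cookie, because it is what lets the nodes and the CLI tools authenticate to each other. This manifest hard-codes the cookie in plain text. The `cluster_status` output in step 7 also warns that the `RABBITMQ_ERLANG_COOKIE` variable is deprecated. If you reuse these manifests, it seems safer to generate your own value than to keep the published one.

Below is a minimal sketch, assuming `dd`, `base64` and `/dev/urandom` are available. It base64-encodes 32 random bytes, giving a 44-character string shaped like the one above. `gen_erlang_cookie` is a hypothetical helper.

```shell
# 32 random bytes, base64-encoded: a 44-character cookie ending in "=".
gen_erlang_cookie() {
  dd if=/dev/urandom bs=32 count=1 2>/dev/null | base64 | tr -d '\n'
}
```

The value could then live in a Secret instead of the manifest, for example with `kubectl create secret generic rabbitmq-erlang-cookie -n prod --from-literal=cookie="$(gen_erlang_cookie)"` (the Secret name is only an example). The StatefulSet would reference it with `secretKeyRef`, the same way it reads `RABBITMQ_DEFAULT_USER`.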

# 6. Create the Ingress

```yaml
# cat 06-rabbitmq-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: rabbitmq-ingress
  namespace: prod
spec:
  ingressClassName: "nginx"
  rules:
  - host: rabbitmq.hmallleasing.com
    http:
      paths:
      - backend:
          service:
            name: rabbitmq-cluster
            port:
              number: 15672
        path: /
        pathType: ImplementationSpecific
```

# 7. Update and apply the manifests

```shell
[root@k8s-master01 04-rabbitmq]# sed -i "s#dev#prod#g" *.yaml
[root@k8s-master01 04-rabbitmq]# kubectl apply -f .
[root@k8s-master01 04-rabbitmq]# kubectl get pods -n prod
NAME                 READY   STATUS    RESTARTS   AGE
rabbitmq-cluster-0   1/1     Running   0          9m53s
rabbitmq-cluster-1   1/1     Running   0          8m47s
rabbitmq-cluster-2   1/1     Running   0          7m40s
```

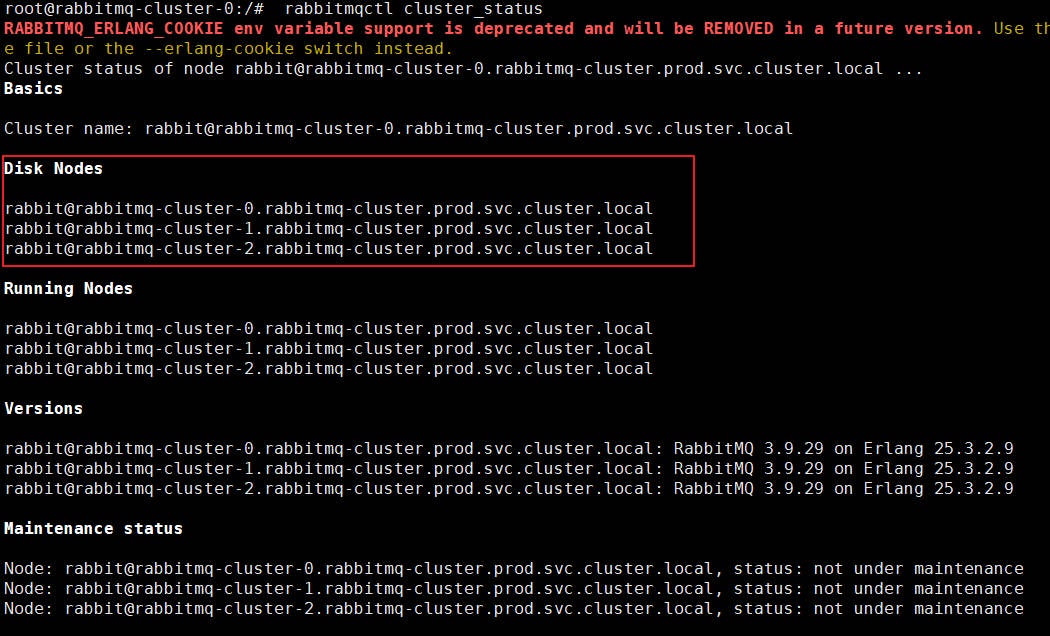
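The `sed -i "s#dev#prod#g"` call rewrites every occurrence of the string `dev`. Presumably this is because the manifests were first written for a `dev` namespace, since the files as shown already use `prod`. That works here, but it would also rewrite any other text that happens to contain `dev`, such as a `/dev/...` path.

Below is a narrower sketch. It assumes GNU sed and the same spelling as the manifests above, and touches only `namespace:` lines and the `.dev.svc.` part of DNS names. Comments, such as the one in the ConfigMap, are left alone. `retarget_namespace` is a hypothetical helper.

```shell
# Change the namespace in manifests from $1 to $2 (GNU sed -i assumed).
# Rewrites only "namespace: <from>" lines and ".<from>.svc." DNS fragments.
retarget_namespace() {
  from=$1; to=$2; shift 2
  sed -i \
    -e "s/^\([[:space:]]*namespace:[[:space:]]*\)$from[[:space:]]*\$/\1$to/" \
    -e "s/\.$from\.svc\./.$to.svc./g" \
    "$@"
}
```

`retarget_namespace dev prod *.yaml` followed by `kubectl apply -f .` mirrors the first two commands above.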

```shell
[root@k8s-master01 04-rabbitmq]# kubectl exec -it rabbitmq-cluster-0 -n prod -- /bin/bash
root@rabbitmq-cluster-0:/# rabbitmqctl cluster_status
RABBITMQ_ERLANG_COOKIE env variable support is deprecated and will be REMOVED in a future version. Use the $HOME/.erlang.cookie file or the --erlang-cookie switch instead.
Cluster status of node rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local ...

Basics
Cluster name: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local

Disk Nodes
rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local

Running Nodes
rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local
rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local

Versions
rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local: RabbitMQ 3.9.29 on Erlang 25.3.2.9
rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local: RabbitMQ 3.9.29 on Erlang 25.3.2.9
rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local: RabbitMQ 3.9.29 on Erlang 25.3.2.9

Maintenance status
Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, status: not under maintenance
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, status: not under maintenance
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, status: not under maintenance

Alarms
Memory alarm on node rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local
Memory alarm on node rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local
Memory alarm on node rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local

Network Partitions
(none)

Listeners
Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 15672, protocol: http, purpose: HTTP API
Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication
Node: rabbit@rabbitmq-cluster-0.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 15672, protocol: http, purpose: HTTP API
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication
Node: rabbit@rabbitmq-cluster-1.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 15672, protocol: http, purpose: HTTP API
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 25672, protocol: clustering, purpose: inter-node and CLI tool communication
Node: rabbit@rabbitmq-cluster-2.rabbitmq-cluster.prod.svc.cluster.local, interface: [::], port: 5672, protocol: amqp, purpose: AMQP 0-9-1 and AMQP 1.0

Feature flags
Flag: drop_unroutable_metric, state: enabled
Flag: empty_basic_get_metric, state: enabled
Flag: implicit_default_bindings, state: enabled
Flag: maintenance_mode_status, state: enabled
Flag: quorum_queue, state: enabled
Flag: stream_queue, state: enabled
Flag: user_limits, state: enabled
Flag: virtual_host_metadata, state: enabled
```

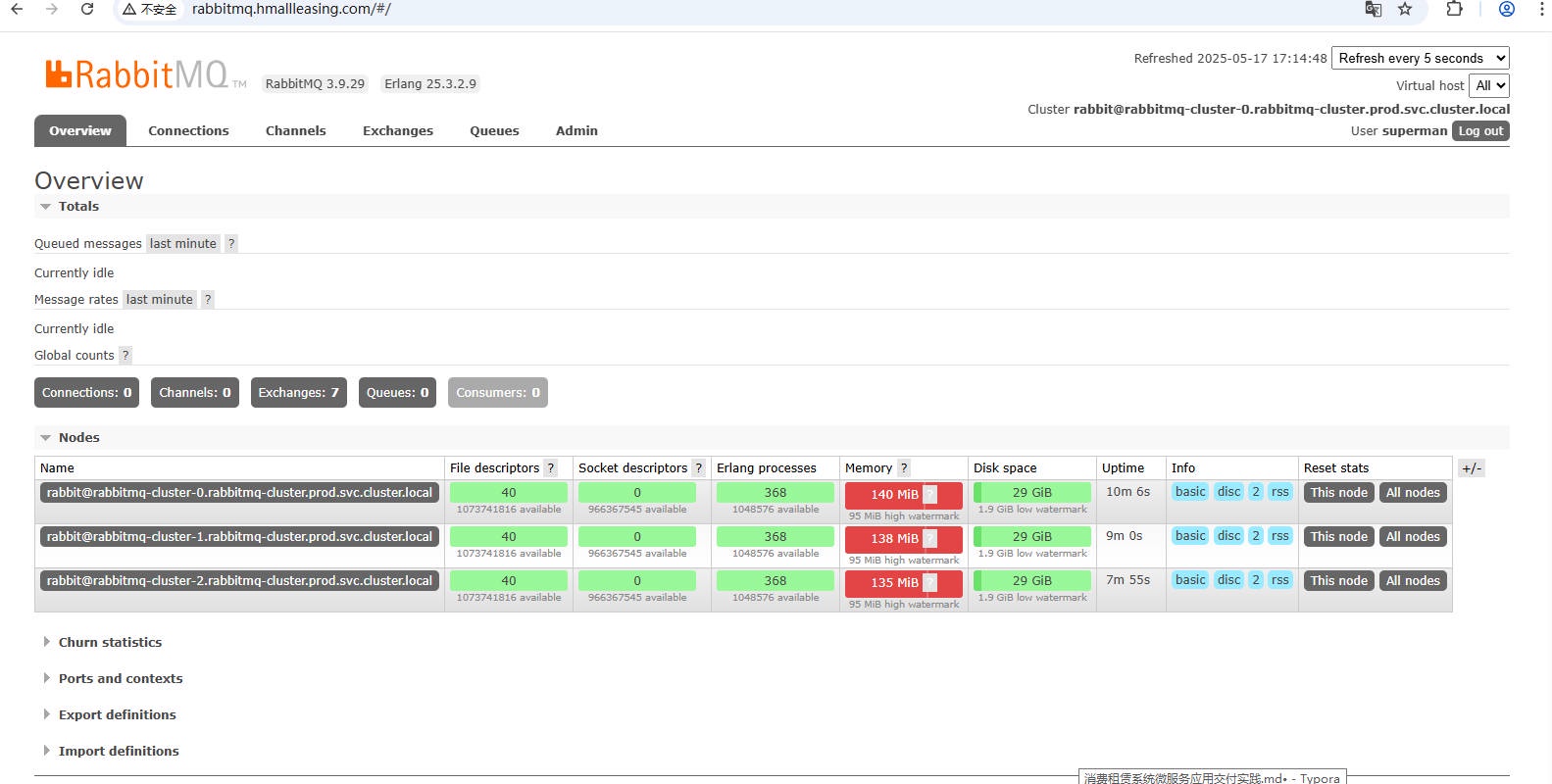
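The output confirms that all three nodes joined, since `Disk Nodes` and `Running Nodes` list the same three names. It also shows a memory alarm on every node, meaning each node already uses more memory than the limit. That limit is likely the `vm_memory_high_watermark.absolute = 100MB` setting in the ConfigMap. While a memory alarm is active, RabbitMQ blocks connections that publish.

For repeated checks, below is a sketch that counts running nodes and alarm lines in the text output shown above. It relies on this layout, which may differ between versions; `rabbitmqctl cluster_status --formatter json` should be sturdier. `count_running_nodes` and `count_alarms` are hypothetical helpers that read the output on stdin.

```shell
# Count node lines in the "Running Nodes" section; the next header ends it.
count_running_nodes() {
  awk '
    /^Running Nodes/     { in_sec = 1; next }
    in_sec && /^rabbit@/ { n++; next }
    in_sec && NF > 0     { in_sec = 0 }
    END { print n + 0 }
  '
}

# Count alarm lines in the "Alarms" section ("(none)" counts as zero).
count_alarms() {
  awk '
    /^Alarms/             { in_sec = 1; next }
    in_sec && /^\(none\)/ { next }
    in_sec && /alarm/     { n++; next }
    in_sec && NF > 0      { in_sec = 0 }
    END { print n + 0 }
  '
}
```

For this cluster, `kubectl exec rabbitmq-cluster-0 -n prod -- rabbitmqctl cluster_status | count_running_nodes` should print `3`.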

# 8. Access RabbitMQ from the web

```text
http://rabbitmq.hmallleasing.com/#/
user: superman
pwd: talent
```
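These credentials are the `username` and `password` from the Secret in step 2. Under the same host, the management plugin also serves an HTTP API, which is handy for scripted checks. Below is a sketch using `curl`. Its `--resolve` option pins the host name to the ingress controller's address, so you can test the Ingress before DNS for `rabbitmq.hmallleasing.com` exists. The ingress address and the helper name `mq_overview` are assumptions.

```shell
# Call the management API's /api/overview endpoint through the Ingress.
# ingress_ip is an assumption: the address the ingress-nginx controller listens on.
mq_overview() {
  host=$1; ingress_ip=$2; user=$3; pass=$4
  curl -fsS --resolve "$host:80:$ingress_ip" \
    -u "$user:$pass" "http://$host/api/overview"
}
```

For example, `mq_overview rabbitmq.hmallleasing.com <ingress IP> superman talent` should return a JSON overview of the cluster.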

# 9. Recovering when every RabbitMQ node is down and the cluster will not restart

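In a Kubernetes environment, the RabbitMQ cluster refused to start after every RabbitMQ instance had gone down. When the whole cluster stops, a restarting node waits for the peer that was last to stop. If that peer never comes back, the node gives up and fails to start.

The fix was to create a `force_load` file under each Pod's persistent storage path, which makes the node boot without waiting:

1. Find the PV storage path.
2. Create the file in the node's Mnesia directory.
3. Restart RabbitMQ.

This brought the cluster back up.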

```shell
[root@k8s-node02 ~]# cd /data/dev-rabbitmq-storage-rabbitmq-cluster-0-pvc-3abca920-3c68-44eb-b0fd-406a4358b153/mnesia/rabbit@rabbitmq-cluster-0.rabbitmq-cluster.dev.svc.cluster.local
[root@k8s-node02 rabbit@rabbitmq-cluster-0.rabbitmq-cluster.dev.svc.cluster.local]# touch force_load
```
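With three replicas, the two commands above have to be repeated for each PVC. Below is a sketch that handles all of them in one pass on the NFS server. It makes two assumptions:

- the directory naming shown above, `<namespace>-rabbitmq-storage-rabbitmq-cluster-<i>-pvc-<uid>`;
- the node names from the StatefulSet.

`force_load_all` is a hypothetical helper.

```shell
# Create force_load in each node's Mnesia directory under an NFS export.
# Assumed layout (taken from the example path above):
#   <base>/<ns>-rabbitmq-storage-rabbitmq-cluster-<i>-pvc-<uid>/mnesia/
#     rabbit@rabbitmq-cluster-<i>.rabbitmq-cluster.<ns>.svc.cluster.local/
force_load_all() {
  base=$1; ns=$2; replicas=${3:-3}
  i=0
  while [ "$i" -lt "$replicas" ]; do
    node="rabbit@rabbitmq-cluster-$i.rabbitmq-cluster.$ns.svc.cluster.local"
    found=0
    for pvc_dir in "$base/$ns-rabbitmq-storage-rabbitmq-cluster-$i-pvc-"*; do
      dir="$pvc_dir/mnesia/$node"
      if [ -d "$dir" ]; then           # an unmatched glob simply fails this test
        touch "$dir/force_load" && echo "created $dir/force_load"
        found=1
      fi
    done
    [ "$found" -eq 1 ] || echo "no Mnesia dir found for $node" >&2
    i=$((i + 1))
  done
}
```

On the node from the example, `force_load_all /data dev 3` would create the three files. Afterwards, restart the Pods, for example by deleting them so the StatefulSet recreates them.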