# Getting Started with Ansible Playbooks (Part 2)

# 1. Ansible Playbook Quick Start

# 1.1 What Is a Playbook

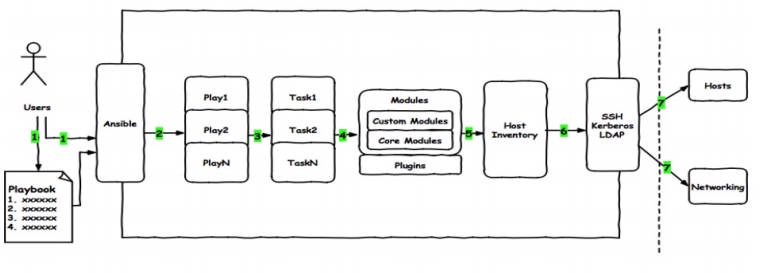

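A playbook is a text file written in YAML. It is built from two kinds of parts, plays and tasks.

play: defines which host or host group to operate on.

task: defines what is actually done on that host or host group. A play can hold a single task or several tasks, each of which calls a module.

In short, a playbook consists of one or more plays, and each play can contain multiple tasks.

You can think of it as several different modules working together to get one job done.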
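To make the play/task relationship concrete, here is a minimal sketch of one playbook file that holds two plays. The group names `webservers` and `dbservers` and the packages are illustrative assumptions; adapt them to your own inventory.

```yaml
# Sketch only: one playbook file, two plays, each with its own tasks.
# Group names and package names are assumptions for illustration.
- hosts: webservers            # play 1: targets the webservers group
  tasks:
    - name: Install Nginx      # task 1 of play 1
      yum:
        name: nginx
        state: present
    - name: Start Nginx        # task 2 of play 1
      systemd:
        name: nginx
        state: started

- hosts: dbservers             # play 2: targets a different group
  tasks:
    - name: Install Redis
      yum:
        name: redis
        state: present
```

Plays run in the order they appear in the file, and the tasks inside each play run from top to bottom.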

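# 1.2 Playbook vs. Ad-Hoc

- A playbook is a way of orchestrating Ad-Hoc commands.
- A playbook is saved in a file and can be run again and again, while an Ad-Hoc command is typed for one-off use.
- Playbooks suit complex jobs, while Ad-Hoc commands suit quick, simple ones.
- A playbook controls the order in which tasks run.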
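The difference can be seen by writing the same action both ways. The sketch below assumes a `webservers` group exists in the inventory; the Ad-Hoc form is shown as a comment so the two can be compared side by side.

```yaml
# Ad-Hoc form (typed once at the shell, not saved anywhere):
#   ansible webservers -m yum -a "name=nginx state=present"
#
# The same action as a playbook task, which can be kept in a file,
# reviewed, and rerun later.
- hosts: webservers
  tasks:
    - name: Install Nginx
      yum:
        name: nginx
        state: present
```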

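# 1.3 Playbook Syntax

Playbooks are written in YAML, which is clearly structured and easy to read, so you need to know the YAML basics first.

| Syntax | Description |
|---|---|
| Indentation | YAML expresses hierarchy through consistent indentation. Use spaces (two per level by convention); tabs are not allowed. |
| Colon | A colon must be followed by a space, unless the colon ends the line. |
| Dash | A dash followed by a space marks a list item. Items at the same indentation level belong to the same list. |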
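The three rules in the table can be seen together in a small fragment. The keys `web`, `server`, and `ports` are made-up names used only to illustrate the syntax.

```yaml
# Sketch only: hypothetical keys, shown to illustrate the syntax rules.
web:                     # a colon that ends the line needs no trailing space
  server: nginx          # a colon inside a line is followed by a space
  ports:                 # nested keys are indented with spaces, never tabs
    - 80                 # "- " starts a list item
    - 443                # items at the same indent belong to the same list
```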

1. Let's write a first playbook. A play usually starts from three keys:

- hosts: which hosts to operate on
- remote_user: which user to run the tasks as
- tasks: which tasks to run

The file `test_p1.yml` installs Nginx on the `webservers` group and starts it:

    [root@manager ~]# cat test_p1.yml
    - hosts: webservers
      tasks:
        - name: Installed Nginx Server
          yum:
            name: nginx
            state: present
        - name: Systemd Nginx Server
          systemd:
            name: nginx
            state: started
            enabled: yes

2. Run the playbook and watch the color of each returned status:

- Red: a task failed; an error message is usually shown.
- Yellow: the task ran on the remote host and changed something.
- Green: the host is already in the described state, so nothing had to be done again.

Check the syntax, do a dry run, then run the playbook for real:

    # 1. Check the syntax
    [root@manager ~]# ansible-playbook test_p1.yml --syntax-check

    # 2. Dry run (check mode)
    [root@manager ~]# ansible-playbook -C test_p1.yml

    # 3. Run the playbook
    [root@manager ~]# ansible-playbook test_p1.yml
    PLAY [webservers] ****************************************
    TASK [Gathering Facts] ***********************************
    ok: [172.16.1.8]
    ok: [172.16.1.7]
    TASK [Installed Nginx Server] ****************************
    ok: [172.16.1.7]
    ok: [172.16.1.8]
    TASK [Systemd Nginx Server] ******************************
    ok: [172.16.1.7]
    ok: [172.16.1.8]
    PLAY RECAP ***********************************************
    172.16.1.7 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
    172.16.1.8 : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

# 2. Playbook Case Studies

# 2.1 Deploying NFS with Ansible

1. Write the playbook that installs and configures the NFS service.

        [root@manager ~]# cat install_nfs_server.yml
        - hosts: webservers
          tasks:
            - name: 1.Installed NFS Server
              yum:
                name: nfs-utils
                state: present
            - name: 2.Configure NFS Server
              copy:
                src: ./exports.j2
                dest: /etc/exports
              notify: Restart NFS Server
            - name: 3.Init Group
              group:
                name: www
                gid: 666
            - name: 4.Init User
              user:
                name: www
                uid: 666
                group: www
                shell: /sbin/nologin
                create_home: no
            - name: 5.Init Create Directory
              file:
                path: /data
                state: directory
                owner: www
                group: www
                mode: "0755"
            - name: 6.Started NFS Server
              systemd:
                name: nfs
                state: started
                enabled: yes
          handlers:
            - name: Restart NFS Server
              systemd:
                name: nfs
                state: restarted

2. Prepare the `exports.j2` file the playbook depends on.

        [root@manager ~]# echo "/data 172.16.1.0/24(rw,sync,all_squash,anonuid=666,anongid=666)" > exports.j2

3. Check the playbook syntax and do a dry run.

        [root@manager ~]# ansible-playbook install_nfs_server.yml --syntax-check
        [root@manager ~]# ansible-playbook -C install_nfs_server.yml

4. Run the playbook.

        [root@manager ~]# ansible-playbook install_nfs_server.yml

5. Test the exports from a client.

        [root@manager ~]# showmount -e 172.16.1.7
        Export list for 172.16.1.7:
        /data 172.16.1.0/24
        [root@manager ~]# showmount -e 172.16.1.8
        Export list for 172.16.1.8:
        /data 172.16.1.0/24
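The NFS playbook relies on `notify` and `handlers` without spelling out how they interact. The fragment below is a reduced sketch of that mechanism, reusing the task and handler names from `install_nfs_server.yml`; the comments describe standard Ansible behavior.

```yaml
# Sketch only: the notify/handler pair from the NFS playbook in isolation.
- hosts: webservers
  tasks:
    - name: 2.Configure NFS Server
      copy:
        src: ./exports.j2
        dest: /etc/exports
      notify: Restart NFS Server   # queued only when this task reports "changed"
  handlers:
    - name: Restart NFS Server     # the name must match the notify string exactly
      systemd:
        name: nfs
        state: restarted
```

A notified handler runs once, after the tasks of the play have finished, no matter how many tasks notified it. On a rerun where `/etc/exports` is already up to date, the copy task reports `ok` and the service is not restarted.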

# 2.2 Deploying Rsync with Ansible

# 2.2.1 Deploying the Rsync Server with Ansible

1. Write the playbook that installs and configures the Rsync server.

        [root@manager ~]# cat install_rsync_server.yml
        - hosts: webservers
          tasks:
            - name: Installed Rsync Server
              yum:
                name: rsync
                state: present
            - name: Configure Rsync Server
              copy:
                src: ./rsyncd.conf.j2
                dest: /etc/rsyncd.conf
                owner: root
                group: root
              notify: Restart Rsync Server
            - name: Create Group
              group:
                name: www
                gid: 666
            - name: Create User
              user:
                name: www
                uid: 666
                group: www
                shell: /sbin/nologin
                create_home: no
            - name: Init Create Directory
              file:
                path: /backup
                state: directory
                owner: www
                group: www
                mode: "0755"
                recurse: yes
            - name: Rsync Server Virtual User Passwd
              copy:
                # the user name must match "auth users" in rsyncd.conf
                content: "rsync_backup:talent"
                dest: /etc/rsync.passwd
                owner: root
                group: root
                mode: "0600"
              notify: Restart Rsync Server
            - name: Started Rsync Server
              systemd:
                name: rsyncd
                state: started
                enabled: yes
          handlers:
            - name: Restart Rsync Server
              systemd:
                name: rsyncd
                state: restarted

2. Prepare the `rsyncd.conf.j2` file the playbook depends on.

        [root@manager ~]# cat rsyncd.conf.j2
        uid = www
        gid = www
        port = 873
        fake super = yes
        use chroot = no
        max connections = 200
        timeout = 600
        #ignore errors
        read only = false
        list = false
        auth users = rsync_backup
        secrets file = /etc/rsync.passwd
        log file = /var/log/rsyncd.log
        #####################################
        [backup]
        path = /backup

3. Check the playbook syntax and do a dry run.

        [root@manager ~]# ansible-playbook install_rsync_server.yml --syntax-check
        [root@manager ~]# ansible-playbook -C install_rsync_server.yml

4. Run the playbook.

        [root@manager ~]# ansible-playbook install_rsync_server.yml

5. Test Rsync by pushing a file to the `backup` module.

        [root@manager ~]# rsync -avz install_rsync_server.yml rsync_backup@172.16.1.7::backup

# 2.2.2 Deploying the Rsync Client with Ansible

1. Write the playbook that sets up the Rsync client.

        [root@manager ~]# cat rsync_client.yml
        - hosts: localhost
          tasks:
            - name: Create Scripts Path
              file:
                path: /scripts
                state: directory
                owner: root
                group: root
                mode: "0755"
            - name: Push Scripts /scripts
              copy:
                src: client_push_data.sh
                dest: /scripts/client_push_data.sh
                group: root
                owner: root
                mode: "0755"
            - name: Configure Crontab Job
              cron:
                name: "Push Backup Data Remote Rsync Server"
                minute: "0"
                hour: "3"
                job: "/bin/bash /scripts/client_push_data.sh &>/dev/null"

2. Prepare the `client_push_data.sh` script the playbook depends on.

        [root@manager ~]# cat client_push_data.sh
        #!/bin/bash
        export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin

        # 1. Define variables
        Host=$(hostname)
        Ip=$(ifconfig ens192 | awk 'NR==2{print $2}')
        Date=$(date +%F)
        BackupDir=/backup/mysql
        Dest=${BackupDir}/${Host}_${Ip}_${Date}
        FILE_NAME=mysql_backup_$(date '+%Y%m%d%H%M%S')
        OLDBINLOG=/var/lib/mysql/oldbinlog

        # 2. Create the backup directory
        if [ ! -d "$Dest" ]; then
            mkdir -p "$Dest"
        fi

        # 3. Dump the database, compress the dump, remove the raw .sql file
        /usr/bin/mysqldump -u'root' -p'your passwd' nf_flms > $Dest/nf-flms_${FILE_NAME}.sql
        tar -czvf $Dest/${FILE_NAME}.tar.gz $Dest/nf-flms_${FILE_NAME}.sql
        rm -rf $Dest/*${FILE_NAME}.sql
        echo "Your database backup successfully"

        # 4. Write checksums for verification
        md5sum $Dest/* > $Dest/backup_check_$Date

        # 5. Push the backup directory to the rsync server
        Rsync_Ip=192.168.1.145
        Rsync_user=rsync_backup
        Rsync_Module=backup_mysql
        export RSYNC_PASSWORD='your passwd'
        rsync -avz $Dest $Rsync_user@$Rsync_Ip::$Rsync_Module

        # 6. Delete backup directories older than 15 days
        find $BackupDir -mindepth 1 -type d -mtime +15 | xargs rm -rf
        echo "remove file successfully"

        [root@db01 ~]# chmod +x /scripts/etc_backup.sh
        [root@db01 ~]# crontab -e
        00 03 * * * /bin/bash /scripts/mysql_backup.sh &> /dev/null

3. Check the playbook syntax and do a dry run.

        [root@manager ~]# ansible-playbook rsync_client.yml --syntax-check
        [root@manager ~]# ansible-playbook -C rsync_client.yml

4. Run the playbook.

        [root@manager ~]# ansible-playbook rsync_client.yml

5. Verify the cron entry and the deployed script.

        [root@manager ~]# crontab -l
        #Ansible: Push Backup Data Remote Rsync Server
        0 3 * * * /bin/bash /scripts/client_push_data.sh &>/dev/null
        [root@manager ~]# ll /scripts/client_push_data.sh
        -rwxr-xr-x 1 root root 1185 Oct 25 22:29 /scripts/client_push_data.sh

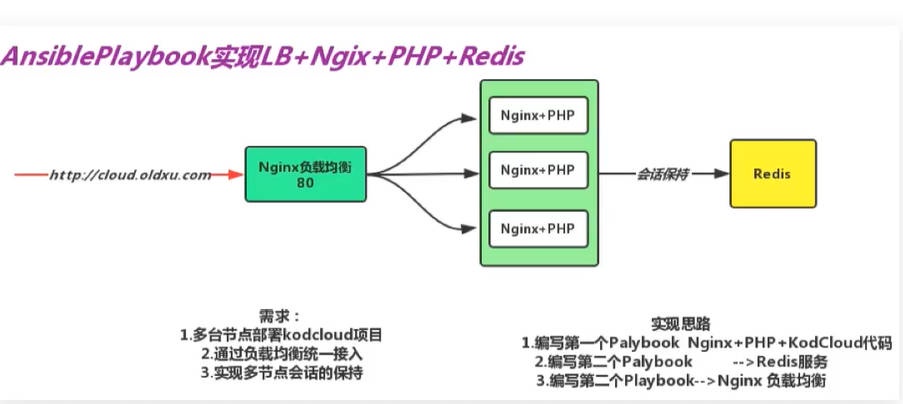

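# 2.3 Deploying a Cluster Architecture with Playbooks

# 2.3.1 Project Requirements and Plan

1. Deploy phpMyAdmin on multiple nodes.
2. Use Nginx as the load balancer that dispatches all requests.
3. Use Redis so that sessions persist across the nodes.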
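Each of these requirements is implemented as its own playbook in sections 2.3.3 to 2.3.5. One possible way to run them in a fixed order is a top-level playbook built with `import_playbook`. The file name `site.yml` is an assumption and does not appear in the original walkthrough.

```yaml
# site.yml (hypothetical file name): run the cluster playbooks in order.
# The imported file names are the ones written in sections 2.3.3 to 2.3.5.
- import_playbook: redis_server.yml          # session store first
- import_playbook: Nginx_Php_server.yml      # then the web nodes
- import_playbook: nginx_server_lb_http.yml  # finally the load balancers
```

Running `ansible-playbook site.yml` from the `web_cluster` directory would then execute the three playbooks one after another.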

# 2.3.2 Environment Preparation

    # 1. Passwordless SSH login
    [root@manager web_cluster]# ssh-keygen
    [root@manager web_cluster]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.1.41
    [root@manager web_cluster]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.1.7
    [root@manager web_cluster]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.1.8
    [root@manager web_cluster]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.1.6
    [root@manager web_cluster]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@172.16.1.5

    # 2. Ansible configuration files
    [root@manager ~]# mkdir web_cluster
    [root@manager web_cluster]# mkdir files
    [root@manager web_cluster]# cp /etc/ansible/ansible.cfg ./
    [root@manager web_cluster]# cp /etc/ansible/hosts ./

    # 3. Inventory
    [root@manager web_cluster]# cat hosts
    [webservers]
    172.16.1.7
    172.16.1.8
    [dbservers]
    172.16.1.41
    [lbservers]
    172.16.1.5
    172.16.1.6

    # 4. Test connectivity
    [root@manager web_cluster]# ansible all -m ping

# 2.3.3 Deploying Redis with Ansible

1. Write the playbook that installs and configures the Redis service.

        [root@manager web_cluster]# cat redis_server.yml
        - hosts: dbservers
          tasks:
            - name: Installed Redis Server
              yum:
                name: redis
                state: present
            - name: Configure Redis Server
              copy:
                src: ./files/redis.conf.j2
                dest: /etc/redis.conf
                owner: redis
                group: root
                mode: "0640"
              notify: Restart Redis Server
            - name: Systemd Redis Server
              systemd:
                name: redis
                state: started
                enabled: yes
          handlers:
            - name: Restart Redis Server
              systemd:
                name: redis
                state: restarted

2. Prepare the `redis.conf.j2` file the playbook depends on.

        [root@manager web_cluster]# cp /etc/redis.conf /root/web_cluster/files/redis.conf.j2
        [root@manager files]# sed -i "/^bind 127.0.0.1/c bind 127.0.0.1 192.168.40.41" redis.conf.j2

3. Check the playbook syntax and do a dry run.

        [root@manager web_cluster]# ansible-playbook redis_server.yml --syntax-check
        [root@manager web_cluster]# ansible-playbook -C redis_server.yml

4. Run the playbook.

        [root@manager web_cluster]# ansible-playbook redis_server.yml

5. Test the connection from a client.

        [root@manager web_cluster]# redis-cli -h 192.168.40.41

# 2.3.4 Deploying Nginx + PHP with Ansible

1. Write the playbook that installs and configures Nginx and PHP.

        [root@manager web_cluster]# cat Nginx_Php_server.yml
        - hosts: webservers
          tasks:
            - name: Installed Nginx Server
              yum:
                name: nginx
                state: present
            - name: Installed PHP Server
              yum:
                name: "{{ pack }}"
              vars:
                pack:
                  - php71w-fpm
                  - php71w-gd
                  - php71w-mbstring
                  - php71w-mcrypt
                  - php71w-mysqlnd
                  - php71w-opcache
                  - php71w-pdo
                  - php71w-pear
                  - php71w-pecl-igbinary
                  - php71w-pecl-memcached
                  - mod_php71w
                  - php71w-pecl-mongodb
                  - php71w-pecl-redis
                  - php71w-cli
                  - php71w-process
                  - php71w-common
                  - php71w-xml
                  - php71w-devel
                  - php71w-embedded
            - name: Configure Nginx Server
              copy:
                src: ./files/nginx.conf.j2
                dest: /etc/nginx/nginx.conf
                owner: root
                group: root
                mode: "0644"
              notify: Restart Nginx Server
            - name: Create Group
              group:
                name: www
                gid: 666
            - name: Create User
              user:
                name: www
                uid: 666
                group: www
                shell: /sbin/nologin
                create_home: no
            - name: Started Nginx Server
              systemd:
                name: nginx
                state: started
                enabled: yes
            # PHP
            - name: Configure PHP Server php.ini
              copy:
                src: ./files/php.ini.j2
                dest: /etc/php.ini
                owner: root
                group: root
                mode: "0644"
              notify: Restart PHP Server
            - name: Configure PHP Server php-fpm.d/www.conf
              copy:
                src: ./files/www.conf.j2
                dest: /etc/php-fpm.d/www.conf
                owner: root
                group: root
                mode: "0644"
              notify: Restart PHP Server
            - name: Systemd PHP Server
              systemd:
                name: php-fpm
                state: started
                enabled: yes
            # Code
            - name: Copy Nginx Virtual Site
              copy:
                src: ./files/ansible.hmallleasing.com.conf.j2
                dest: /etc/nginx/conf.d/ansible.hmallleasing.com.conf
                owner: root
                group: root
                mode: "0644"
              notify: Restart Nginx Server
            - name: Create Ansible Directory
              file:
                path: /ansible/
                state: directory
                owner: www
                group: www
                mode: "0755"
                recurse: yes
            - name: Unarchive PHP Code
              unarchive:
                src: files/phpMyAdmin-4.8.4-all-languages.zip
                dest: /ansible
                # skip extraction once the deployed config file exists
                creates: /ansible/phpMyAdmin-4.8.4-all-languages/config.inc.php
            - name: Create Link
              file:
                src: /ansible/phpMyAdmin-4.8.4-all-languages/
                dest: /ansible/phpmyadmin
                state: link
            - name: Change phpmyadmin Configure
              copy:
                src: ./files/config.inc.php.j2
                dest: /ansible/phpmyadmin/config.inc.php
          handlers:
            - name: Restart Nginx Server
              systemd:
                name: nginx
                state: restarted
            - name: Restart PHP Server
              systemd:
                name: php-fpm
                state: restarted

2. Prepare the Nginx files the playbook depends on: `nginx.conf.j2` and `ansible.hmallleasing.com.conf.j2`.

        # 1. Configuration file nginx.conf.j2
        [root@manager web_cluster]# scp root@172.16.1.7:/etc/nginx/nginx.conf ./files/nginx.conf.j2
        [root@manager web_cluster]# cat ./files/nginx.conf.j2
        user www;
        worker_processes auto;
        error_log /var/log/nginx/error.log notice;
        pid /var/run/nginx.pid;

        events {
            worker_connections 1024;
        }

        http {
            include /etc/nginx/mime.types;
            default_type application/octet-stream;
            log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                            '$status $body_bytes_sent "$http_referer" '
                            '"$http_user_agent" "$http_x_forwarded_for"';
            access_log /var/log/nginx/access.log main;
            sendfile on;
            #tcp_nopush on;
            keepalive_timeout 65;
            #gzip on;
            include /etc/nginx/conf.d/*.conf;
        }

        # 2. Configuration file ansible.hmallleasing.com.conf.j2
        [root@manager web_cluster]# cat files/ansible.hmallleasing.com.conf.j2
        server {
            server_name ansible.hmallleasing.com;
            listen 80;
            root /ansible/phpmyadmin;

            location / {
                index index.php index.html;
            }

            location ~ \.php$ {
                fastcgi_pass 127.0.0.1:9000;
                fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
                # fastcgi_param HTTPS on;  # lets the frontend use HTTPS while the backend uses HTTP
                include fastcgi_params;
            }
        }

3. Prepare the PHP configuration files the playbook depends on: `php.ini.j2` and `www.conf.j2`.

        [root@manager web_cluster]# scp root@172.16.1.7:/etc/php.ini ./files/php.ini.j2
        [root@manager web_cluster]# scp root@172.16.1.7:/etc/php-fpm.d/www.conf ./files/www.conf.j2
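The requirement in 2.3.1 is that sessions are shared through Redis, but the walkthrough does not show which PHP settings achieve that. A common approach with the `php71w-pecl-redis` extension is to point the php-fpm pool's session handler at Redis. The sketch below edits the local `files/www.conf.j2` before it is deployed. The playbook itself, the variable `redis_host`, and its value are assumptions, so set the address to whatever Redis actually listens on.

```yaml
# Sketch only: prepare files/www.conf.j2 on the manager so PHP sessions go to Redis.
# redis_host is a hypothetical variable; its value here is an assumption.
- hosts: localhost
  connection: local
  vars:
    redis_host: 172.16.1.41
  tasks:
    - name: Use the redis session handler in the php-fpm pool
      lineinfile:
        path: "{{ playbook_dir }}/files/www.conf.j2"
        regexp: '^php_value\[session\.save_handler\]'
        line: 'php_value[session.save_handler] = redis'
    - name: Point the session path at the Redis server
      lineinfile:
        path: "{{ playbook_dir }}/files/www.conf.j2"
        regexp: '^php_value\[session\.save_path\]'
        line: 'php_value[session.save_path] = tcp://{{ redis_host }}:6379'
```

Because `Nginx_Php_server.yml` copies `www.conf.j2` to every web node and notifies the php-fpm handler, editing the source file keeps all nodes consistent on the next run.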

4. Prepare the application code, phpMyAdmin.

        [root@manager files]# wget https://files.phpmyadmin.net/phpMyAdmin/4.8.4/phpMyAdmin-4.8.4-all-languages.zip

5. Prepare `config.inc.php.j2`, the phpMyAdmin database connection configuration file.

        [root@manager files]# mv config.inc.php config.inc.php.j2

6. Check the playbook syntax and do a dry run.

        [root@manager web_cluster]# ansible-playbook Nginx_Php_server.yml --syntax-check
        [root@manager web_cluster]# ansible-playbook -C Nginx_Php_server.yml

7. Run the playbook.

        [root@manager web_cluster]# ansible-playbook Nginx_Php_server.yml

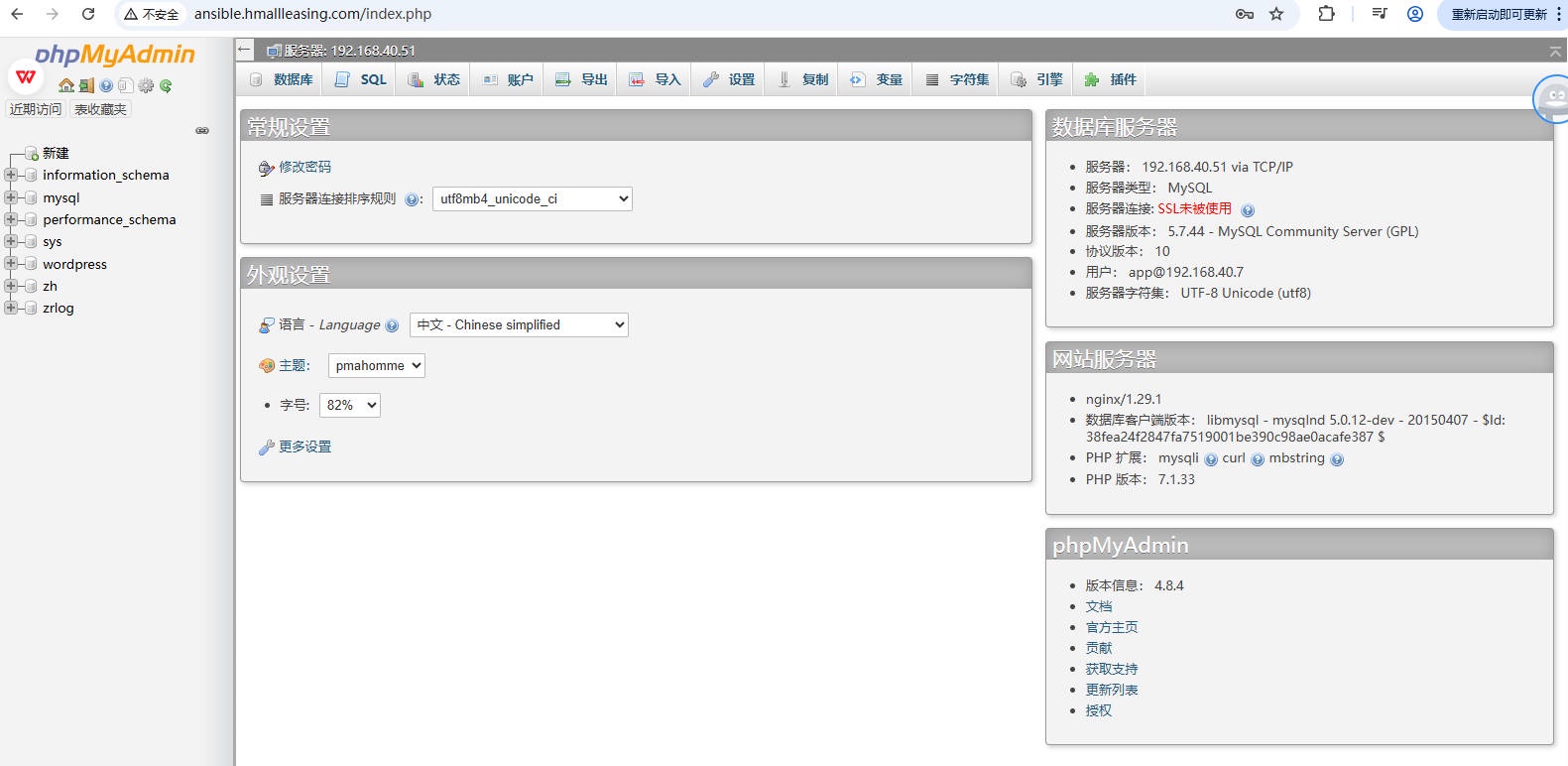

8. Test access.

# 2.3.5 Deploying the Nginx Load Balancer with Ansible

1. Write the playbook that installs and configures the Nginx load balancer.

        [root@manager web_cluster]# cat nginx_server_lb_http.yml
        - hosts: lbservers
          tasks:
            - name: Installed Nginx Server
              yum:
                name: nginx
                state: present
            - name: Configure Nginx Server nginx.conf
              copy:
                src: ./files/nginx_lb.conf.j2
                dest: /etc/nginx/nginx.conf
              notify: Restart Nginx Server
            - name: Configure conf.d/proxy.conf
              copy:
                src: ./files/proxy_ansible.hmallleasing.com.conf.j2
                dest: /etc/nginx/conf.d/proxy_ansible.hmallleasing.com.conf
              notify: Restart Nginx Server
            - name: Started Nginx Server
              systemd:
                name: nginx
                state: started
          handlers:
            - name: Restart Nginx Server
              systemd:
                name: nginx
                state: restarted

2. Prepare the Nginx files the playbook depends on: `nginx_lb.conf.j2` and `proxy_ansible.hmallleasing.com.conf.j2`.

        # 1. Configuration file nginx_lb.conf.j2
        [root@manager web_cluster]# cat files/nginx_lb.conf.j2
        user nginx;
        worker_processes auto;
        error_log /var/log/nginx/error.log notice;
        pid /var/run/nginx.pid;

        events {
            worker_connections 1024;
        }

        http {
            include /etc/nginx/mime.types;
            default_type application/octet-stream;
            log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                            '$status $body_bytes_sent "$http_referer" '
                            '"$http_user_agent" "$http_x_forwarded_for"';
            access_log /var/log/nginx/access.log main;
            sendfile on;
            #tcp_nopush on;
            keepalive_timeout 65;
            #gzip on;
            include /etc/nginx/conf.d/*.conf;
        }

        # 2. Configuration file proxy_ansible.hmallleasing.com.conf.j2
        [root@manager web_cluster]# cat files/proxy_ansible.hmallleasing.com.conf.j2
        upstream ansible {
            server 172.16.1.7:80;
            server 172.16.1.8:80;
        }

        server {
            listen 80;
            server_name ansible.hmallleasing.com;

            location / {
                proxy_pass http://ansible;
                proxy_set_header Host $http_host;
            }
        }

3. Check the playbook syntax and do a dry run.

        [root@manager web_cluster]# ansible-playbook nginx_server_lb_http.yml --syntax-check
        [root@manager web_cluster]# ansible-playbook -C nginx_server_lb_http.yml

4. Run the playbook.

        [root@manager web_cluster]# ansible-playbook nginx_server_lb_http.yml

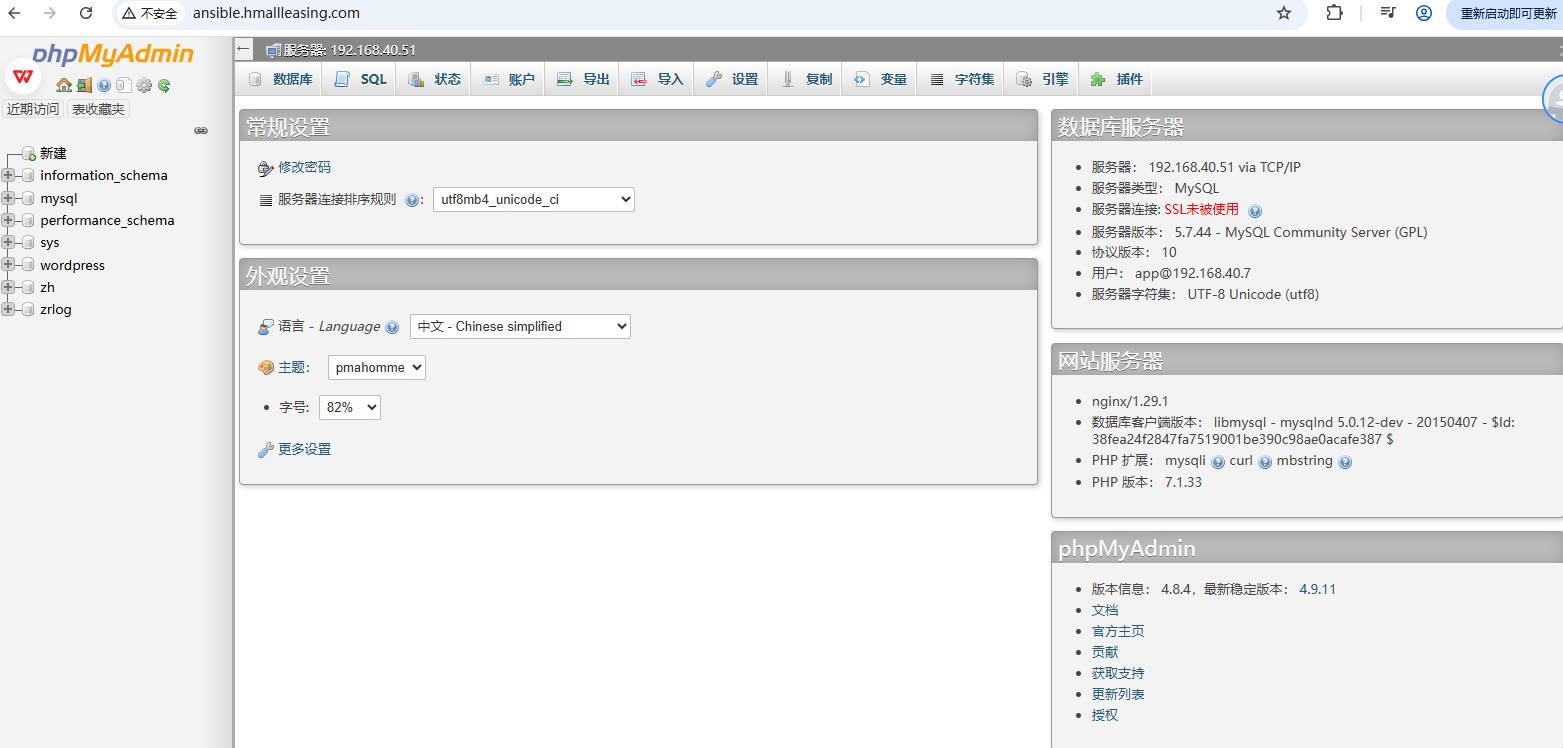

5. Test access.

# 2.3.6 Upgrading the Nginx Load Balancer to HTTPS

1. Write the playbook that installs and configures the Nginx load balancer with HTTPS.

        [root@manager web_cluster]# cat nginx_server_lb_https.yml
        - hosts: lbservers
          tasks:
            - name: Installed Nginx Server
              yum:
                name: nginx
                state: present
            - name: Configure Nginx Server nginx.conf
              copy:
                src: ./files/nginx_lb.conf.j2
                dest: /etc/nginx/nginx.conf
              notify: Restart Nginx Server
            - name: Create TLS Directory
              file:
                path: /ssl
                state: directory
                owner: root
                group: root
                mode: "0755"
            - name: Unarchive TLS Certificate File
              unarchive:
                src: ./files/SSLKEY.zip
                dest: /ssl
                # a task accepts one creates key; the path matches ssl_certificate in the vhost
                creates: /ssl/SSLKEY/hmallleasing.com.pem
            - name: Configure conf.d/proxy.conf
              copy:
                src: ./files/proxy_ansible.hmallleasing.com.https.conf.j2
                dest: /etc/nginx/conf.d/proxy_ansible.hmallleasing.com.conf
              notify: Restart Nginx Server
            - name: Started Nginx Server
              systemd:
                name: nginx
                state: started
          handlers:
            - name: Restart Nginx Server
              systemd:
                name: nginx
                state: restarted

2. Prepare the Nginx files the playbook depends on: `nginx_lb.conf.j2` (unchanged from 2.3.5) and `proxy_ansible.hmallleasing.com.https.conf.j2`.

        [root@manager web_cluster]# cat files/proxy_ansible.hmallleasing.com.https.conf.j2
        upstream ansible_https {
            server 172.16.1.7:80;
            server 172.16.1.8:80;
        }

        server {
            listen 80;
            server_name ansible.hmallleasing.com;
            return 302 https://$http_host$request_uri;
        }

        server {
            listen 443 ssl http2;
            server_name ansible.hmallleasing.com;
            ssl_certificate /ssl/SSLKEY/hmallleasing.com.pem;
            ssl_certificate_key /ssl/SSLKEY/hmallleasing.com.key;

            location / {
                proxy_pass http://ansible_https;
                proxy_set_header Host $http_host;
            }
        }

3. Prepare the SSL certificate archive the playbook depends on.

        [root@manager web_cluster]# ll files/SSLKEY.zip
        -rw-r--r-- 1 root root 4993 Oct 26 21:20 files/SSLKEY.zip

4. Check the playbook syntax and do a dry run.

        [root@manager web_cluster]# ansible-playbook nginx_server_lb_https.yml --syntax-check
        [root@manager web_cluster]# ansible-playbook -C nginx_server_lb_https.yml

5. Run the playbook.

        [root@manager web_cluster]# ansible-playbook nginx_server_lb_https.yml

6. Test access.

# 2.3.7 Replacing Nginx with HAProxy

1. Write the playbook that installs and configures the HAProxy load balancer.

        [root@manager web_cluster]# cat haproxy_server_http.yml
        - hosts: lbservers
          tasks:
            - name: Unarchive /tmp Directory
              unarchive:
                src: haproxy_cfg/haproxy22.rpm.tar.gz
                dest: /tmp
                creates: /tmp/haproxy
                remote_src: no
            - name: Installed Haproxy
              yum:
                name: "{{ pack }}"
              vars:
                pack:
                  - /tmp/haproxy/haproxy22-2.2.9-3.el7.ius.x86_64.rpm
                  - /tmp/haproxy/lua53u-5.3.4-1.ius.el7.x86_64.rpm
                  - /tmp/haproxy/lua53u-devel-5.3.4-1.ius.el7.x86_64.rpm
                  - /tmp/haproxy/lua53u-libs-5.3.4-1.ius.el7.x86_64.rpm
                  - /tmp/haproxy/lua53u-static-5.3.4-1.ius.el7.x86_64.rpm
            - name: Configure Haproxy Server
              copy:
                src: ./haproxy_cfg/haproxy_http.cfg.j2
                dest: /etc/haproxy/haproxy.cfg
                owner: root
                group: root
                mode: "0644"
              notify: Restart Haproxy Server
            - name: Started Haproxy Server
              systemd:
                name: haproxy
                state: started
          handlers:
            - name: Restart Haproxy Server
              systemd:
                name: haproxy
                state: restarted

2. Prepare the HAProxy configuration file used by the playbook.

        [root@manager web_cluster]# cat haproxy_cfg/haproxy_http.cfg.j2
        #---------------------------------------------------------------------
        # Global settings
        #---------------------------------------------------------------------
        global
            log 127.0.0.1 local2
            chroot /var/lib/haproxy
            pidfile /var/run/haproxy.pid
            maxconn 4000
            user haproxy
            group haproxy
            daemon
            # turn on stats unix socket
            stats socket /var/lib/haproxy/stats level admin
            #nbproc 4
            nbthread 8
            cpu-map 1 0
            cpu-map 2 1
            cpu-map 3 2
            cpu-map 4 3

        defaults
            mode http
            log global
            option httplog
            option dontlognull
            option http-server-close
            option forwardfor except 127.0.0.0/8
            option redispatch
            retries 3
            timeout http-request 10s
            timeout queue 1m
            timeout connect 10s
            timeout client 1m
            timeout server 1m
            timeout http-keep-alive 10s
            timeout check 10s
            maxconn 3000

        #---------------------------------------------------------------------
        # main frontend which proxys to the backends
        #---------------------------------------------------------------------
        frontend web
            bind *:8080
            mode http
            acl ansible_domain hdr_reg(host) -i ansible.oldxu.net
            use_backend ansible_cluster if ansible_domain

        backend ansible_cluster
            balance roundrobin
            option httpchk HEAD / HTTP/1.1\r\nHost:\ ansible.oldxu.net
            server 172.16.1.7 172.16.1.7:80 check port 80 inter 3s rise 2 fall 3
            server 172.16.1.8 172.16.1.8:80 check port 80 inter 3s rise 2 fall 3

3. Prepare the HAProxy installation package used by the playbook.

        [root@manager web_cluster]# ll haproxy_cfg/haproxy22.rpm.tar.gz
        -rw-r--r-- 1 root root 2344836 Oct 26 22:07 haproxy_cfg/haproxy22.rpm.tar.gz

4. Check the playbook syntax and do a dry run.

        [root@manager web_cluster]# ansible-playbook haproxy_server_http.yml --syntax-check
        [root@manager web_cluster]# ansible-playbook -C haproxy_server_http.yml

5. Run the playbook.

        [root@manager web_cluster]# ansible-playbook haproxy_server_http.yml
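A broken `haproxy.cfg` would only surface when the handler restarts the service. One optional safeguard, not part of the original playbook, is the `validate` parameter of the `copy` module, which runs a check command against the new file before it replaces the live one. The sketch below is a variant of the "Configure Haproxy Server" task and assumes the `haproxy` binary is on the PATH of the load balancer hosts.

```yaml
# Sketch only: a variant of the "Configure Haproxy Server" task.
# %s is replaced by the path of the temporary file being validated;
# "haproxy -c -f <file>" checks a configuration without starting the proxy.
- name: Configure Haproxy Server
  copy:
    src: ./haproxy_cfg/haproxy_http.cfg.j2
    dest: /etc/haproxy/haproxy.cfg
    owner: root
    group: root
    mode: "0644"
    validate: haproxy -c -f %s
  notify: Restart Haproxy Server
```

If the check command fails, the task fails and the existing `/etc/haproxy/haproxy.cfg` is left untouched, so the handler is never triggered with an invalid file.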